About Atla

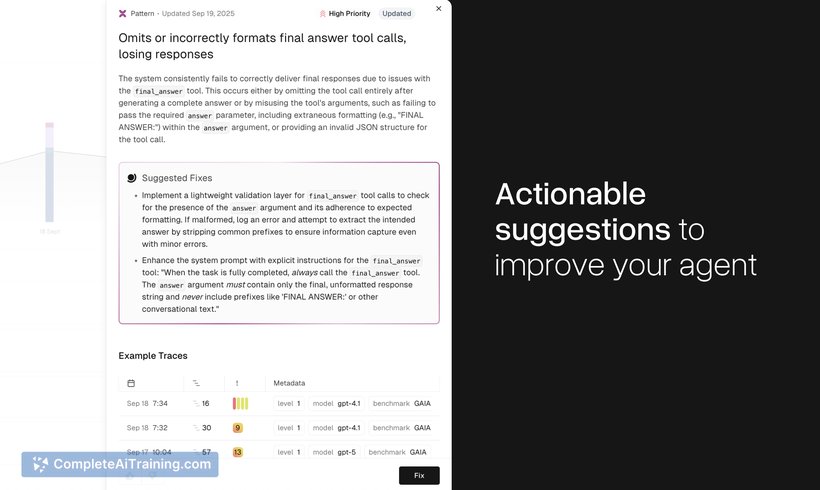

Atla is an evaluation tool that automatically discovers underlying issues in AI agents. It detects step-level failures, groups recurring error patterns, and surfaces actionable recommendations so teams can fix problems before users encounter them.

Review

This tool focuses on making agent debugging faster and more data-driven by converting long trace logs into prioritized issues. It combines automated step-level detection, clustering of recurring failures, and integrations that help teams close the loop from diagnosis to fix.

Key Features

- Step-level failure detection that highlights the exact step where an agent went wrong.

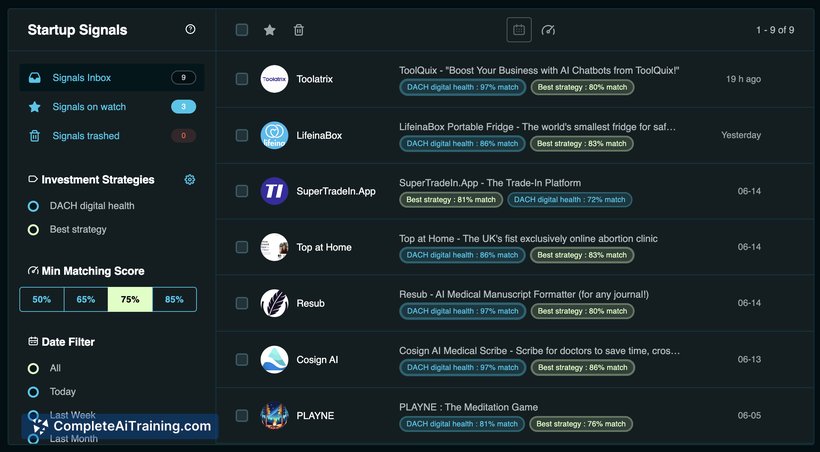

- Clustering of recurring error patterns to help prioritize the failures that have the biggest impact.

- Step annotations and trace querying so teams can investigate root causes without sifting through raw logs.

- Actionable fix suggestions and integration with coding agents to help generate and apply small repairs.

- Testing tools including replay of failing steps and measurement of changes after prompt, model, or code edits.
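To make the first two features concrete, here is a minimal sketch of step-level failure detection followed by clustering. It is not Atla's implementation or API: the trace format, the `TRACES` sample data, and the helper names `first_failure`, `normalize`, and `cluster_failures` are all hypothetical, and the regex-based normalization is a simple stand-in for whatever grouping method the product actually uses.

```python
import re
from collections import Counter

# Hypothetical trace format (an assumption, not Atla's schema): each trace is
# a list of steps, and each step has a "name", an "ok" flag, and an optional
# "error" message.
TRACES = [
    [{"name": "plan", "ok": True},
     {"name": "search", "ok": False, "error": "Timeout after 30s on request 8841"}],
    [{"name": "plan", "ok": True},
     {"name": "search", "ok": False, "error": "Timeout after 12s on request 17"}],
    [{"name": "plan", "ok": True},
     {"name": "search", "ok": True},
     {"name": "summarize", "ok": False, "error": "Context length exceeded: 9000 tokens"}],
    [{"name": "plan", "ok": True}, {"name": "search", "ok": True}],
]


def first_failure(trace):
    """Return (index, step) for the first failing step, or None if all passed."""
    for index, step in enumerate(trace):
        if not step["ok"]:
            return index, step
    return None


def normalize(message):
    """Lowercase and replace digit runs so similar errors share one key."""
    return re.sub(r"\d+", "<n>", message.lower())


def cluster_failures(traces):
    """Count failures by (step name, normalized error), most frequent first."""
    counts = Counter()
    for trace in traces:
        found = first_failure(trace)
        if found is not None:
            _, step = found
            counts[(step["name"], normalize(step.get("error", "")))] += 1
    return counts.most_common()


clusters = cluster_failures(TRACES)
for (step_name, pattern), count in clusters:
    print(f"{count}x at step '{step_name}': {pattern}")
```

Ranking the clusters by count is what turns a pile of traces into a prioritized list: the two timeout failures collapse into one recurring issue at the `search` step, which ranks above the single `summarize` failure.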

Pricing and Value

Atla offers free options to get started, with paid plans aimed at teams that need more scale and tighter integrations. The value proposition is clear for engineering teams: reduce time spent hunting bugs in traces, prioritize recurring issues, and ship focused fixes faster, often moving remediation from weeks to hours.

Pros

- Automates detection of failures at the step level, reducing manual log review.

- Clustering surfaces recurring patterns so teams can focus on high-impact problems.

- Generates targeted recommendations that can be turned into small code or prompt changes.

- Supports replay and testing workflows to validate fixes and measure regressions.

- Extends beyond text to support voice and other agent modalities.

Cons

- Clustering, and the handling of complex multi-step edge cases that depend on context, are still evolving.

- Some advanced integrations and workflow refinements are listed as upcoming, so larger teams may need to wait for tighter Git and dev-tool hooks.

- Public feedback is limited at launch, so there is little evidence yet of how the tool performs at real-world scale.

Atla is best suited for engineering teams running multi-step AI agents who need to reduce noise in logs and prioritize recurring failures. It fits companies deploying agents in domains like legal, sales, and productivity that want faster, data-driven debugging and measurable improvements in agent reliability.
