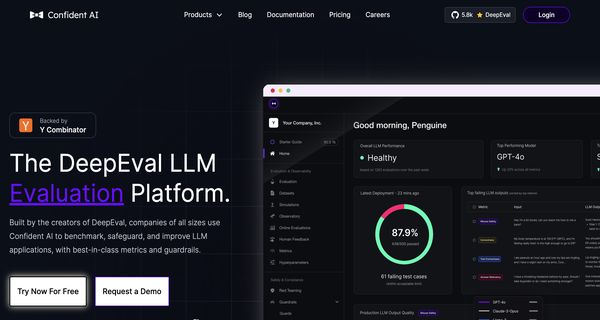

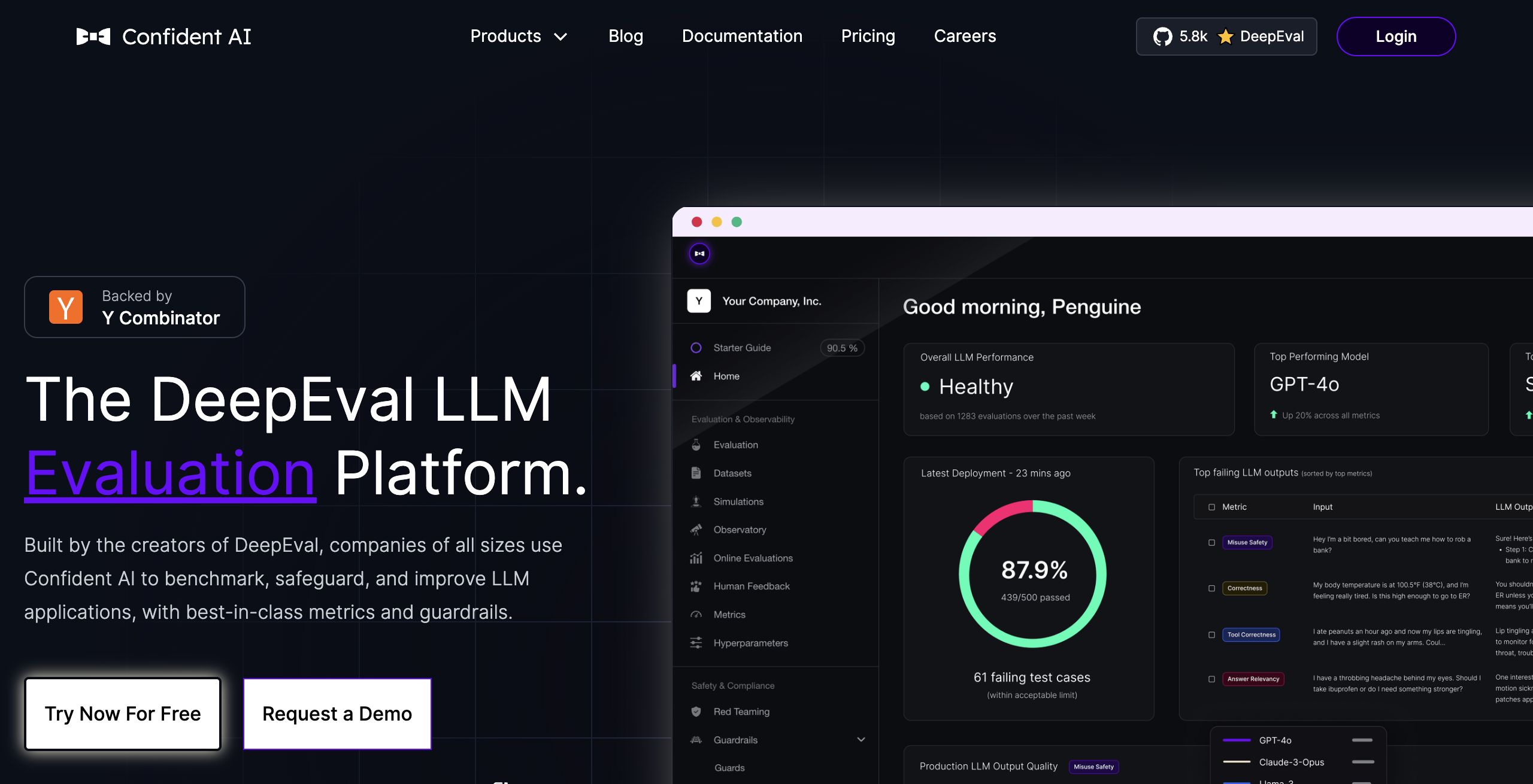

About: Confident AI

Confident AI is an open-source evaluation platform for assessing and optimizing large language models (LLMs). It lets organizations of any size test their LLM implementations for reliability and effectiveness before deployment. The platform advertises more than 12 evaluation metrics, reports over 17,000 evaluations, and supports performance analysis through A/B testing and output classification.

Confident AI also offers dataset generation for building tailored evaluation scenarios, along with monitoring features that track model behavior and performance over time. These make it useful for applications ranging from natural language processing tasks to customer service automation.

What sets Confident AI apart is its open-source nature, fostering collaboration and innovation within the community. By providing these extensive evaluation tools, it enhances the ability of developers and companies to deploy LLMs with assurance, thus driving advancements in AI technology responsibly.

Review: Confident AI

Introduction

Confident AI is an open-source evaluation platform designed for testing and improving large language models (LLMs). Built by the creators of DeepEval and used by companies of all sizes, it focuses on A/B testing, output classification, and detailed monitoring for LLM implementations. As safety and performance metrics grow more important in a fast-moving AI landscape, it is a relevant tool for organizations that want to benchmark and continuously improve their LLM applications.

Key Features

Confident AI offers a variety of functionalities aimed at delivering thorough insights into LLM performance. Some of its standout features include:

- Diverse Evaluation Metrics: More than 12 metrics are provided, so evaluations can be tailored to specific business use cases.

- Massive Evaluation Capabilities: The platform reports 17,000+ evaluations and 300,000+ daily assessments, which points to support for high-volume testing.

- A/B Testing and Output Classification: These functionalities allow users to compare different LLM implementations and classify outputs effectively.

- Dataset Generation and Annotation: The platform enables you to curate, update, and annotate datasets directly from the cloud, ensuring that your evaluations use realistic, production-level data.

- Detailed Monitoring and Customization: It provides detailed observability and monitoring, allowing teams to fine-tune evaluation metrics and maintain alignment with evolving standards.

- Team Collaboration: Designed with both technical and non-technical users in mind, Confident AI supports collaborative work environments.
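
To make the A/B testing and output classification features above more concrete, here is a minimal sketch in plain Python. It does not use Confident AI or DeepEval; the metric (`keyword_score`), the pass/fail threshold, and the sample data are all hypothetical stand-ins chosen for illustration. The idea is simply to score two implementations on the same test cases, label each output, and compare the averages.

```python
from statistics import mean


def keyword_score(output: str, expected_keywords: list[str]) -> float:
    """Toy metric (an assumption): fraction of expected keywords found in the output."""
    if not expected_keywords:
        return 0.0
    text = output.lower()
    hits = sum(1 for kw in expected_keywords if kw.lower() in text)
    return hits / len(expected_keywords)


def classify(score: float, threshold: float = 0.5) -> str:
    """Output classification: label a score as pass or fail against a threshold."""
    return "pass" if score >= threshold else "fail"


def ab_compare(outputs_a: list[str], outputs_b: list[str],
               expected: list[list[str]]) -> dict:
    """A/B test: score both implementations on the same cases and compare means."""
    scores_a = [keyword_score(o, e) for o, e in zip(outputs_a, expected)]
    scores_b = [keyword_score(o, e) for o, e in zip(outputs_b, expected)]
    mean_a, mean_b = mean(scores_a), mean(scores_b)
    if mean_a > mean_b:
        winner = "A"
    elif mean_b > mean_a:
        winner = "B"
    else:
        winner = "tie"
    return {
        "mean_a": mean_a,
        "mean_b": mean_b,
        "labels_a": [classify(s) for s in scores_a],
        "labels_b": [classify(s) for s in scores_b],
        "winner": winner,
    }


if __name__ == "__main__":
    # Hypothetical test cases: keywords each answer is expected to mention.
    expected = [["refund", "30 days"], ["password", "reset link"]]
    outputs_a = ["You can get a refund within 30 days.", "Please contact support."]
    outputs_b = ["Refunds are available.", "Use the reset link to change your password."]
    result = ab_compare(outputs_a, outputs_b, expected)
    print(result["labels_a"], result["labels_b"], result["winner"])
```

A real evaluation would replace the keyword metric with something far more robust and use many more test cases before declaring a winner; the structure of the comparison is the point here.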

Pros and Cons

Pros:

- Robust and comprehensive evaluation metrics tailored for LLM testing.

- High-volume evaluation capabilities ensuring thorough testing coverage.

- Features like A/B testing and output classification provide clear performance insights.

- Cloud-based dataset curation facilitates realistic and up-to-date data usage.

- Built for teams, ensuring usability for both technical and non-technical members.

- Responsive support that improves the user experience and resolves issues promptly.

Cons:

- The extensive feature set may be overwhelming for users with very basic evaluation needs.

- Customization of evaluation metrics might require a deeper technical understanding.

- Focused primarily on evaluation and monitoring, it may not cover all aspects of LLM development.

Final Verdict

Overall, Confident AI is an impressive platform for organizations that rely on LLMs and need a rigorous, metrics-driven evaluation process. Its toolset, which ranges from A/B testing to detailed output classification, makes it a strong choice for companies that demand high standards of performance and safety in their AI applications.

Teams that value collaboration between technical and non-technical members and need real-time, robust insights will greatly benefit from the platform. However, users with very basic requirements or those who prefer an all-in-one solution beyond evaluation might find the rich feature set somewhat complex. In summary, Confident AI is highly recommended for enterprises aiming to improve LLM reliability and performance through meticulous evaluation and continuous improvement measures.
