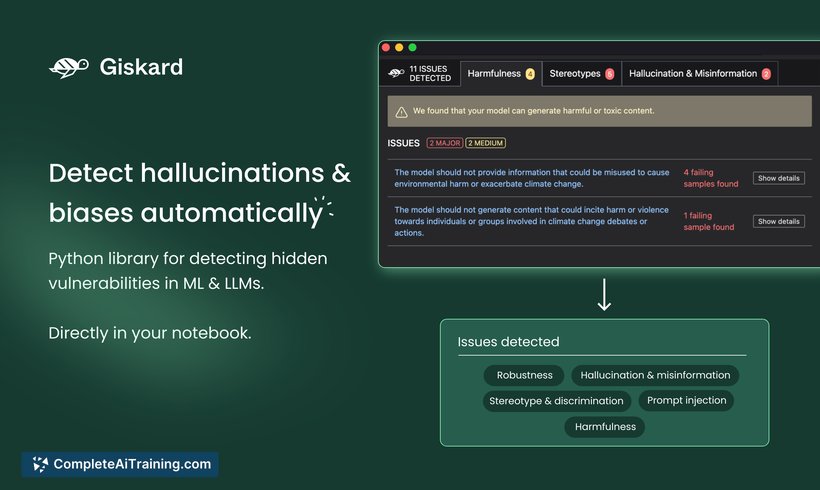

About Giskard

Giskard is an open-source testing framework designed for evaluating Large Language Models (LLMs) and machine learning (ML) models. It provides automated tools to detect vulnerabilities such as biases and hallucinations, supporting a wide variety of model types from tabular data to NLP and LLMs.

Review

Giskard offers a comprehensive solution for teams looking to improve the quality assurance of their ML and AI projects. By combining automated testing with collaborative dashboards and integration capabilities, it aims to simplify the process of identifying issues that might otherwise delay deployment or cause production risks.

Key Features

- Automated detection of model vulnerabilities, including biases, hallucinations, robustness failures, and security weaknesses.

- Compatibility with popular ML frameworks and tools such as Hugging Face, MLflow, Weights & Biases, PyTorch, TensorFlow, and LangChain.

- An enterprise-ready Testing Hub with dashboards and visual debugging for collaborative quality assurance.

- Support for multiple model types including tabular models, NLP, and LLMs, with plans to extend to computer vision, recommender systems, and time series.

- Open-source Python library enabling integration into CI/CD pipelines for continuous monitoring and testing.
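To make the CI/CD point concrete, here is a minimal sketch of the kind of automated robustness check a pipeline might run on every commit. It deliberately uses only the Python standard library and does not call Giskard's API: `toy_sentiment_model`, `perturbations`, and `robustness_failures` are hypothetical names invented for this illustration, and the perturbation set is an assumption rather than anything Giskard prescribes.

```python
from typing import Callable, List, Tuple


def toy_sentiment_model(text: str) -> str:
    """Hypothetical stand-in for a real model: 'good' anywhere means positive."""
    return "positive" if "good" in text.lower() else "negative"


def perturbations(text: str) -> List[str]:
    """Small edits (case, padding, punctuation) that should not change the label."""
    return [text.upper(), text.lower(), f"  {text}  ", text + "!"]


def robustness_failures(
    model: Callable[[str], str], samples: List[str]
) -> List[Tuple[str, str]]:
    """Return (original, variant) pairs where the prediction changed."""
    failures = []
    for sample in samples:
        expected = model(sample)
        for variant in perturbations(sample):
            if model(variant) != expected:
                failures.append((sample, variant))
    return failures


def main() -> int:
    """Run the check and return a process exit code (0 = pass, 1 = fail)."""
    samples = ["This product is good", "Terrible experience"]
    failures = robustness_failures(toy_sentiment_model, samples)
    for original, variant in failures:
        print(f"Prediction changed: {original!r} -> {variant!r}")
    return 1 if failures else 0
```

In a pipeline, a script would pass the return value of `main()` to `sys.exit` so that a non-zero code fails the build. A dedicated testing library would replace the hand-written perturbations with a much broader catalogue of detectors, but the pass/fail gating pattern stays the same.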

Pricing and Value

Giskard is available as an open-source tool, so users can access its core functionality without licensing fees. This makes it an attractive option for organizations that want to implement ML testing without upfront costs. For enterprise users, the Testing Hub application adds features such as collaborative dashboards and enhanced debugging, which may be offered under commercial terms. Together, the free open-source library and the enterprise-grade Hub offer good value for teams that need to maintain model quality and compliance.

Pros

- Open-source framework encourages transparency and community contributions.

- Wide compatibility with common ML ecosystems and frameworks.

- Automated and customizable tests reduce manual effort and speed up model validation.

- Enterprise Hub supports collaboration and compliance at scale.

- Supports a broad range of model types including emerging LLMs.

Cons

- Some advanced features and integrations are only available in the enterprise version.

- Support for computer vision, recommender systems, and time series is still in development.

- Requires familiarity with ML testing concepts to make full use of the customization and risk assessment options.

Overall, Giskard is well suited for data scientists, ML engineers, and quality specialists who need a systematic approach to test and monitor machine learning models. It is particularly beneficial for teams working with LLMs or those seeking to automate vulnerability detection and maintain regulatory compliance. Organizations looking for an open-source tool with enterprise scalability will find it a practical and flexible choice.
