About Hathora

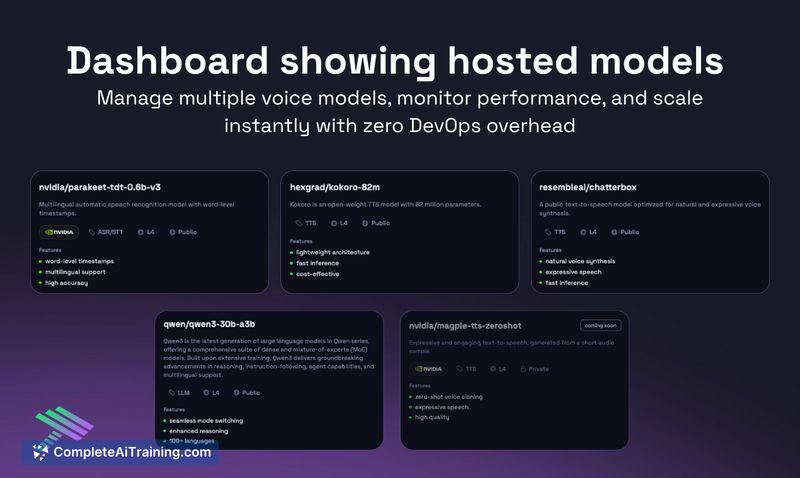

Hathora is a managed platform for deploying, testing, and running voice AI models with minimal DevOps. It provides shared endpoints for quick experimentation and the option to upgrade to dedicated infrastructure for privacy, compliance, or VPC requirements.

Review

Hathora focuses on simplifying the deployment and global delivery of speech models, supporting both open-source and closed models. The service emphasizes low network latency by operating in multiple regions and offers tooling to fine-tune and host custom containers as projects scale.

Key Features

- Instant shared endpoints for quick testing and early development without managing servers.

- Option to upgrade to dedicated infrastructure for privacy, compliance, and VPC needs.

- Global deployment across 14 regions, targeting sub-50 ms network latency for inference.

- Bring-your-own-models or deploy custom Docker containers; includes a model marketplace and fine-tuning on managed infrastructure.

- Flexible GPU billing: API usage can include bundled GPU costs, while dedicated endpoints may bill for GPU consumption separately.

Pricing and Value

Hathora offers a free option and promotional periods (for example, a one-month free trial has been listed). The pricing model distinguishes between shared endpoints, where infrastructure and GPU costs can be bundled into API usage, and dedicated endpoints, where GPU consumption can be billed separately. The provider claims material cost savings over certain closed providers when you host your own models, which may make the platform attractive for teams with sustained inference needs. That said, dedicated infrastructure and heavy GPU usage can still raise overall costs, so teams should estimate usage patterns before committing.
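One way to estimate usage patterns is a simple break-even model between the two billing styles described above. The sketch below assumes shared usage is billed per audio minute with GPU cost bundled in, and dedicated capacity is billed per GPU-hour for an always-on instance. The plan classes, field names, billing units, and every price are hypothetical placeholders, not Hathora's published rates or API.

```python
from dataclasses import dataclass


@dataclass
class SharedPlan:
    """Hypothetical shared-endpoint pricing: GPU cost bundled into a per-minute rate."""
    price_per_audio_minute: float  # placeholder value, not a published Hathora rate


@dataclass
class DedicatedPlan:
    """Hypothetical dedicated-endpoint pricing: GPU time billed separately per hour."""
    gpu_hourly_rate: float  # placeholder value, not a published Hathora rate
    gpus: int = 1
    hours_per_month: float = 730.0  # always-on capacity, about 24 * 365 / 12 hours


def shared_monthly_cost(plan: SharedPlan, audio_minutes: float) -> float:
    # Shared cost scales linearly with traffic.
    return plan.price_per_audio_minute * audio_minutes


def dedicated_monthly_cost(plan: DedicatedPlan) -> float:
    # Dedicated cost is fixed: reserved GPUs are billed whether or not they are busy.
    return plan.gpu_hourly_rate * plan.gpus * plan.hours_per_month


def break_even_minutes(shared: SharedPlan, dedicated: DedicatedPlan) -> float:
    # Monthly audio volume at which both plans cost the same.
    if shared.price_per_audio_minute <= 0:
        raise ValueError("shared price must be positive")
    return dedicated_monthly_cost(dedicated) / shared.price_per_audio_minute


def cheaper_plan(shared: SharedPlan, dedicated: DedicatedPlan, audio_minutes: float) -> str:
    # Ties go to "shared", since it avoids committing to reserved capacity.
    if shared_monthly_cost(shared, audio_minutes) <= dedicated_monthly_cost(dedicated):
        return "shared"
    return "dedicated"


if __name__ == "__main__":
    shared = SharedPlan(price_per_audio_minute=0.01)
    dedicated = DedicatedPlan(gpu_hourly_rate=2.0, gpus=1)
    print(f"Dedicated monthly cost: ${dedicated_monthly_cost(dedicated):,.2f}")
    print(f"Break-even volume: {break_even_minutes(shared, dedicated):,.0f} audio minutes/month")
    for minutes in (10_000, 500_000):
        print(minutes, cheaper_plan(shared, dedicated, minutes))
```

This model deliberately ignores capacity limits: a single dedicated GPU can only serve so many concurrent streams, so a realistic estimate would add a throughput term that increases `gpus` as volume grows.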

Pros

- Quick onboarding via shared endpoints reduces time spent on infrastructure setup.

- Low-latency global footprint suitable for realtime voice applications.

- Supports bring-your-own-models and custom containers, offering flexibility in model choice and tuning.

- Managed fine-tuning and a model marketplace help accelerate development without building orchestration from scratch.

Cons

- Because the product launched recently, its model catalog and community ecosystem are still growing, which may limit out-of-the-box options for now.

- Pricing distinctions between bundled API usage and dedicated GPU billing can be confusing and may require careful cost modeling for large deployments.

- Real-world performance and consistency across regions will depend on specific models and workloads; independent benchmarking is recommended.
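For the independent benchmarking recommended above, a minimal harness can time repeated requests per region and report percentiles. This sketch assumes you wrap each region's request in a zero-argument callable; the region names and the simulated `time.sleep` workloads are hypothetical stand-ins for real calls to your deployment. It measures end-to-end round-trip time, which includes model inference, so results are not directly comparable to a network-only latency target.

```python
import statistics
import time
from typing import Callable, Dict


def measure_latency_ms(call: Callable[[], object], runs: int = 50, warmup: int = 3) -> Dict[str, float]:
    """Time repeated calls and summarize wall-clock latency in milliseconds."""
    if runs < 2:
        raise ValueError("need at least 2 runs to compute percentiles")
    for _ in range(warmup):
        call()  # discard warm-up calls (connection setup, cold caches)
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        call()
        samples.append((time.perf_counter() - start) * 1000.0)
    # "inclusive" keeps the percentile estimates within the observed min/max.
    cuts = statistics.quantiles(samples, n=100, method="inclusive")
    return {"p50": cuts[49], "p95": cuts[94], "max": max(samples)}


def compare_regions(calls: Dict[str, Callable[[], object]], runs: int = 50) -> Dict[str, Dict[str, float]]:
    """Run the same benchmark against each region's client callable."""
    return {region: measure_latency_ms(call, runs=runs) for region, call in calls.items()}


if __name__ == "__main__":
    # Simulated workloads; replace each lambda with a real request to your deployment.
    fake_calls = {
        "region-a": lambda: time.sleep(0.002),
        "region-b": lambda: time.sleep(0.005),
    }
    for region, stats in compare_regions(fake_calls, runs=20).items():
        print(f"{region}: p50={stats['p50']:.1f} ms  p95={stats['p95']:.1f} ms  max={stats['max']:.1f} ms")
```

Reporting p95 alongside p50 matters for realtime voice, since occasional slow responses are what users notice as lag.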

Overall, Hathora is well suited for developers and teams building realtime voice agents who want to minimize DevOps overhead, achieve low-latency inference, and retain flexibility to bring custom or open-source models. Teams that require extensive model choices today or that need fully self-managed infrastructure may want to evaluate the platform alongside hosted and self-hosted alternatives before deciding.
