About Langfuse Prompt Experiments

Langfuse Prompt Experiments is an open-source platform that helps developers and teams refine and optimize their large language model (LLM) applications. It provides tools for testing prompt versions and models across large datasets, so that changes to an LLM application can be judged on measured results.

Review

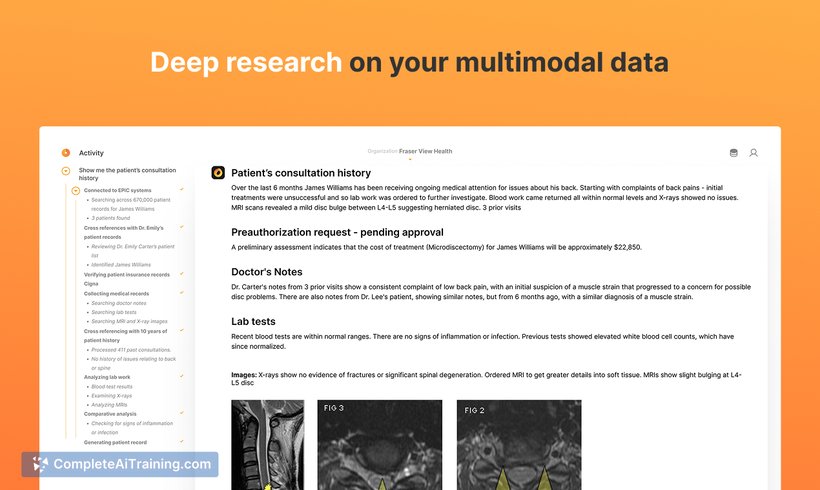

This tool provides an environment for LLM prompt engineering in which users can run experiments on hundreds of examples at once. Its evaluation workflows help identify the best-performing prompts and models, and it integrates with existing LLM infrastructure. The platform emphasizes collaboration and transparency in prompt management and application testing.

Key Features

- Concurrent testing of multiple prompt variations and models on extensive datasets.

- Automated live evaluations using LLM-as-a-judge to assess output quality and detect hallucinations.

- Collaborative prompt management with version control and deployment capabilities.

- Comprehensive dashboards displaying metrics such as cost, latency, and quality for informed decision-making.

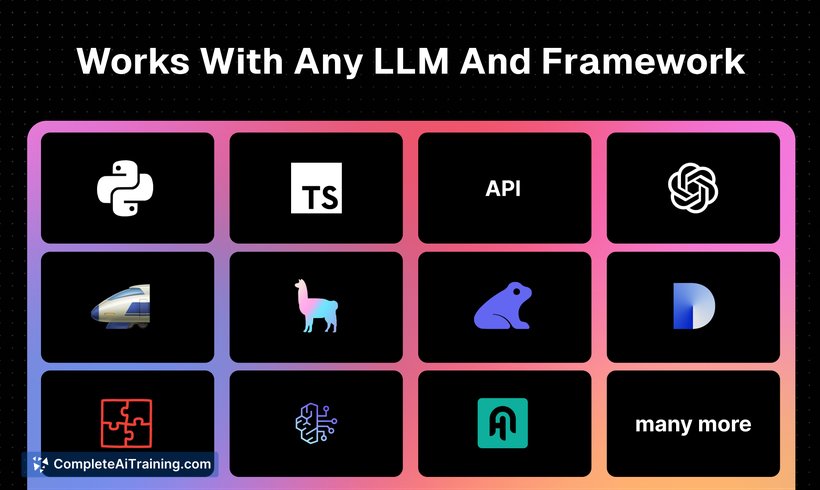

- Support for tracing across multiple programming languages and integration with popular LLM APIs.
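
To make the first two features concrete, here is a minimal sketch of what a prompt experiment does conceptually: run each prompt variant over the same dataset, score every output, and compare the averages. It does not use the Langfuse SDK. `fake_model`, `judge`, `PROMPTS`, and `DATASET` are hypothetical stand-ins invented for illustration, and the exact-match scorer stands in for an LLM-as-a-judge evaluator.

```python
from concurrent.futures import ThreadPoolExecutor
from statistics import mean

# Hypothetical prompt variants under test (not taken from Langfuse).
PROMPTS = {
    "v1-terse": "Answer briefly: {question}",
    "v2-polite": "Please answer the following question: {question}",
}

# Hypothetical two-item dataset with expected answers.
DATASET = [
    {"question": "2+2", "expected": "4"},
    {"question": "capital of France", "expected": "Paris"},
]


def fake_model(prompt: str) -> str:
    """Stand-in for a real LLM call; deterministic so the sketch is runnable."""
    if "2+2" in prompt:
        return "4"
    if "capital of France" in prompt:
        # Pretend the terse variant produces a wrong answer here.
        return "Paris" if prompt.startswith("Please") else "Lyon"
    return ""


def judge(output: str, expected: str) -> float:
    """Stand-in for an LLM-as-a-judge evaluator: 1.0 on a match, else 0.0."""
    return 1.0 if expected in output else 0.0


def evaluate_variant(template: str, dataset: list) -> float:
    """Run one prompt variant over the whole dataset and average its scores."""
    scores = []
    for item in dataset:
        output = fake_model(template.format(question=item["question"]))
        scores.append(judge(output, item["expected"]))
    return mean(scores)


def run_experiment(prompts: dict, dataset: list) -> dict:
    """Evaluate all prompt variants concurrently; return name -> mean score."""
    with ThreadPoolExecutor() as pool:
        futures = {
            name: pool.submit(evaluate_variant, template, dataset)
            for name, template in prompts.items()
        }
        return {name: future.result() for name, future in futures.items()}


if __name__ == "__main__":
    results = run_experiment(PROMPTS, DATASET)
    best = max(results, key=results.get)
    print(results, "best:", best)
```

In a real setup the stand-in model would be replaced by calls to an LLM API and the scorer by a judging prompt, and cost and latency would be recorded alongside the quality score.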

Pricing and Value

Langfuse offers a free account as well as a self-hosting option, so teams can choose between a managed service and running it themselves. Because it is open source, teams can inspect, customize, and extend the platform under the terms of its license. This makes it a cost-effective option for developers who want to improve their LLM applications.

Pros

- Open-source codebase encourages transparency and community-driven enhancements.

- Facilitates rapid iteration with simultaneous prompt and model testing.

- Integrated evaluation tools provide objective quality control.

- Detailed analytics help track performance and optimize resource usage.

- Supports multiple languages and integrates with leading LLM frameworks and APIs.

Cons

- May require technical expertise to set up and self-host effectively.

- User interface could be more intuitive for beginners in prompt engineering.

- Documentation, while comprehensive, might be overwhelming for new users.

Overall, Langfuse Prompt Experiments is well suited to developers and teams who build and refine LLM applications and want to evaluate changes against data. It is especially useful for those who are comfortable with open-source tools and want a collaborative environment for managing prompt iterations and performance metrics.
