About LLM Stats

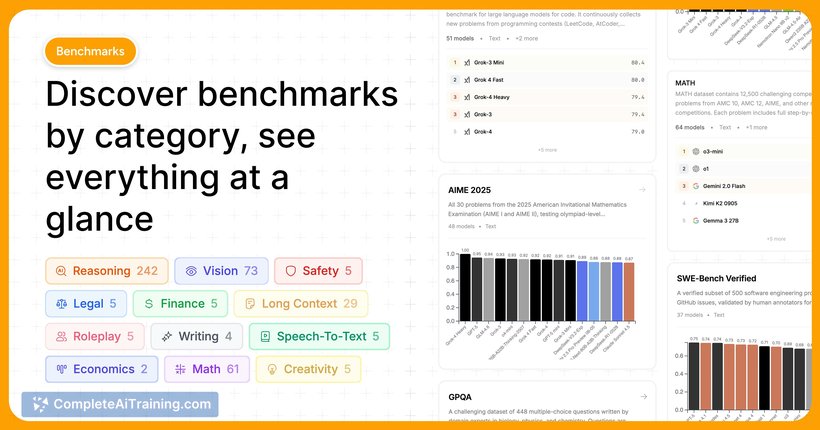

LLM Stats is a comparison tool for large language models that aggregates benchmarks, pricing, and capability information in one place. It provides a playground and an API so users can test prompts and compare results across hundreds of models.

Review

LLM Stats positions itself as a community-first leaderboard for evaluating models by performance, cost, and task-specific scores such as coding or long-context understanding. The interface focuses on side-by-side comparisons and practical metrics such as cost per 1k tokens, which makes it straightforward to judge trade-offs between price and quality.

Key Features

- Model comparison dashboard showing benchmarks, cost, and capability summaries.

- Interactive playground for running prompts against multiple models and observing differences.

- API access to hundreds of models, enabling automated comparisons and integrations.

- Leaderboards and community-driven benchmarking with an emphasis on transparent, reproducible evaluations.

- Cost metrics (e.g., cost per 1k tokens) alongside task-specific scores like coding and long-context performance.
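
To make the cost-versus-score trade-off concrete, here is a minimal Python sketch of how a per-1k-token price can be turned into a per-request cost and a rough "score per dollar" ranking. It is not LLM Stats code and does not call its API: the `ModelQuote` type, the model names, the prices, the scores, and the split into separate input and output prices are all assumptions made up for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelQuote:
    """Hypothetical pricing and score for one model (illustrative values only)."""
    name: str
    input_usd_per_1k: float   # assumed price per 1,000 input tokens
    output_usd_per_1k: float  # assumed price per 1,000 output tokens
    benchmark_score: float    # any task score, here on a 0-100 scale


def request_cost(quote: ModelQuote, input_tokens: int, output_tokens: int) -> float:
    """Return the USD cost of one request when prices are quoted per 1,000 tokens."""
    input_cost = (input_tokens / 1000) * quote.input_usd_per_1k
    output_cost = (output_tokens / 1000) * quote.output_usd_per_1k
    return input_cost + output_cost


def rank_by_score_per_dollar(quotes, input_tokens: int, output_tokens: int):
    """Sort models by benchmark points per dollar for a given request size, best first."""
    return sorted(
        quotes,
        key=lambda q: q.benchmark_score / request_cost(q, input_tokens, output_tokens),
        reverse=True,
    )


# Made-up models and numbers, not taken from any real leaderboard.
quotes = [
    ModelQuote("model-a", input_usd_per_1k=0.5, output_usd_per_1k=1.5, benchmark_score=80.0),
    ModelQuote("model-b", input_usd_per_1k=0.1, output_usd_per_1k=0.3, benchmark_score=60.0),
]

for quote in rank_by_score_per_dollar(quotes, input_tokens=2000, output_tokens=500):
    cost = request_cost(quote, 2000, 500)
    print(f"{quote.name}: ${cost:.2f} per request, score {quote.benchmark_score}")
```

With these invented numbers, the cheaper model ranks first on score per dollar even though its raw score is lower, which is the kind of trade-off a combined cost and benchmark view is meant to surface. A single ratio is a crude summary; a real selection would also weigh minimum quality thresholds and the specific task scores that matter for the workload.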

Pricing and Value

The listing indicates a free tier at launch, so developers and researchers can compare models without upfront cost. Pairing benchmark data with cost metrics gives teams a clear basis for weighing performance against expense. Organizations that need high-volume or private benchmarking will likely need paid tiers or enterprise plans, where available, for higher quotas and private environments.

Pros

- Consolidates benchmarks and pricing into a single place, reducing time spent researching models.

- Playground plus API makes it easy to test custom prompts and automate comparisons.

- Access to many models at once enables broad apples-to-apples evaluations.

- Community-driven approach and a stated goal of reproducible benchmarks improve transparency.

- Practical cost metrics (like cost per 1k tokens) help with real-world budgeting decisions.

Cons

- Keeping benchmark data fresh is resource-intensive, so update cadence may vary, and stale scores can skew model selection.

- Some advanced visualizations and UX details are still maturing.

- Users with strict privacy or heavy custom evaluation needs may need dedicated, private infrastructure beyond what a public leaderboard offers.

LLM Stats is well suited for developers, product teams, and researchers who need a quick, evidence-based way to compare models by cost and capability. It is most useful for people making selection decisions or running lightweight experiments; teams that require frequent, large-scale private evaluations should plan for additional tooling or enterprise options.
