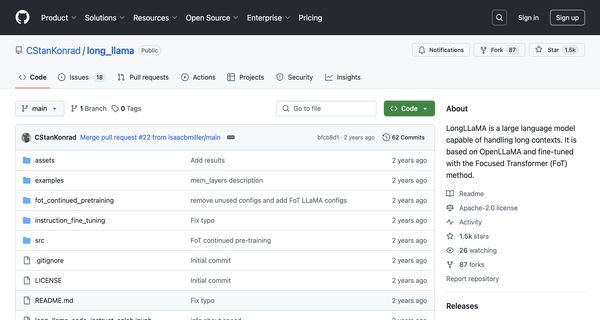

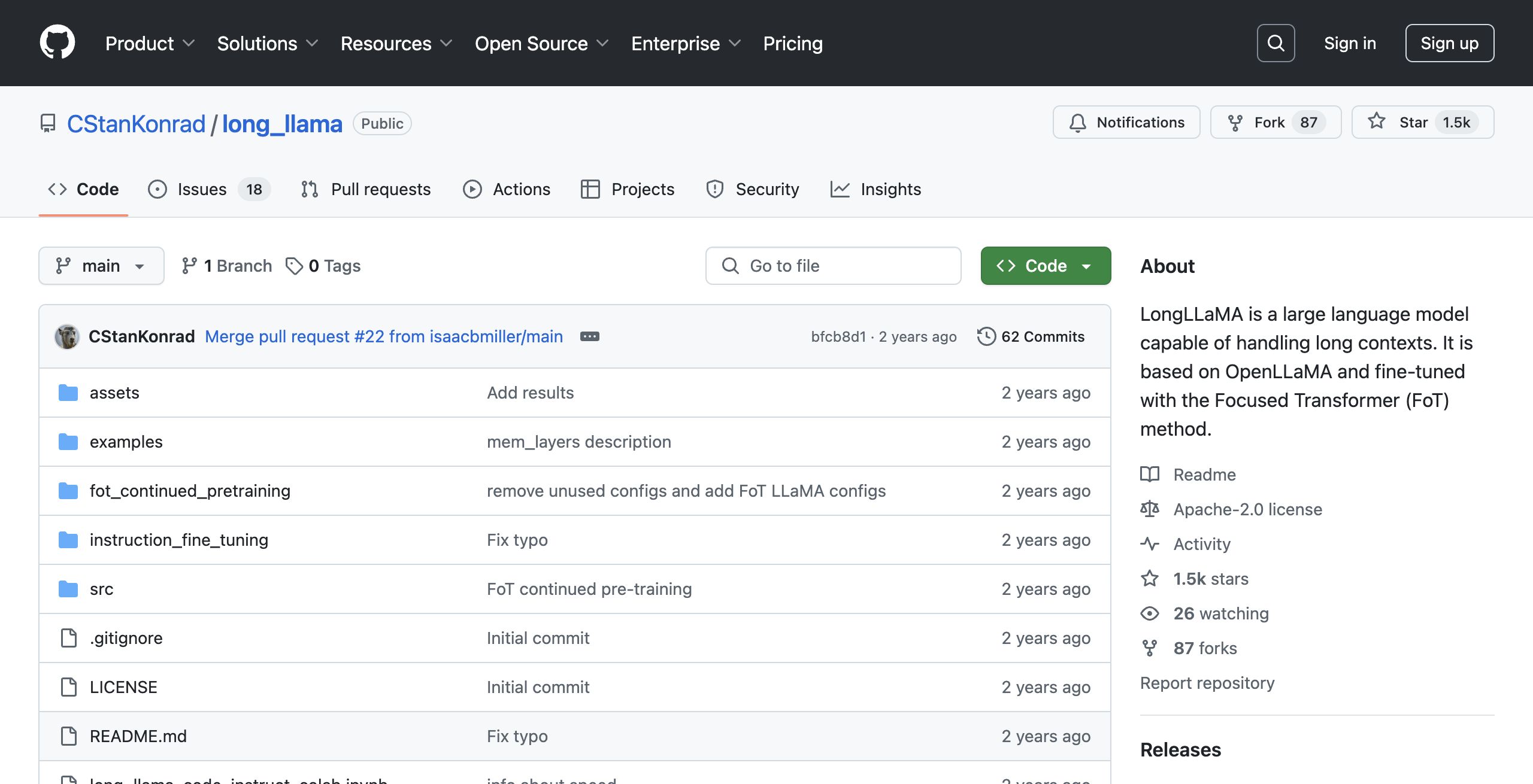

About: LongLLaMa

LongLLaMA is a sophisticated large language model specifically engineered to manage extensive text contexts, boasting the capability to process up to 256,000 tokens. Built on the OpenLLaMA framework, this model has been fine-tuned utilizing the innovative Focused Transformer (FoT) technique, ensuring enhanced performance in understanding and generating long-form content. The repository features a compact 3B base variant of LongLLaMA, released under the Apache 2.0 license, facilitating integration into existing applications. It also includes code for instruction tuning and continued pretraining using FoT, allowing developers to optimize the model for their specific needs. LongLLaMA excels in scenarios that require deep context comprehension, making it ideal for applications such as document summarization, complex question answering, and conversational AI. Its unique ability to handle contexts that surpass its training data sets it apart, providing users with a powerful tool for natural language processing tasks that demand extensive understanding and nuanced responses.

Review: LongLLaMa

Introduction

LongLLaMA is a large language model specially designed to handle extensive text contexts, boasting the ability to process up to 256,000 tokens. Built upon the OpenLLaMA architecture and fine-tuned using the innovative Focused Transformer (FoT) method, LongLLaMA is primarily aimed at researchers, developers, and enterprises that require advanced context handling in natural language processing tasks. This review examines the strengths and intricacies of LongLLaMA and assesses its relevance in the growing field of context-extensible AI models.

Key Features

LongLLaMA stands out in the competitive landscape for its ability to manage exceptionally long input sequences. Key functionalities include:

- Extensive Context Handling: Capable of processing lengthy contexts up to 256,000 tokens, making it ideal for tasks such as passkey retrieval, complex question answering, and document analysis.

- Focused Transformer (FoT) Method: Utilizes a novel training technique where a subset of attention layers accesses a memory cache of (key, value) pairs. This allows the model to extend its effective context length beyond the typical training limits.

- Flexible Integration: Provides inference code compatible with the Hugging Face API, enabling an easy drop-in replacement for existing LLaMA implementations. This makes transitioning to LongLLaMA straightforward for current users.

- Versatile Fine-Tuning Options: Comes with additional code for instruction tuning and continued pretraining, offering customizable training setups using both PyTorch and JAX frameworks.

- Open Source Licensing: The base model variant is available under the Apache 2.0 license, which grants significant freedom for adaptation and distribution in existing projects.

Pros and Cons

- Pros:

- Exceptional context length handling which broadens the scope of applicable NLP tasks.

- Innovative use of the FoT method, which improves the model's capacity to differentiate semantically diverse elements.

- Easy integration with Hugging Face’s ecosystem, ensuring a smooth adoption process.

- Open source availability under a permissive license, fostering community-based improvements and research.

- Cons:

- The advanced features may require significant computational resources, potentially limiting accessibility for small-scale users.

- While the model offers impressive long context understanding, performance on standard short-context tasks remains similar to baseline implementations.

Final Verdict

LongLLaMA is a robust solution for organizations and researchers who need to process extremely long text contexts, making it highly suitable for specialized applications such as document analysis, extensive conversation tracking, and other tasks where context length is critical. While users with limited infrastructure might find the computational requirements challenging, those seeking state-of-the-art long context understanding will greatly benefit from its innovative FoT approach and seamless integration with established frameworks like Hugging Face.

Overall, LongLLaMA is an impressive advancement in language models, poised to offer significant improvements for applications demanding deep and extended contextual comprehension.

Open 'LongLLaMa' Website

Your membership also unlocks: