About MiMo

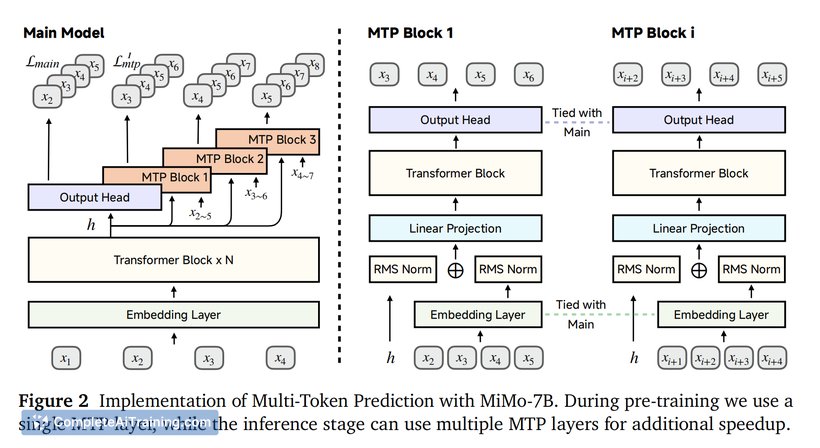

MiMo is an open-source series of large language models developed by Xiaomi, with a focus on reasoning. The models are pre-trained on reasoning-dense data and then post-trained with supervised fine-tuning and reinforcement learning to improve performance on math and coding tasks.

Review

MiMo takes an interesting approach: it prioritizes reasoning during pre-training rather than relying solely on post-training adjustments. On reported math and coding benchmarks, the resulting models are competitive with established models such as o1-mini.

Key Features

- Open-source under the Apache 2.0 license, allowing broad accessibility and community contributions.

- Multiple model versions available, including Base, Supervised Fine-Tuned (SFT), and Reinforcement Learning (RL) tuned variants.

- Pre-training optimized on reasoning-dense datasets to strengthen logical and analytical abilities.

- Competitive performance on math and coding benchmarks, comparable to established models such as OpenAI's o1-mini.

- Available on Hugging Face, facilitating easy integration and experimentation.
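As a rough illustration of what experimenting with one of the variants from Hugging Face might look like, here is a minimal sketch using the `transformers` library. The organization name `XiaomiMiMo` and the repository names are assumptions, not details taken from this review, so check the model cards before running it. The `trust_remote_code=True` flag is also an assumption that the repositories ship custom model code.

```python
# Minimal sketch: choose a MiMo variant and generate text with transformers.
# ASSUMPTION: the organization and repository names below are illustrative
# and must be verified on the Hugging Face Hub before use.
ASSUMED_ORG = "XiaomiMiMo"
ASSUMED_VARIANTS = {
    "base": "MiMo-7B-Base",  # pre-trained only
    "sft": "MiMo-7B-SFT",    # supervised fine-tuned
    "rl": "MiMo-7B-RL",      # reinforcement-learning tuned
}


def repo_id(variant: str) -> str:
    """Return the assumed Hub repository ID for a variant name."""
    key = variant.lower()
    if key not in ASSUMED_VARIANTS:
        raise ValueError(f"unknown variant: {variant!r}")
    return f"{ASSUMED_ORG}/{ASSUMED_VARIANTS[key]}"


def generate(prompt: str, variant: str = "rl", max_new_tokens: int = 256) -> str:
    """Download the chosen variant and return the decoded generation."""
    # Imported lazily so repo_id() works without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    rid = repo_id(variant)
    # ASSUMPTION: the repository may need custom code, hence trust_remote_code.
    tokenizer = AutoTokenizer.from_pretrained(rid, trust_remote_code=True)
    model = AutoModelForCausalLM.from_pretrained(rid, trust_remote_code=True)
    inputs = tokenizer(prompt, return_tensors="pt")
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)
```

Calling `generate("Prove that the sum of two even integers is even.")` would download the weights on first use, so expect a large download and substantial memory requirements.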

Pricing and Value

MiMo is free to use as an open-source project, so developers and researchers can work with advanced reasoning models without licensing costs. The Base, SFT, and RL variants add flexibility across use cases at no additional expense.

Pros

- Free and open-source, encouraging transparency and modification.

- Strong emphasis on reasoning capabilities, improving performance on complex tasks.

- Multiple model versions catering to different needs and preferences.

- Good benchmark results in math and code-related tasks.

- Hosted on Hugging Face, a popular platform with an active community, for easy access.

Cons

- As a relatively new release, it may have limited documentation and community resources compared to more established models.

- Performance outside math and coding tasks is not well documented.

- Users may require some technical expertise to deploy and fine-tune the models effectively.

Overall, MiMo is well-suited for developers, researchers, and enthusiasts interested in exploring language models with a focus on reasoning and analytical tasks. Its open-source nature and promising results make it a strong candidate for projects involving mathematical problem solving or code generation, particularly for those looking for a no-cost, customizable solution.
