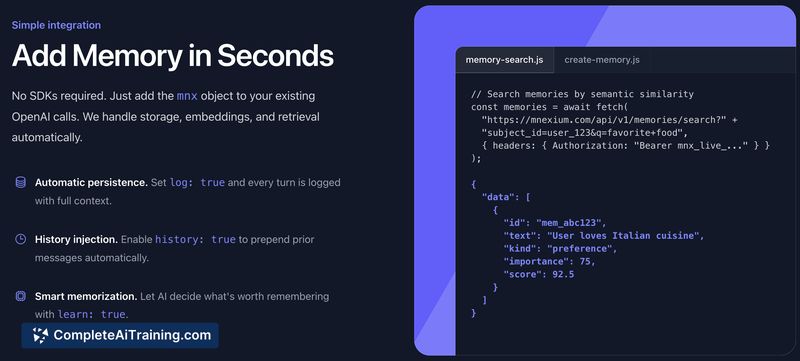

About Mnexium AI

Mnexium AI is an API service that provides persistent, structured memory for language models and AI agents. It stores, scores, and recalls long-term context so applications can include chat history and semantic memories without managing vector databases or retrieval pipelines.

Review

Mnexium AI aims to simplify memory management for AI applications by offering a single mnx object that developers attach to requests; the service then returns relevant chat history and semantic memories automatically. The approach reduces boilerplate infrastructure and speeds up development of agents, copilots, and AI-powered products.

Key Features

- Persistent memory storage with scoring to prioritize what gets recalled.

- Semantic search and recall that returns the most relevant memories for a new message.

- Single-object API integration (mnx) - no separate vector DBs, pipelines, or retrieval logic required.

- Request-level controls, such as recall:true to inject memories and history:true/false to include or omit conversation history.

- Developer resources including a getting-started guide, API docs, and example projects (e.g., a chat clone).
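
To make the request-level controls above concrete, here is a minimal sketch of how a request body carrying an mnx object might be assembled. Only the mnx, recall, and history names come from this review; the overall payload shape, the model name, and the subject_id field are assumptions for illustration, so check the official API docs for the real schema.

```python
from typing import Any


def build_request(
    user_message: str,
    *,
    recall: bool = True,
    history: bool = False,
    subject_id: str = "user-123",  # hypothetical identifier field, not from the review
) -> dict[str, Any]:
    """Build a chat-style request body with an `mnx` control object.

    The payload layout is an assumed, chat-completions-like shape.
    """
    return {
        "model": "example-model",  # placeholder model name
        "messages": [{"role": "user", "content": user_message}],
        "mnx": {
            "subject_id": subject_id,  # assumed: which user's memories to use
            "recall": recall,          # from the review: inject relevant memories
            "history": history,        # from the review: include chat history
        },
    }


payload = build_request("What did I say about my travel plans?")
print(payload["mnx"])
```

Keeping the flags as per-call arguments mirrors the per-request control the review describes: a lookup-style question can set recall on, while a stateless utility call can leave both off.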

Pricing and Value

A free option is available at launch. The primary value is reduced development time and complexity, since there is no external memory infrastructure to build and maintain. That said, retrieved memories are injected into the LLM context and therefore consume the model's context window and token budget, which can affect usage costs depending on your provider and workload.
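
The token-budget point can be estimated roughly before committing to a workload. The sketch below is not part of Mnexium AI: it assumes a crude heuristic of about four characters per token and a hypothetical per-1,000-token input price, both of which vary by model and provider.

```python
def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Very rough token estimate; real tokenizers differ by model and language."""
    if not text:
        return 0
    return max(1, round(len(text) / chars_per_token))


def injected_memory_cost(
    memories: list[str],
    price_per_1k_input_tokens: float,  # hypothetical price; use your provider's rate
) -> tuple[int, float]:
    """Return (estimated extra tokens, estimated extra cost) for one request."""
    tokens = sum(estimate_tokens(m) for m in memories)
    return tokens, tokens / 1000 * price_per_1k_input_tokens


# Example: two recalled memories added to a single request.
extra_tokens, extra_cost = injected_memory_cost(
    ["User prefers window seats on long flights.", "User is vegetarian."],
    price_per_1k_input_tokens=0.01,
)
print(extra_tokens, extra_cost)
```

Multiplying the per-request figure by expected request volume gives a first-order sense of how much recalled context adds to the bill; a provider's own tokenizer would give more accurate counts.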

Pros

- Very simple integration model that abstracts away vector DBs and retrieval pipelines.

- Semantic recall improves response relevance by surfacing important past information.

- Flexible controls let you choose when to include memories or full chat history per request.

- Documentation and example code lower the barrier for getting started quickly.

Cons

- Injected memories use the LLM context window, which can increase token usage and costs for some workflows.

- The integration ecosystem is still growing; some platforms, such as certain workflow tools, may not yet have native connectors.

- As an early-stage product, advanced features like on-request summarization or expanded integrations may be limited or planned for future releases.

Overall, Mnexium AI is a practical option for developers building agents, copilots, or any AI service that benefits from durable user memory and semantic recall. It is best suited for teams that want to reduce infrastructure overhead and iterate quickly, while being mindful of how injected memories affect token usage and data handling policies.
