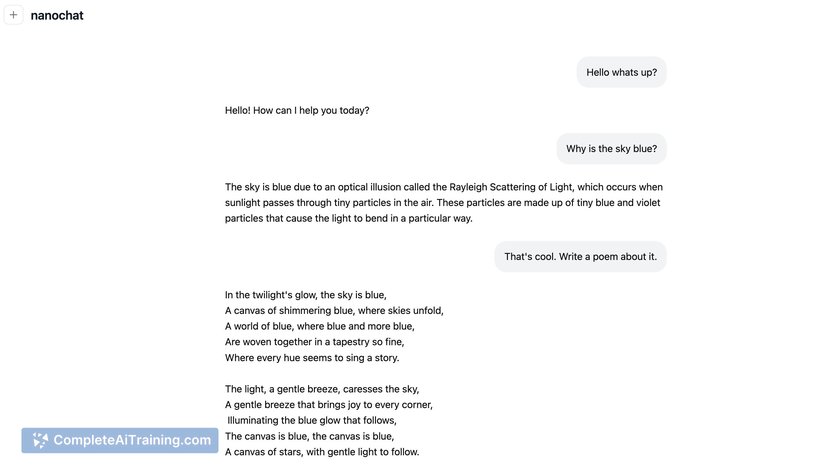

About nanochat

nanochat is a full-stack implementation of a ChatGPT-like LLM, presented in a compact, dependency-light codebase. It provides the components needed to run tokenization, pretraining, finetuning, evaluation, inference, and a web UI on a single multi-GPU node.

Review

nanochat stands out for packing an end-to-end LLM pipeline into a small, readable codebase that favors learning and experimentation. The project is openly available and focuses on making the internals of large-model workflows accessible to developers who want to build and modify components directly.

Key Features

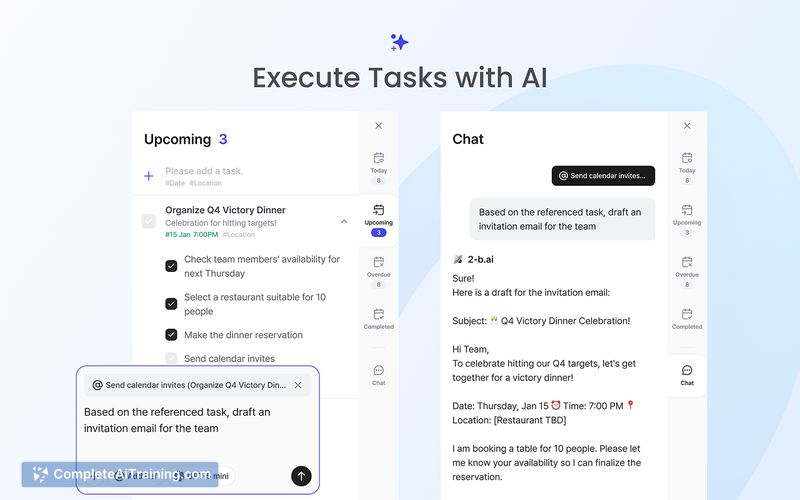

- End-to-end pipeline: tokenization, pretraining, finetuning, evaluation, inference, and a web UI in one project.

- Compact, hackable codebase implemented in a few languages (Python, Rust, HTML, Shell), making it easy to read and modify.

- Single-node execution support targeting an 8× H100 GPU setup for complete workflows.

- Dependency-light and open source, suitable for educational use and rapid experimentation.

Pricing and Value

The codebase itself is available for free as an open source project. The primary costs come from compute: the project is built to run the full pipeline on a single 8× H100 node, and practical experiments such as pretraining or large-scale finetuning require GPU time and associated infrastructure. For developers with access to suitable hardware or cloud credits, the project offers strong value for learning, prototyping, and evaluating model ideas without relying on large external frameworks or managed services.
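Because compute is the main expense, a rough budget is simple arithmetic: wall-clock hours times the per-GPU hourly rate times the number of GPUs. The sketch below is only an illustration of that calculation. The function name `node_cost_usd` is hypothetical, and the hours and hourly rate in the example call are placeholder values, not figures from the project or any cloud provider; substitute your own run time and your provider's current pricing.

```python
def node_cost_usd(hours: float, usd_per_gpu_hour: float, gpus: int = 8) -> float:
    """Estimate the rental cost of one multi-GPU node.

    hours            -- wall-clock duration of the run
    usd_per_gpu_hour -- price per GPU per hour (ASSUMPTION: supplied by the reader)
    gpus             -- GPUs in the node; defaults to 8 to match an 8x H100 setup
    """
    if hours < 0 or usd_per_gpu_hour < 0 or gpus <= 0:
        raise ValueError("hours and rate must be non-negative, gpus must be positive")
    return hours * usd_per_gpu_hour * gpus


if __name__ == "__main__":
    # Placeholder inputs chosen only to show the arithmetic.
    estimate = node_cost_usd(hours=10.0, usd_per_gpu_hour=2.0)
    print(f"Estimated cost: ${estimate:.2f}")
```

An estimate like this covers GPU rental only; storage, data transfer, and idle time while debugging come on top of it.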

Pros

- Very concise and readable implementation that makes the full LLM workflow transparent.

- Complete pipeline in one place reduces the friction of stitching tools together.

- Open source and dependency-light, which lowers barriers for modification and study.

- Engineered to run on a single multi-GPU node, enabling end-to-end experiments without massive clusters.

Cons

- Hardware requirements for realistic training or finetuning are significant and can be costly for individuals.

- Not a hosted, turn-key service; it requires technical expertise to deploy and operate.

- Focused on education and developer use; production readiness and scaling to many users will need additional tooling.

Overall, nanochat is best suited for developers, researchers, and students who want a compact, hands-on implementation of an LLM pipeline to study, modify, and prototype with. It is less appropriate for users seeking a managed, hosted chat service or for those without access to high-end GPU resources.
