About Nexa SDK

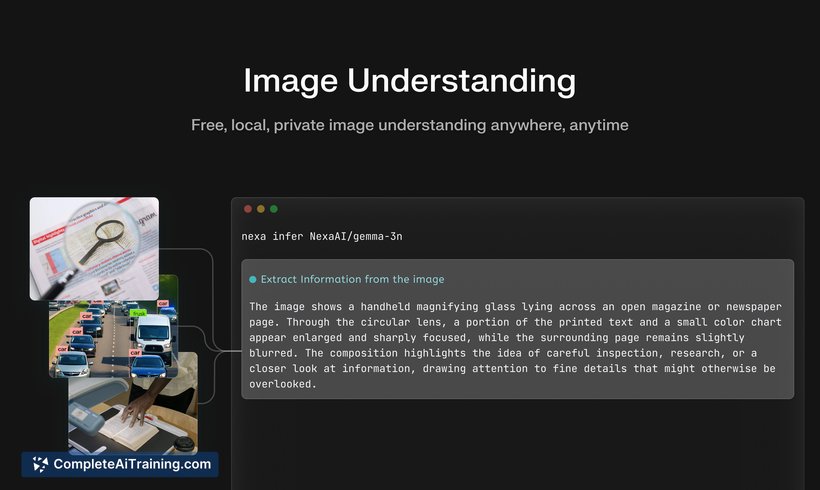

Nexa SDK is an on-device AI development toolkit for running multimodal models (text, vision, and audio) locally on CPUs, GPUs, and NPUs. It provides a unified runtime and support for several model formats, with an API intended to simplify integration into existing applications.

Review

Nexa SDK aims to make local model inference more accessible to developers by consolidating backend options and model formats under a single toolkit. The project emphasizes privacy, lower request latency, and flexibility for building applications that do not rely on remote inference endpoints.

Key Features

- Unified runtime supporting CPU, GPU, and NPU backends for local acceleration.

- Multimodal capabilities covering text, vision, and audio workflows.

- Compatibility with multiple model formats and a cloud-style API for easier integration.

- Streaming and function-calling support in the API for interactive or production scenarios.

- Open-source orientation with community-driven development and samples for developers.
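
To make the "cloud-style API" and streaming points above more concrete, here is a minimal sketch of what a client for such an API might look like. It assumes an OpenAI-style chat-completions convention (a `messages` list, a `stream` flag, and `data:` lines carrying JSON chunks); that convention, the field names, and the `local-model` identifier are illustrative assumptions, not Nexa SDK's documented interface.

```python
import json
from typing import Iterable, Iterator


def build_chat_request(prompt: str, model: str = "local-model", stream: bool = True) -> dict:
    """Build a cloud-style chat request body.

    Field names follow the common chat-completions convention and are an
    assumption here, not taken from Nexa SDK documentation.
    """
    return {
        "model": model,  # hypothetical model identifier
        "messages": [{"role": "user", "content": prompt}],
        "stream": stream,
    }


def iter_stream_text(lines: Iterable[str]) -> Iterator[str]:
    """Yield text fragments from server-sent-event style lines.

    Assumes each event is a line of the form 'data: {json}' and that the
    stream ends with 'data: [DONE]'.
    """
    for line in lines:
        line = line.strip()
        if not line.startswith("data:"):
            continue  # skip blank keep-alive lines and other fields
        payload = line[len("data:"):].strip()
        if payload == "[DONE]":
            break  # end-of-stream marker
        chunk = json.loads(payload)
        for choice in chunk.get("choices", []):
            text = choice.get("delta", {}).get("content")
            if text:
                yield text
```

In practice, the request body would be posted to whatever local endpoint the runtime exposes, and the response lines would be fed to `iter_stream_text` as they arrive so the application can display partial output immediately.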

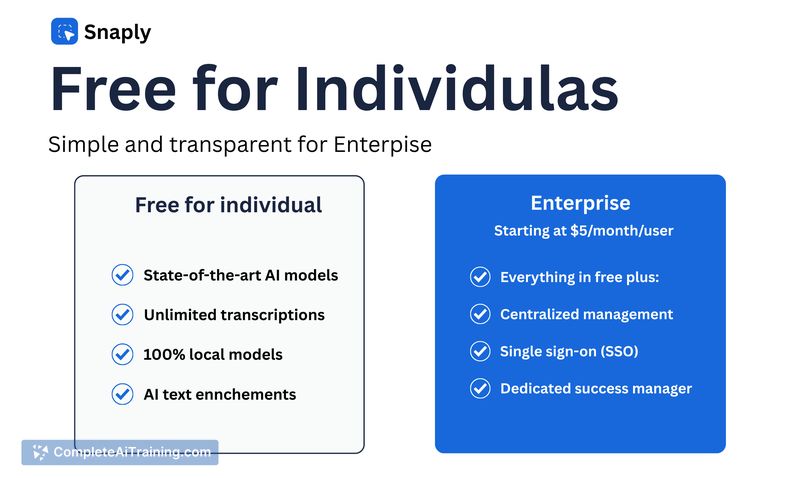

Pricing and Value

Nexa SDK has a free, open-source offering that lets developers experiment and deploy locally without upfront cost. Teams that need commercial support, scaling assistance, or enterprise features may find paid options or support plans through the vendor. The main value lies in reduced cloud usage fees, improved data privacy from keeping inference on-device, and lower end-to-end latency for user-facing features.

Pros

- Enables on-device inference for improved privacy and reduced operational cost compared with constant cloud calls.

- Broad backend support helps leverage available hardware acceleration on many devices.

- Multimodal support lets teams build combined text, vision, and audio features from one toolkit.

- Open-source nature encourages community contributions and transparency about behavior and performance.

- API patterns compatible with existing cloud-style integrations lower the integration barrier.

Cons

- Hardware-specific optimizations and NPU support can vary by platform, which may require extra engineering effort.

- Mobile native SDKs and some platform integrations are still maturing, so demos or examples may be missing for some platforms.

- Running large models locally may require model conversion or quantization steps that add complexity.

Overall, Nexa SDK is a strong fit for developers and teams who need offline or private inference, want to reduce recurring cloud costs, or need tighter control over performance on endpoint hardware. Teams seeking a fully managed cloud service or those without access to suitable local hardware may prefer a hosted alternative.
