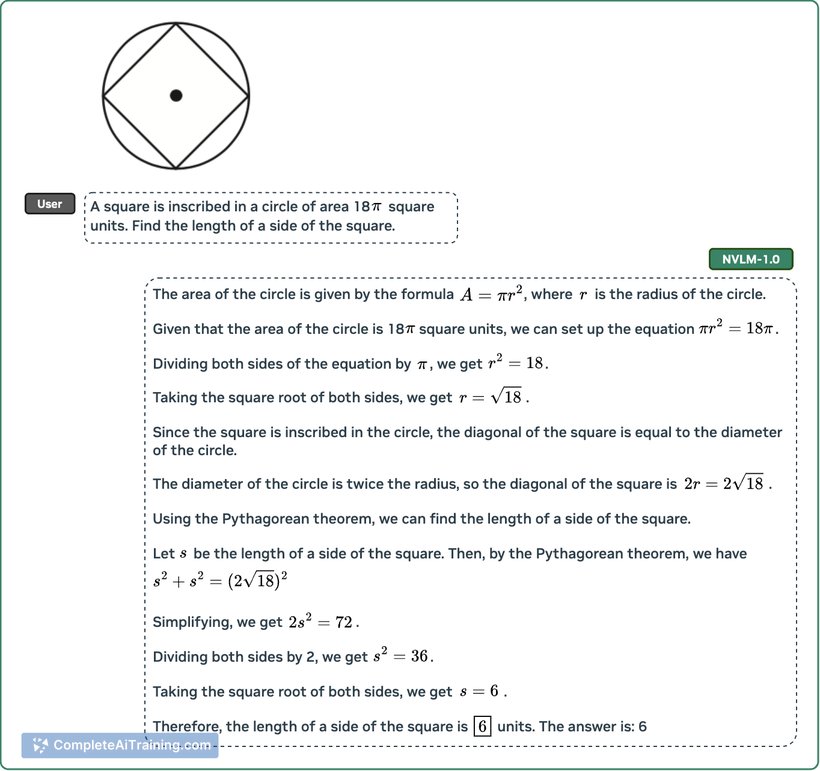

About NVLM 1.0

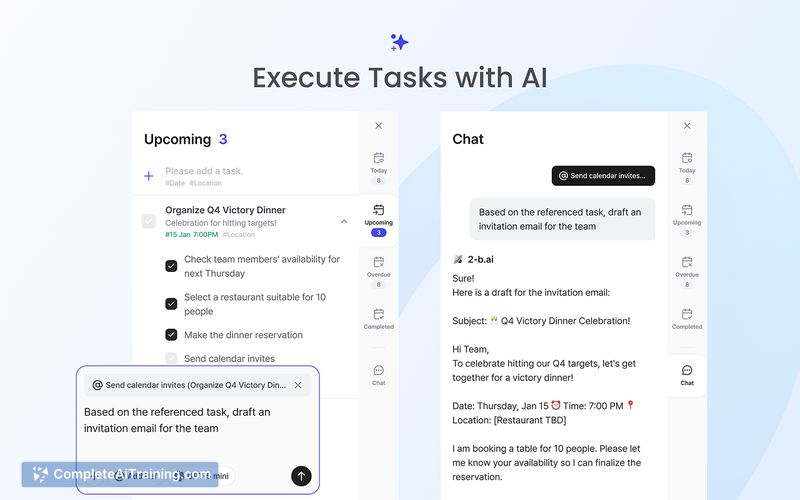

NVLM 1.0 is a family of open multimodal large language models developed by NVIDIA that combine vision and language capabilities. It achieves competitive results on a range of vision-language benchmarks, placing it alongside leading proprietary and open-access models in this space.

Review

NVLM 1.0 makes a significant contribution to the AI community by providing an accessible, high-performing multimodal model. Because it is openly released, developers and researchers can explore advanced vision-language applications without the restrictions often associated with proprietary solutions. Its strong results across multiple benchmarks make it a noteworthy option for anyone interested in multimodal AI.

Key Features

- Multimodal capabilities combining vision and language understanding in a single model.

- State-of-the-art results on vision-language tasks, rivaling leading models such as GPT-4o and Llama 3-V.

- Open-source release enabling broad access and community-driven improvements.

- Developed by NVIDIA, drawing on its expertise in AI hardware and software integration.

- Supports a variety of applications requiring the integration of visual context with natural language processing.

Pricing and Value

NVLM 1.0 is available as a free, openly released model, so developers, researchers, and organizations can use it without paying license fees. This lowers the barrier to entry for advanced multimodal AI. Users can deploy and customize the model for their specific needs without upfront costs, which makes it a cost-effective alternative to proprietary models.

Pros

- Open-source licensing encourages transparency and community collaboration.

- Competitive performance on vision-language benchmarks, rivaling proprietary models.

- Versatile multimodal design suitable for diverse AI applications.

- Backed by NVIDIA’s expertise and infrastructure.

- No licensing fees, enabling experimentation and deployment at minimal cost.

Cons

- Being a recent release, community support and ecosystem tools are still growing.

- Performance may vary depending on specific use cases and hardware configurations.

- Requires technical knowledge to deploy and fine-tune effectively.

NVLM 1.0 is ideal for developers and researchers who want an advanced, openly available multimodal model, especially for tasks that combine vision and language. It suits projects that need flexibility without license fees, and users who have the technical capacity to deploy and customize open models. Overall, it is a promising option for advancing vision-language AI research and applications.
