About ReliAPI

ReliAPI is a reliability layer for making LLM and HTTP API calls more predictable and cost-efficient. It sits between your app and providers like OpenAI, Anthropic, and Mistral and handles common failure modes such as duplicate charges, transient errors, and unexpectedly expensive requests.

Review

ReliAPI targets a frequent pain point for teams building on LLMs: wasted spend and brittle retry behavior from direct API use. It provides out-of-the-box features (caching, idempotency, budget caps, retries, and cost tracking) that remove the need to rebuild the same reliability logic in each project.

Key Features

- Smart caching that can cut repeated-call costs by an estimated 50-80% for identical requests.

- Idempotency protection to prevent duplicate charges when requests are retried or users submit twice.

- Budget caps that reject expensive requests before they execute to avoid surprise bills.

- Automatic retries with exponential backoff and a circuit breaker to reduce manual retry logic.

- Real-time cost tracking and LLM-aware handling (token costs, streaming, provider rate limits) across OpenAI, Anthropic, Mistral, and any HTTP API.
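To make the mechanisms in this list concrete, here is a minimal client-side sketch of three of them: a budget cap, a cache keyed on the request content, and retries with exponential backoff. It is an illustration only, not ReliAPI's implementation or SDK. The names `ReliabilitySketch`, `BudgetExceeded`, `send`, and `estimated_cost_usd` are hypothetical, the cache is a plain in-memory dictionary, and the circuit breaker is left out to keep the example short.

```python
import hashlib
import json
import time


class BudgetExceeded(Exception):
    """Raised when a request's estimated cost is above the configured cap."""


class ReliabilitySketch:
    """Hypothetical wrapper for illustration; not ReliAPI's actual API."""

    def __init__(self, send, max_cost_usd=0.10, max_retries=3,
                 base_delay=0.5, sleep=time.sleep):
        self.send = send                  # callable that performs the real request
        self.max_cost_usd = max_cost_usd  # budget cap per request
        self.max_retries = max_retries    # retries after the first attempt
        self.base_delay = base_delay      # first backoff delay in seconds
        self.sleep = sleep                # injectable so tests need not wait
        self.cache = {}                   # request hash -> stored response

    def _key(self, payload):
        # Canonical JSON so that identical requests hash to the same key.
        canonical = json.dumps(payload, sort_keys=True)
        return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

    def call(self, payload, estimated_cost_usd):
        # Budget cap: reject before anything is sent.
        if estimated_cost_usd > self.max_cost_usd:
            raise BudgetExceeded(
                f"estimated {estimated_cost_usd} USD exceeds cap {self.max_cost_usd} USD"
            )

        # Cache: an identical request that already completed is not sent again.
        key = self._key(payload)
        if key in self.cache:
            return self.cache[key]

        # Retries with exponential backoff: base_delay, 2x, 4x, ...
        for attempt in range(self.max_retries + 1):
            try:
                result = self.send(payload)
            except ConnectionError:
                if attempt == self.max_retries:
                    raise
                self.sleep(self.base_delay * 2 ** attempt)
            else:
                self.cache[key] = result
                return result
```

In this sketch the content-keyed cache also absorbs a repeated submission of a request that has already completed. A fuller idempotency scheme would typically use a client-supplied key and also handle requests that are still in flight, which this example does not attempt.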

Pricing and Value

Public materials highlight free options and a try-before-you-commit path: you can trial the service on a portion of your requests, and a 100% refund guarantee covers that trial window. Paid tiers are not listed in detail, so teams should estimate ROI from their expected request volume and the savings they expect from caching and idempotency. The primary value comes from preventing wasted spend and reducing the engineering time spent building reliability features; for many users those savings are likely to offset subscription costs quickly.
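A rough way to frame that ROI estimate is sketched below. Every input is an assumption you would replace with your own figures: the function names are hypothetical, the subscription price is a placeholder because paid tiers are not published in detail, and the cache saving rate should come from your own measurements rather than the 50-80% estimate quoted for identical requests.

```python
def estimated_monthly_savings(monthly_spend_usd, repeat_fraction, cache_saving_rate):
    """Savings from caching: spend on repeated calls times the share the cache removes.

    monthly_spend_usd: current monthly provider spend (your own figure)
    repeat_fraction:   share of spend that goes to identical, repeated requests
    cache_saving_rate: share of that repeated spend the cache is assumed to remove
    """
    return monthly_spend_usd * repeat_fraction * cache_saving_rate


def net_monthly_value(monthly_spend_usd, repeat_fraction, cache_saving_rate,
                      subscription_usd):
    """Estimated savings minus a placeholder subscription cost."""
    savings = estimated_monthly_savings(
        monthly_spend_usd, repeat_fraction, cache_saving_rate
    )
    return savings - subscription_usd


if __name__ == "__main__":
    # Made-up inputs: 2,000 USD spend, 30% repeated, 50% saved, 100 USD subscription.
    print(net_monthly_value(2000, 0.30, 0.50, 100))
```

With these made-up inputs the caching savings come to about 300 USD per month before the placeholder subscription cost is subtracted. The estimate ignores savings from avoided duplicate charges and from engineering time, so it is a conservative starting point.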

Pros

- Meaningful cost savings for repeated queries via caching.

- Prevents duplicate billing with built-in idempotency checks.

- Saves developer time by providing retries, backoff, and circuit-breaking out of the box.

- LLM-aware features and real-time cost visibility make budgeting easier.

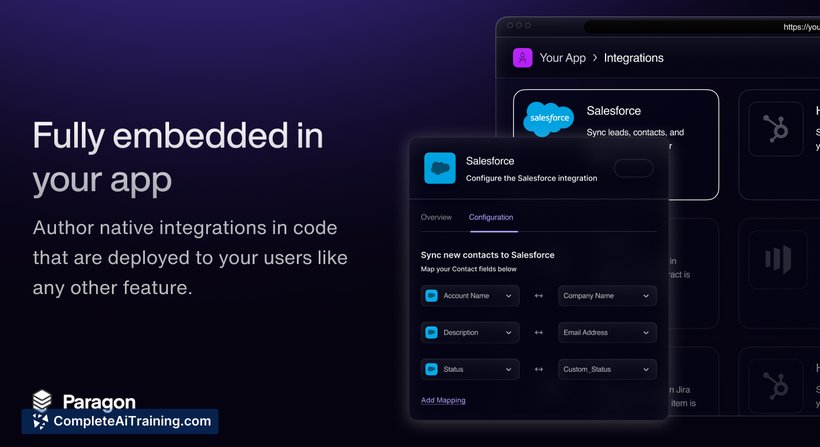

- Simple integration options: swap the endpoint, use SDKs (JavaScript, Python), RapidAPI, or a Docker image.

Cons

- Limited public detail on pricing tiers means teams must test or contact the provider for precise cost planning.

- Introducing an intermediary adds an operational dependency and a possible small latency increase compared with direct API calls.

- As a newly launched product, some advanced features or enterprise-grade integrations may still be evolving.

ReliAPI is a strong fit for development teams and startups that rely on LLMs and want to protect budgets and reduce infrastructure work. It is especially useful for projects that see repeated queries or are at risk of accidental duplicate calls. Evaluate it first on a subset of traffic during the trial window to confirm the savings and the latency impact.
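One way to run that kind of subset evaluation is to route a fixed fraction of requests through the new path and leave the rest on the direct path. The sketch below is a generic approach, not something taken from ReliAPI's documentation; `use_trial_route` and the `request_id` parameter are hypothetical names. It hashes a stable request or user ID so that the same ID always lands on the same side of the split.

```python
import hashlib


def use_trial_route(request_id: str, fraction: float) -> bool:
    """Return True for roughly `fraction` of IDs, deterministically per ID.

    request_id: any stable identifier (request, user, or session ID)
    fraction:   share of traffic to send through the trial path, 0.0 to 1.0
    """
    digest = hashlib.sha256(request_id.encode("utf-8")).digest()
    # Interpret the first 8 bytes as an integer bucket in [0, 2**64).
    bucket = int.from_bytes(digest[:8], "big")
    # Integer comparison avoids float rounding at the 0.0 and 1.0 boundaries.
    return bucket < int(fraction * 2 ** 64)
```

For example, calling `use_trial_route(user_id, 0.10)` would send about a tenth of users through the proxy, which lets you compare cost and latency between the two groups before moving more traffic.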
