About Repo Prompt

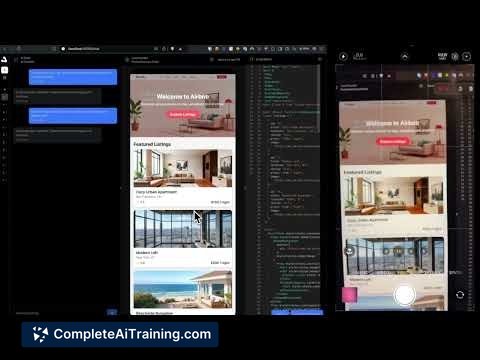

Repo Prompt is a tool that builds precise context from a codebase so large language models can focus on the relevant parts of a project. It analyzes files and functions, extracts the most pertinent snippets, and packs that context to fit within model token limits.

Review

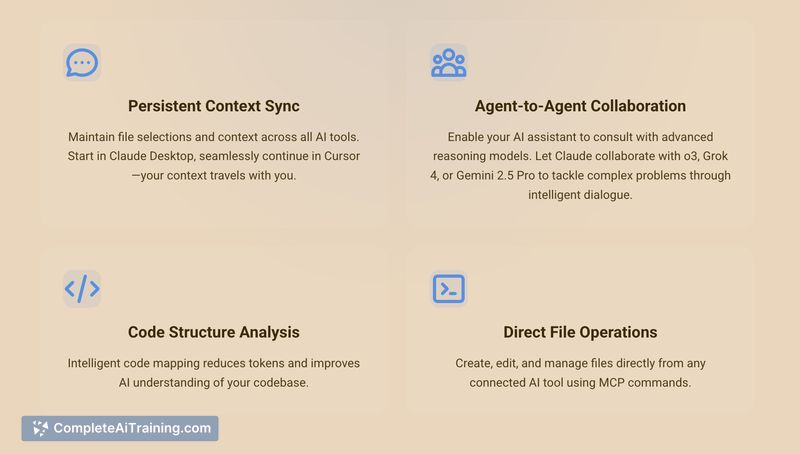

Repo Prompt is focused on making AI-assisted coding workflows more efficient by reducing irrelevant context and token waste. It combines a Context Builder, a command-line interface, and an MCP server component to integrate with popular coding agents and existing LLM subscriptions.

Key Features

- Context Builder that analyzes a repository, isolates relevant files and code sections, and creates a dense context prompt.

- Context packing that trims and prioritizes code so prompts fit within model limits, leaving more of the budget for the model's reasoning tokens.

- Integration-friendly: works with existing AI subscriptions (no extra API costs from the product itself) and exports to popular coding agents.

- CLI and /rp-build automation that researches the repo, generates a plan, and hands the prepared context off to an agent.

- MCP (Model Context Protocol) server that exposes context analysis as a backend for coding agents that lack deep repository discovery of their own.
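This page does not describe how Repo Prompt actually selects or packs code. The Python sketch below only illustrates the general idea behind the Context Builder and context-packing features: rank snippets by relevance to a task, then add them greedily until a token budget is spent. The keyword-overlap scoring, the rough four-characters-per-token estimate, and the names `Snippet`, `relevance`, and `pack_context` are all hypothetical stand-ins, not the product's API.

```python
import re
from dataclasses import dataclass

IDENT = re.compile(r"[A-Za-z_][A-Za-z0-9_]*")


@dataclass
class Snippet:
    path: str  # file the snippet came from (hypothetical structure)
    text: str  # the code or prose itself


def estimate_tokens(text: str) -> int:
    # Crude heuristic (assumption): roughly 4 characters per token.
    # A real tool would use the target model's own tokenizer.
    return max(1, len(text) // 4)


def relevance(snippet: Snippet, query: str) -> int:
    # Naive keyword overlap: count distinct identifiers shared by the
    # query and the snippet. A hypothetical stand-in for real code analysis.
    query_terms = {t.lower() for t in IDENT.findall(query)}
    snippet_terms = {t.lower() for t in IDENT.findall(snippet.text)}
    return len(query_terms & snippet_terms)


def pack_context(snippets: list[Snippet], query: str, budget: int) -> list[Snippet]:
    # Rank by relevance, highest first; sorted() is stable, so ties keep
    # their original order even with reverse=True.
    ranked = sorted(snippets, key=lambda s: relevance(s, query), reverse=True)
    packed: list[Snippet] = []
    used = 0
    for s in ranked:
        if relevance(s, query) == 0:
            break  # everything after this point is irrelevant too
        cost = estimate_tokens(s.text)
        if used + cost <= budget:  # skip snippets that would overflow the budget
            packed.append(s)
            used += cost
    return packed


if __name__ == "__main__":
    repo = [
        Snippet("auth.py", "def login(user, password): return check_password(user, password)"),
        Snippet("README.md", "Project overview and install steps."),
        Snippet("db.py", "def check_password(user, password): ..."),
    ]
    chosen = pack_context(repo, "fix the login password check", budget=40)
    print([s.path for s in chosen])
```

Greedy packing by score is the simplest reasonable choice here; a production tool would likely also trim large files down to the relevant functions rather than include or drop them whole.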

Pricing and Value

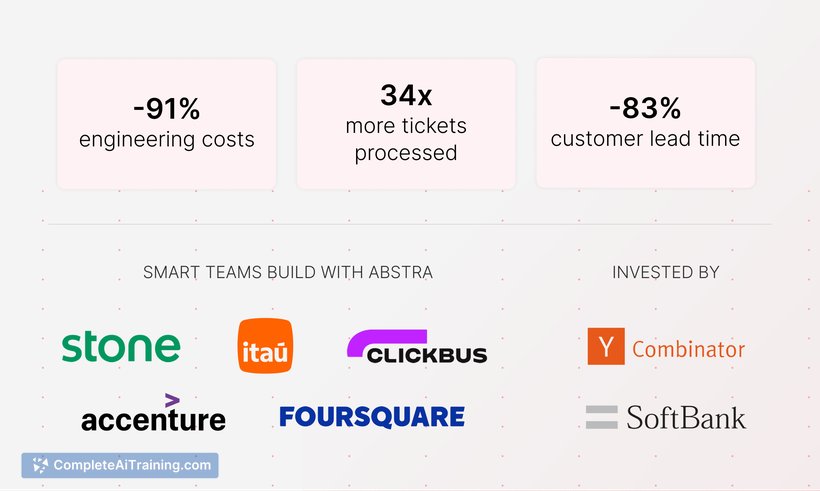

The product page indicates that free options are available and emphasizes that Repo Prompt uses your existing LLM subscriptions, so the service itself adds no API charges. Advanced features such as MCP server access and CLI-driven automation may be gated behind paid tiers or usage plans; check the official site for current plan details. The core value proposition is token savings, faster agent responses, and more accurate planning, all from supplying focused, high-quality context.

Pros

- Reduces token waste by selecting only the most relevant code and sections for a task.

- Fits into existing AI subscriptions and agent workflows without adding separate API costs.

- Automation via CLI and /rp-build speeds repetitive context-preparation tasks.

- MCP server enables deeper integration so coding agents can benefit from repo-aware context.

- Useful when switching between multiple projects or keeping a separate context window per repository.

Cons

- Setup and CLI/MCP configuration require some technical familiarity and initial effort.

- Relies on external LLM subscriptions for model execution, so those costs remain separate.

- As a newly launched product, some integrations or polish may still be evolving.

Repo Prompt is a good fit for developers and teams who use AI coding agents and want to reduce token usage while improving context quality. It works especially well for projects where targeted planning, code review, or automated agent workflows are part of the development process. For those willing to invest a little setup time, it can streamline how models interact with your codebase.
