About TensorZero

TensorZero is an open-source platform for building industrial-grade large language model (LLM) applications. It provides a unified API for accessing multiple LLM providers, along with tools for observability, optimization, evaluation, and experimentation.

Its modular design allows users to adopt components incrementally, making it suitable for diverse AI infrastructure needs.

Review

TensorZero offers a comprehensive stack that brings together essential features required for managing and improving LLM-based applications. By integrating everything from unified API access to feedback-driven optimization, it addresses many common challenges faced by developers working with LLMs.

The platform stands out for its open-source nature and focus on performance, aiming to add minimal latency to each request while enabling detailed monitoring and experimentation.

Key Features

- Unified API Gateway: Access multiple LLM providers through a single, high-performance API that adds under 1 millisecond of latency overhead at the 99th percentile.

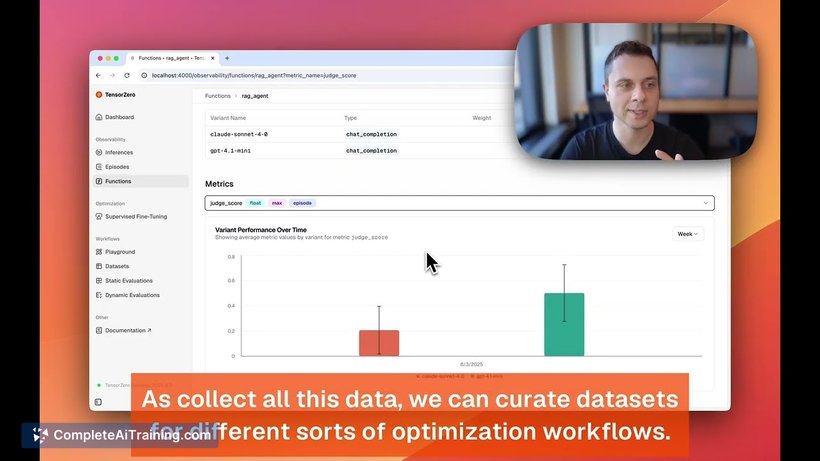

- Observability: Store and retrieve inference data and human feedback programmatically or via a user interface for in-depth monitoring.

- Optimization Tools: Use collected metrics and feedback to optimize prompts, models, and inference strategies.

- Evaluation Framework: Benchmark individual inferences or entire workflows using heuristics and LLM-based judges to assess performance.

- Experimentation Support: Built-in A/B testing, routing, fallbacks, and retries to safely deploy model updates and test variations.
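To make the experimentation features above more concrete, here is a minimal, generic sketch of weighted A/B variant selection combined with retries and fallbacks. It is not TensorZero's API or implementation: the names `pick_variant`, `call_with_fallbacks`, and the `Provider` callable type are hypothetical and exist only for illustration.

```python
import random
from typing import Callable, Dict, List, Optional

# Hypothetical provider type: takes a prompt and returns a completion.
Provider = Callable[[str], str]


def pick_variant(weights: Dict[str, float], rng: random.Random) -> str:
    """Pick a variant name at random, in proportion to its weight (A/B assignment)."""
    names = list(weights)
    return rng.choices(names, weights=[weights[n] for n in names], k=1)[0]


def call_with_fallbacks(prompt: str, providers: List[Provider], attempts: int = 2) -> str:
    """Try providers in order, giving each one `attempts` tries before falling back."""
    last_error: Optional[Exception] = None
    for provider in providers:
        for _ in range(attempts):
            try:
                return provider(prompt)
            except Exception as exc:  # illustrative: real code would catch narrower errors
                last_error = exc
    raise RuntimeError("all providers failed") from last_error
```

In a sketch like this, a caller would first pick a variant for the request, then pass that variant's ordered provider list to `call_with_fallbacks`, so a failing primary provider degrades to a backup instead of surfacing an error to the user.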

Pricing and Value

TensorZero is offered as a free, open-source solution, making it accessible to developers and organizations without upfront costs. This model allows users to integrate and customize the platform to their specific needs without vendor lock-in or licensing fees.

The value lies in consolidating multiple LLM infrastructure capabilities into one stack, potentially reducing development time and operational complexity. By enabling fine-tuning and experimentation workflows, it can help improve application performance while controlling costs.

Pros

- Open-source and free to use, encouraging community contributions and transparency.

- Unified API simplifies integration with various LLM providers, reducing development overhead.

- Low-latency design enhances application responsiveness.

- Comprehensive tooling for observability and optimization supports continuous improvement.

- Built-in experimentation features enable safer deployment of model updates.

Cons

- As an open-source project, it may require more technical expertise to set up and maintain than commercial turnkey solutions.

- Limited official support options could be a challenge for teams needing dedicated assistance.

- Some advanced features like fine-tuning workflows might require additional configuration and understanding of LLM internals.

Overall, TensorZero is well-suited for developers and organizations looking to build scalable, maintainable LLM applications with a focus on performance and flexibility. It fits teams comfortable with open-source tools who want to implement custom workflows for optimization and experimentation. Enterprises aiming for industrial-grade LLM deployments will find its modular approach and comprehensive feature set valuable for iterating and improving AI-driven products.
