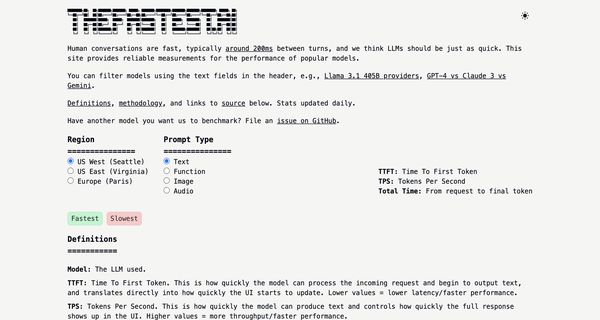

About: TheFastest.ai

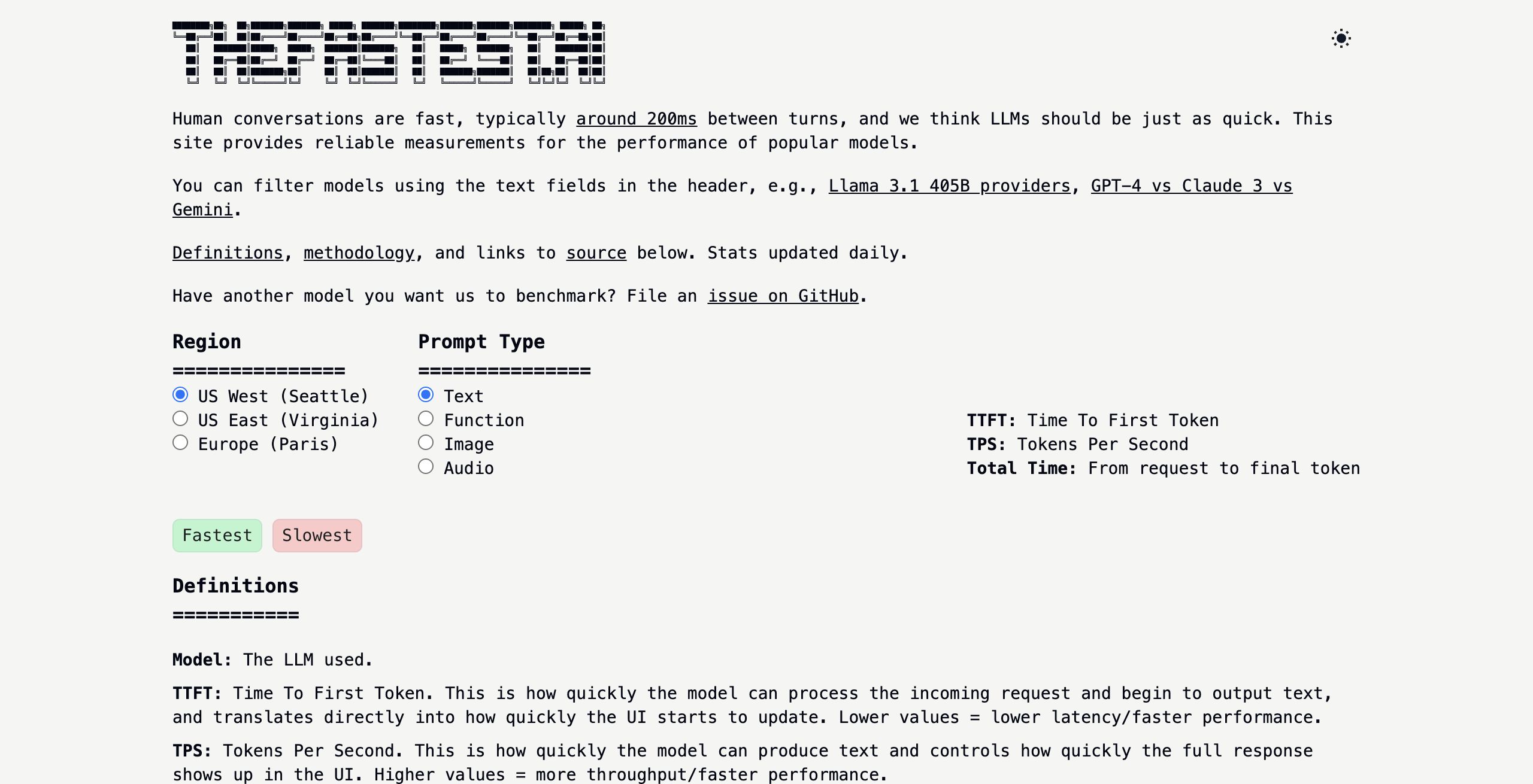

TheFastest.ai is a benchmarking platform for measuring the speed of large language models (LLMs). It reports Time To First Token (TTFT), Tokens Per Second (TPS), and total response time, so users can compare how quickly different models start and finish responding. The data is updated daily, giving developers and businesses current performance figures to use when tuning conversational AI applications for responsiveness.

The platform is most useful to organizations choosing an LLM to integrate into their systems, since it grounds the decision in measured performance data. Users can also track the speed of a chosen model over time and compare models for specific applications or geographic regions. TheFastest.ai emphasizes transparent, accurate benchmarking, which makes it a useful resource for improving AI-driven interactions.

Review: TheFastest.ai

Introduction

TheFastest.ai is a performance benchmarking tool designed to measure and compare the speed of large language models (LLMs). Aimed primarily at developers and businesses, it focuses on Time To First Token (TTFT), Tokens Per Second (TPS), and total response time. As conversational AI applications depend increasingly on fast responses, this review looks at how well TheFastest.ai helps identify which LLMs are responsive enough for modern applications.

Key Features

- Performance Metrics: Measures TTFT, TPS, and total response time to provide a clear overview of model responsiveness and throughput.

- Daily Updated Statistics: Benchmarks are refreshed daily from multiple data centers, so the performance data stays current.

- Multi-Region Testing: Benchmarks are run from several regions (including US West, US East, and Europe), which helps assess regional latency and performance variability.

- Robust Methodology: Utilizes techniques such as a warmup connection to minimize HTTP setup latency and the "Try 3, Keep 1" approach to filter out outlier results for more accurate measurements.

- User-Friendly Filtering: Provides text fields to filter models by various criteria, making it easier to compare specific models such as GPT-4, Claude 3, or Gemini.

- Transparency and Open Source: Source code and methodology details are available on GitHub, promoting transparency and community involvement.
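To make the metrics and the "Try 3, Keep 1" idea above concrete, here is a minimal sketch in Python. It is not TheFastest.ai's actual code. The names `RunMetrics`, `measure`, and `keep_best` are hypothetical, and two assumptions are built in: TPS is counted over the tokens that arrive after the first one, and "keep 1" means keeping the run with the lowest total response time. The review itself does not specify either detail.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class RunMetrics:
    """Timing summary for one streamed response (hypothetical type)."""
    ttft: float   # seconds from request start to the first token
    tps: float    # tokens per second once streaming has begun
    total: float  # seconds from request start to the last token


def measure(request_start: float, token_times: Sequence[float]) -> RunMetrics:
    """Derive TTFT, TPS, and total time from per-token arrival timestamps."""
    if not token_times:
        raise ValueError("need at least one token timestamp")
    ttft = token_times[0] - request_start
    total = token_times[-1] - request_start
    streaming = total - ttft
    # Assumption: throughput counts only tokens after the first one,
    # so TTFT and TPS describe separate phases of the response.
    tps = (len(token_times) - 1) / streaming if streaming > 0 else 0.0
    return RunMetrics(ttft=ttft, tps=tps, total=total)


def keep_best(runs: Sequence[RunMetrics]) -> RunMetrics:
    """Keep one run out of several; assumed here to be the fastest overall."""
    if not runs:
        raise ValueError("need at least one run")
    return min(runs, key=lambda run: run.total)


# Three illustrative runs with made-up timestamps (in seconds).
runs = [
    measure(0.0, [0.5, 1.0, 1.5]),
    measure(10.0, [10.25, 10.5, 10.75]),
    measure(0.0, [2.0, 3.0, 4.0]),  # a slow outlier, e.g. due to queuing
]
best = keep_best(runs)
```

Keeping a single run out of three discards slow outliers without averaging them into the result, which is one plausible reading of how the approach filters noise.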

Pros and Cons

- Pros:

  - Provides granular benchmarks on key performance indicators crucial for optimizing conversational AI.

  - Daily data updates ensure that performance statistics remain relevant and timely.

  - Multi-region testing offers a broad view of LLM performance across various geographical locations.

  - Robust, transparent methodology increases trust in the data presented.

  - Open source elements allow for community validation and potential customization.

- Cons:

  - Focuses exclusively on performance metrics; does not evaluate other aspects such as model accuracy or contextual understanding.

  - May not provide a comprehensive solution for users looking to integrate full-scale AI management systems.

Final Verdict

TheFastest.ai is an excellent tool for developers, businesses, and technical enthusiasts who prioritize speed and responsiveness in large language models. Its clear focus on performance benchmarking makes it well suited to informed decisions about LLM integration based on latency and throughput. However, if your concerns extend beyond performance (for example, to model accuracy, cost efficiency, or broader AI system integration), you will need to complement it with additional evaluation tools. Overall, TheFastest.ai is a reliable resource for gauging the speed of conversational AI models and selecting the one that best meets your performance requirements.
