About Tinker

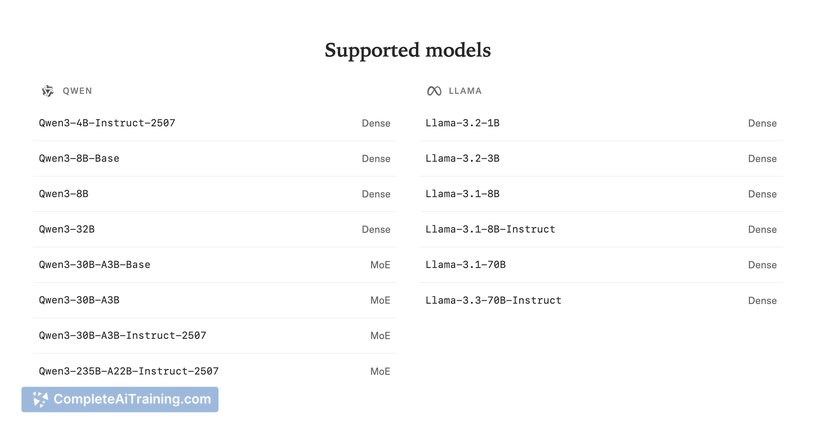

Tinker is an API-focused platform for fine-tuning open-source language models using LoRA. It targets researchers and developers who want close control over training data and algorithms while offloading infrastructure management.
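Tinker is built around LoRA (low-rank adaptation), so a brief sketch of what a LoRA layer computes may help readers who are new to the technique. This is a generic, pure-Python illustration of the usual formulation h = Wx + (α/r)·BAx, not Tinker's implementation. The names `LoRALinear` and `matvec` are hypothetical. The base weights W stay frozen, and only the small matrices A and B would be trained. B starts at zero, so the adapted layer initially behaves exactly like the base layer.

```python
import random


def matvec(m, v):
    """Multiply a matrix (a list of rows) by a vector (a list of floats)."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


class LoRALinear:
    """Hypothetical illustration: a frozen linear layer W plus a low-rank update B @ A.

    Shapes: W is (d_out, d_in), A is (r, d_in), B is (d_out, r).
    """

    def __init__(self, weight, rank, alpha, seed=0):
        rng = random.Random(seed)
        self.weight = weight                      # frozen base weights, never updated
        d_out, d_in = len(weight), len(weight[0])
        self.scale = alpha / rank                 # conventional LoRA scaling factor alpha / r
        # A starts small and random; B starts at zero, so B @ A == 0 at initialization
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(rank)]
        self.B = [[0.0] * rank for _ in range(d_out)]

    def forward(self, x):
        base = matvec(self.weight, x)                  # W x
        update = matvec(self.B, matvec(self.A, x))     # B (A x), without forming the full d_out x d_in update
        return [b + self.scale * u for b, u in zip(base, update)]

    def trainable_params(self):
        """Only A and B would be trained; W is excluded."""
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])


if __name__ == "__main__":
    W = [[1.0, 0.0, 2.0], [0.0, 1.0, -1.0]]   # frozen 2x3 base weights
    layer = LoRALinear(W, rank=1, alpha=2.0)
    print(layer.forward([1.0, 2.0, 3.0]))     # [7.0, -1.0]: B is zero, so the output equals W x
    print(layer.trainable_params())           # 5 (A is 1x3, B is 2x1)
```

In practice, frameworks apply this update to selected weight matrices inside a transformer and use tensor libraries rather than Python lists. The structure of the computation is the same.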

Review

This review covers Tinker's core capabilities, ease of use, and suitability for different user groups. It draws on the product's stated features: an API for fine-tuning, local Python training loops, and execution on distributed GPUs.

Key Features

- API-first fine-tuning workflow with support for LoRA on open-source models

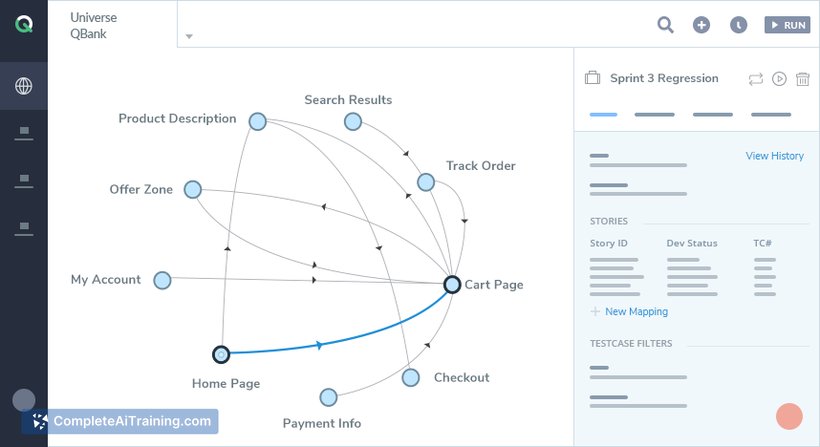

- Write training loops in Python locally and run them on distributed GPU clusters

- Control over data and algorithm choices, keeping model training private to your account

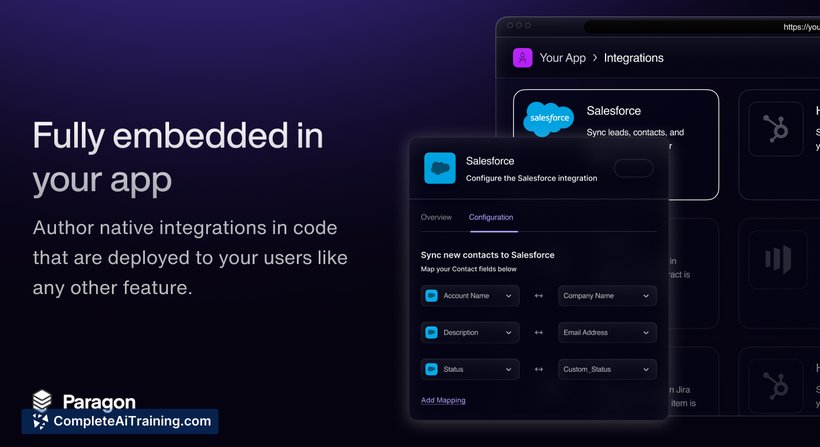

- Infrastructure management handled by the platform so teams don't need to provision their own GPU fleet

- Early access via private beta and a waitlist for broader availability
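The features above describe a split in which the training loop lives in the user's own Python code while the heavy computation runs on remote GPUs. The minimal sketch below illustrates that pattern under stated assumptions. `TrainingBackend`, `forward_backward`, `optim_step`, and `LocalStandIn` are hypothetical names chosen for this example and should not be read as Tinker's actual API. To make the loop runnable anywhere, the stand-in backend fits a one-parameter linear model in-process.

```python
from typing import List, Protocol, Tuple

Batch = List[Tuple[float, float]]  # (input, target) pairs


class TrainingBackend(Protocol):
    """Hypothetical interface: a remote service would perform these steps on its GPUs."""

    def forward_backward(self, batch: Batch) -> float: ...

    def optim_step(self, learning_rate: float) -> None: ...


class LocalStandIn:
    """In-process stand-in that fits y = w * x by minimizing mean squared error."""

    def __init__(self) -> None:
        self.w = 0.0
        self.grad = 0.0

    def forward_backward(self, batch: Batch) -> float:
        n = len(batch)
        loss = sum((self.w * x - y) ** 2 for x, y in batch) / n
        # Gradient of the mean squared error with respect to w, kept for the next optim_step
        self.grad = sum(2 * (self.w * x - y) * x for x, y in batch) / n
        return loss

    def optim_step(self, learning_rate: float) -> None:
        self.w -= learning_rate * self.grad  # plain SGD update


def train(backend: TrainingBackend, data: Batch, epochs: int,
          batch_size: int, lr: float) -> List[float]:
    """User-owned loop: batching, scheduling, and logging stay on the client."""
    losses = []
    for _ in range(epochs):
        for i in range(0, len(data), batch_size):
            losses.append(backend.forward_backward(data[i:i + batch_size]))
            backend.optim_step(lr)
    return losses


if __name__ == "__main__":
    data = [(x, 3.0 * x) for x in (1.0, 2.0, 3.0, 4.0)]  # targets follow y = 3x
    backend = LocalStandIn()
    losses = train(backend, data, epochs=50, batch_size=2, lr=0.05)
    print(round(backend.w, 4))  # 3.0
```

Because batching, scheduling, and logging stay on the client, a researcher can change the loss or the learning-rate schedule by editing local code. The backend only has to expose a few primitive operations.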

Pricing and Value

Tinker lists free options alongside paid plans, with pricing determined by compute usage and plan tier. The platform offers value for teams and institutions that want fine-grained control over model training without operating their own GPU infrastructure, though total cost scales with training volume and GPU time.

Pros

- Flexible API that supports research-style experiments and custom training loops

- LoRA support lets teams fine-tune models with less memory and compute than full-parameter updates, because only small low-rank adapter matrices are trained

- Offloads GPU orchestration, reducing the operational burden of distributed training

- Good fit for organizations that require data control and reproducible training pipelines
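The efficiency claim for LoRA comes from simple parameter counting. For a single weight matrix of shape d × k, LoRA replaces the full update with a product of two thin matrices of rank r. The dimensions below are illustrative, not taken from any specific model served by Tinker:

```latex
\Delta W = B A, \qquad B \in \mathbb{R}^{d \times r}, \; A \in \mathbb{R}^{r \times k}, \; r \ll \min(d, k)

\text{full update: } d\,k \text{ parameters} \qquad \text{LoRA update: } r\,(d + k) \text{ parameters}

d = k = 4096, \; r = 8: \quad d\,k = 16{,}777{,}216, \quad r\,(d + k) = 8 \times 8192 = 65{,}536 = \tfrac{1}{256}\, d\,k \approx 0.39\%
```

The same ratio applies to each matrix that receives an adapter, which is why LoRA runs need far less optimizer state and memory than full fine-tuning.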

Cons

- Access is limited in private beta, which may slow onboarding for some teams

- Costs can grow with large-scale training unless runs are carefully optimized

- Because Tinker is an early product, its integration docs and ecosystem tools may be less comprehensive than those of established platforms

Overall, Tinker is best suited for researchers, university groups, and developer teams that need hands-on control of fine-tuning workflows and want an API that handles distributed GPU execution. It is less suitable for casual users, or for teams seeking a fully managed, turnkey model hosting and tuning experience that does not require investing in ML expertise.
