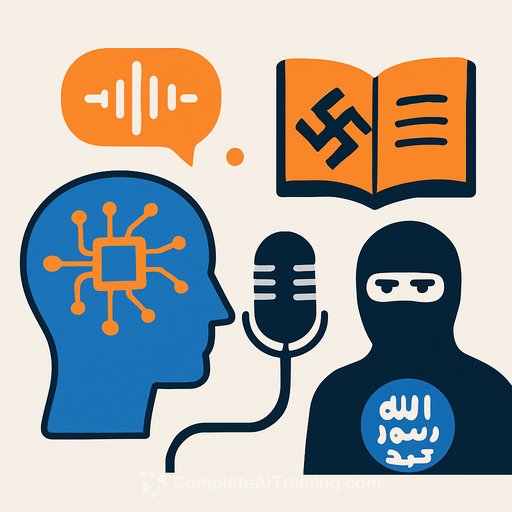

AI voice cloning is supercharging extremist propaganda

Voice cloning and translation aren't just changing music and marketing. They're giving extremist groups new reach and credibility at a pace that's hard to counter.

"The adoption of AI-enabled translation by terrorists and extremists marks a significant evolution in digital propaganda strategies," said Lucas Webber of Tech Against Terrorism and the Soufan Center. Advanced tools now produce translations that carry tone, emotion, and ideological intensity across languages, removing a key friction that used to slow these networks down.

What's changed: scale, polish, and multilingual reach

On the neo-Nazi far right, AI-generated English-language versions of Adolf Hitler's speeches have drawn tens of millions of streams across mainstream platforms. Researchers at the Global Network on Extremism and Technology (GNET) note that creators have used voice cloning services, including ElevenLabs, to mimic historical voices in English and other languages.

Accelerationist groups have also turned extremist texts into audio for modern consumption. The book Siege by James Mason was converted into an audiobook using AI to imitate Mason's voice, reinforcing its influence among violent factions linked to groups like The Base and the former Atomwaffen Division.

"The creator of the audiobook has previously released similar AI content; however, Siege has a more notorious history," said Joshua Fisher-Birch of the Counter Extremism Project. He highlighted the book's cult status on the extreme right and its explicit encouragement of lone-actor violence.

Jihadist networks are doing it too

Pro-Islamic State media outlets are using AI to turn text from publications into audio with narration, graphics, and subtitles. That shift turns long-form doctrine into bite-sized, multilingual content well-suited to modern feeds.

In the past, figures like Anwar al-Awlaki recorded English-language lectures to reach Western audiences. Now, encrypted channels push AI-generated content and translations at scale, including clips with Japanese subtitles, a workflow that used to be far slower and harder to produce.

Beyond audio: imagery, planning, and logistics

Across ideologies, groups have used free generative AI apps such as ChatGPT for imagery, research, and process shortcuts. These same networks were early adopters of cryptocurrency and of file sharing for 3D-printed weapons, reflecting a broader pattern: move fast on new tech, then flood channels before moderators and law enforcement respond.

Why this matters to PR, communications, IT, and developers

Deepfake voices can impersonate executives, public officials, or community leaders in minutes. A convincing audio clip can trigger a news cycle, move markets, or seed disinformation before it's challenged.

For comms and security teams, the issue isn't just authenticity; it's speed. You need fast detection, pre-approved messaging, and clear takedown paths across platforms.

Practical steps for the next 30 days

- PR/Comms: Publish an "Official Statements and Voices" page with verified channels and audio samples. Keep it current and easy to reference in a crisis.

- PR/Comms: Prepare a short holding statement for audio deepfakes that 1) denies authenticity, 2) points to the verified page, and 3) signals ongoing review with platform partners.

- PR/Comms: Stand up continuous monitoring for brand, executive, and product keywords across major platforms and popular mirrors. Log suspected fakes with timestamps and URLs.

- IT/Sec/Dev: Enable content provenance where possible and prioritize vendors that support the C2PA standard. Start adding provenance to your own media today.

- IT/Sec/Dev: Add lightweight audio checks: compare transcripts from multiple ASR engines, run speaker verification against known samples, and flag improbable channel/noise profiles.

- IT/Sec/Dev: Lock down internal TTS/cloning: require approval for custom voices, whitelist datasets, log prompts/outputs, and block fine-tunes on sensitive voices.

- Cross-functional: Pre-register platform reporting workflows, DM contacts, and escalation paths for faster takedowns. Align on legal review templates.
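The monitoring step ("log suspected fakes with timestamps and URLs") can be sketched in Python. This is a minimal illustration that assumes posts have already been collected from platforms as (url, text) pairs. The watchlist terms, the `SuspectedFake` record, and the CSV columns are hypothetical choices, not any monitoring product's API. The sketch covers only keyword matching and the timestamped log entry. Collection and human review are out of scope.

```python
import csv
import io
import re
from dataclasses import dataclass, asdict
from datetime import datetime, timezone

# Hypothetical watchlist: brand, executive, and product terms to monitor.
WATCHLIST = ["Acme Corp", "Jane Doe", "AcmeVoice"]


@dataclass
class SuspectedFake:
    """One log entry for a post that mentions a watched term."""
    seen_at: str        # UTC timestamp, ISO 8601
    url: str            # where the post was found
    matched_terms: str  # semicolon-separated watched terms found in the text


def match_terms(text: str, watchlist: list[str]) -> list[str]:
    """Return watched terms that appear as whole words, ignoring case."""
    return [
        term for term in watchlist
        if re.search(r"\b" + re.escape(term) + r"\b", text, flags=re.IGNORECASE)
    ]


def log_matches(posts: list[tuple[str, str]], watchlist: list[str]) -> list[SuspectedFake]:
    """Create a timestamped entry for every post that mentions a watched term."""
    entries = []
    for url, text in posts:
        terms = match_terms(text, watchlist)
        if terms:
            entries.append(SuspectedFake(
                seen_at=datetime.now(timezone.utc).isoformat(timespec="seconds"),
                url=url,
                matched_terms=";".join(terms),
            ))
    return entries


def to_csv(entries: list[SuspectedFake]) -> str:
    """Serialize entries to CSV text for a shared incident log."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["seen_at", "url", "matched_terms"])
    writer.writeheader()
    for entry in entries:
        writer.writerow(asdict(entry))
    return buffer.getvalue()


if __name__ == "__main__":
    posts = [
        ("https://example.com/post/1", "Leaked audio: Jane Doe announces Acme Corp layoffs"),
        ("https://example.com/post/2", "Unrelated cooking video"),
    ]
    print(to_csv(log_matches(posts, WATCHLIST)))
```

Whole-word, case-insensitive matching keeps noise down without missing lowercase mentions. A real deployment would also need fuzzy matching for misspellings and transliterations.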
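Two of the lightweight audio checks (comparing ASR transcripts and verifying the speaker) can be sketched in plain Python. This is a minimal sketch. It assumes you already have transcripts of the same clip from two or more ASR engines and speaker embeddings (fixed-length vectors from whatever speaker-verification model you use). The function names and both thresholds are hypothetical placeholders that you should calibrate on known-good recordings. They are not recommended values.

Transcript disagreement is measured as word-level edit distance divided by the length of the longer transcript. Speaker match is the cosine similarity between the clip's embedding and enrolled samples of the real speaker.

```python
import math
from itertools import combinations

# Hypothetical thresholds: calibrate against your own genuine recordings.
MAX_TRANSCRIPT_DISAGREEMENT = 0.25
MIN_SPEAKER_SIMILARITY = 0.75


def word_edit_distance(a: list[str], b: list[str]) -> int:
    """Levenshtein distance between word sequences (each edit costs 1)."""
    prev = list(range(len(b) + 1))
    for i, word_a in enumerate(a, start=1):
        curr = [i]
        for j, word_b in enumerate(b, start=1):
            curr.append(min(
                prev[j] + 1,                       # delete word_a
                curr[j - 1] + 1,                   # insert word_b
                prev[j - 1] + (word_a != word_b),  # substitute (free if equal)
            ))
        prev = curr
    return prev[-1]


def transcript_disagreement(transcripts: list[str]) -> float:
    """Worst normalized disagreement between any pair of ASR transcripts."""
    worst = 0.0
    for t1, t2 in combinations(transcripts, 2):
        w1, w2 = t1.lower().split(), t2.lower().split()
        longest = max(len(w1), len(w2)) or 1  # avoid dividing by zero
        worst = max(worst, word_edit_distance(w1, w2) / longest)
    return worst


def cosine_similarity(u: list[float], v: list[float]) -> float:
    """Cosine of the angle between two embedding vectors."""
    dot = sum(x * y for x, y in zip(u, v))
    norm = math.sqrt(sum(x * x for x in u)) * math.sqrt(sum(y * y for y in v))
    return dot / norm if norm else 0.0


def best_speaker_match(clip: list[float], enrolled: list[list[float]]) -> float:
    """Highest similarity between the clip and any enrolled sample."""
    return max(cosine_similarity(clip, ref) for ref in enrolled)


def audio_flags(transcripts: list[str], clip: list[float],
                enrolled: list[list[float]]) -> list[str]:
    """Reasons to route the clip to manual review (empty list if none)."""
    flags = []
    if transcript_disagreement(transcripts) > MAX_TRANSCRIPT_DISAGREEMENT:
        flags.append("ASR engines disagree on the transcript")
    if best_speaker_match(clip, enrolled) < MIN_SPEAKER_SIMILARITY:
        flags.append("voice does not match enrolled samples")
    return flags
```

Neither check proves a clip is fake. Both are cheap signals for deciding which clips a human should review first.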
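The lockdown controls for internal TTS and cloning (approval for custom voices, allowlisted datasets, logged prompts and outputs, no fine-tunes on sensitive voices) can be expressed as a policy gate that runs before any synthesis job. This is a minimal sketch for a hypothetical internal service. `VoiceRequest`, the three policy sets, and the in-memory `AUDIT_LOG` are illustrative names, not part of any vendor's API. The gate records every request, whether it is allowed or refused. After synthesis, it logs a fingerprint of the generated audio.

```python
import hashlib
import json
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional

# Hypothetical policy data, maintained by whoever approves custom voices.
APPROVED_VOICES = {"brand-narrator-v1"}      # custom voices that passed approval
ALLOWED_DATASETS = {"licensed-studio-2024"}  # training data cleared for use
PROTECTED_VOICES = {"ceo", "cfo"}            # sensitive voices: never fine-tune

AUDIT_LOG: list[str] = []  # stand-in for an append-only log store


@dataclass
class VoiceRequest:
    user: str
    voice_id: str
    prompt: str
    dataset: Optional[str] = None  # set only for fine-tuning jobs
    fine_tune: bool = False


def _audit(event: dict) -> None:
    """Append a timestamped JSON record to the audit log."""
    event["at"] = datetime.now(timezone.utc).isoformat(timespec="seconds")
    AUDIT_LOG.append(json.dumps(event, sort_keys=True))


def check_request(req: VoiceRequest) -> tuple[bool, str]:
    """Apply the policy rules in order; log the decision either way."""
    if req.fine_tune and req.voice_id in PROTECTED_VOICES:
        allowed, reason = False, "fine-tuning a protected voice is blocked"
    elif req.voice_id not in APPROVED_VOICES:
        allowed, reason = False, "voice has not been approved"
    elif req.fine_tune and req.dataset not in ALLOWED_DATASETS:
        allowed, reason = False, "dataset is not on the allowlist"
    else:
        allowed, reason = True, "ok"
    _audit({"type": "request", "user": req.user, "voice_id": req.voice_id,
            "prompt": req.prompt, "allowed": allowed, "reason": reason})
    return allowed, reason


def record_output(req: VoiceRequest, audio: bytes) -> str:
    """Log a SHA-256 fingerprint of generated audio for later tracing."""
    digest = hashlib.sha256(audio).hexdigest()
    _audit({"type": "output", "user": req.user, "voice_id": req.voice_id,
            "sha256": digest})
    return digest
```

The rules are checked in a fixed order, so the most serious violation (touching a protected voice) is the reason that gets reported. Logging refused requests as well as approved ones shows who attempted a sensitive job, not just who ran one.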

Policy moves to complete this quarter

- Acceptable use policy: Define how staff may use AI audio, translation, and image tools. Include red lines and examples.

- Vendor due diligence: Ask about watermarking, provenance, abuse response times, and law-enforcement cooperation.

- Training: Run short, role-specific sessions on spotting synthetic media and handling hoaxes.

What to watch

- Voice cloning-as-a-service offerings that remove friction and cost.

- Translation + TTS pipelines that localize propaganda at scale.

- Community-built audiobooks of historical extremist texts.

- Short-form clips with synced subtitles optimized for virality.

Key takeaways

Extremist groups are using generative tools to upgrade distribution, credibility, and reach. The same tools your teams use for productivity are being repurposed to create convincing fakes and multilingual content.

Close the gap with simple, fast defenses: provenance on your media, clear authority signals, lightweight detection checks, and pre-agreed crisis workflows. Small moves now prevent big headaches later.

Sources and further reading

- Global Network on Extremism and Technology: Research on extremist use of emerging tech

- Coalition for Content Provenance and Authenticity: Open standard for media authenticity
