Human Oversight: The Missing Link in AI-Driven Marketing

AI now sits inside audience segmentation, creative testing, and media optimisation. It speeds up execution. But marketing is still human to human. Without oversight, AI can amplify bias, erode trust, and disconnect brands from the people they serve.

The goal isn't to slow progress. It's to keep judgement, creativity, and empathy in the loop, so your brand scales without losing its edge.

Keep marketing rooted in trust

Ethics should sit at the centre of your AI plans. AI processes data at scale, but it does not understand culture or context. Biased data leads to biased outputs, and fast. When the stakes are high (regulatory, reputational, or ethical), keeping a human in the loop is a non-negotiable safeguard, much like good GDPR practice.

Think food safety: many people don't know the risks in reheating cooked rice. Algorithmic bias is similar: often invisible until harm shows up. Awareness, education, and process guardrails prevent bad outcomes.

The risks are already visible: unrepresentative training data has produced stereotypical visuals and reinforced outdated targeting. Marketing must reflect diverse audiences to be effective. Regular audits, curated datasets, and ongoing human intervention are essential to catch and correct bias before it hits your customers.

Trust is fragile. A study from PrivacyEngine surveying 1.3 million people found 60% believe companies misuse their data. People expect clarity about how their data is used. Oversight isn't a brake; it's how you build fair, sustainable systems customers will accept.

Build ethics into every AI strategy

Start with transparency: what you collect, why you collect it, and how decisions are made. Secure informed consent. Set boundaries on what can be automated. Keep a human touch at key moments where empathy, context, or nuance matter.

Governance gives confidence. Put monitoring in place, run ethical audits, and require explainability. Recent UK data legislation has updated cookie consent rules, adjusted automated decision-making restrictions, and formalised complaints processes, creating both fresh opportunities and new responsibilities for marketing teams.

Offer clear opt-in and opt-out choices. Explain complex models in plain language. Brands that are open earn equity; brands that are vague lose it. For practical guidance on lawful data use, see the UK ICO's GDPR resources.

This is a creative question as much as a technical one. AI can accelerate iterations, but it can't replicate imagination or strategic taste. Treat AI outputs like any creative draft: stress-test for authenticity, emotion, and alignment with brand purpose.

Balance automation with judgement

Oversight isn't overhead; it's how you get the best from AI. Over-automation risks generic work and a slow loss of team capability. Draw a clear line: delegate routine tasks (FAQs, summarising surveys) to AI; keep high-stakes, emotionally sensitive, and brand-defining decisions under human control.
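
One way to make that line concrete is a simple routing rule. The sketch below is illustrative only: the `Task` fields, the task names, and the three route labels are assumptions made for this example, not an established standard.

```python
from dataclasses import dataclass

# Hypothetical task names for illustration; adapt to your own workflows.
ROUTINE_TASKS = {"faq_reply", "survey_summary"}


@dataclass
class Task:
    kind: str
    high_stakes: bool = False            # regulatory, reputational, or ethical risk
    emotionally_sensitive: bool = False
    brand_defining: bool = False


def route(task: Task) -> str:
    """Return who owns the decision: 'human', 'ai', or 'ai_draft_human_review'."""
    if task.high_stakes or task.emotionally_sensitive or task.brand_defining:
        return "human"
    if task.kind in ROUTINE_TASKS:
        return "ai"
    # Unknown or unclassified work: AI may draft, but a person signs off.
    return "ai_draft_human_review"


print(route(Task("faq_reply")))                              # ai
print(route(Task("faq_reply", emotionally_sensitive=True)))  # human
print(route(Task("campaign_concept")))                       # ai_draft_human_review
```

Anything that isn't explicitly routine falls through to human review, so new or unclassified task types default to the safer path.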

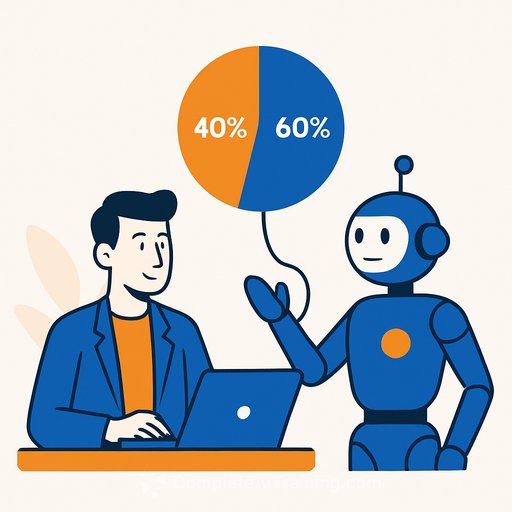

A practical rule of thumb: aim for something like 60% human-led stewardship (purpose, positioning, emotional intelligence) and 40% AI-driven activation (scale, speed, optimisation). Adjust by risk and customer impact.

Human expertise is vital when interpreting model outputs. Data without context misleads. AI can surface patterns; people connect those patterns to psychology, culture, and behaviour. Double down on the skills AI lacks: value-based judgement, strategy, empathy, and reducing bias in outcomes.

Skill up your team

AI literacy and ethics are now core skills for everyone in marketing, not just specialists. Curiosity still wins. Invest in training that blends practical tools with governance, bias awareness, and measurement.

If you're building capability, explore focused upskilling paths for marketing teams.

Operational checklist: put human oversight to work

- Map AI use by risk and customer impact; assign human gatekeepers for high-risk flows.

- Define red lines: decisions that must remain human (pricing exceptions, vulnerable customers, brand tone for sensitive topics).

- Curate training data: document sources, check representativeness, remove sensitive proxies, and track versioning.

- Audit regularly: bias tests across segments, performance drift checks, and manual spot reviews of outputs.

- Require explainability: short, plain-language rationales for significant automated suggestions or decisions.

- Upgrade consent and preferences: clear opt-in/out, refreshed cookie notices, and easy preference management.

- Stand up governance: an ethics review cadence, issue logging, and RACI for approvals.

- Train your team: AI literacy, prompt quality, bias mitigation, and incident response drills.

- Track trust KPIs: complaint rates, opt-out trends, sentiment by segment, and creative inclusivity scores.
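
The bias tests across segments in the audit step could start with something as simple as comparing how often each segment receives an offer. The sketch below is one possible check under stated assumptions: the record format, the function names, and the 0.8 ratio threshold are illustrative choices (the threshold borrows the "four-fifths" heuristic from employment-selection analysis), and a flag is a prompt for human review rather than proof of bias.

```python
from collections import defaultdict


def selection_rates(records):
    """records: iterable of (segment, was_selected) pairs, an assumed format."""
    selected = defaultdict(int)
    total = defaultdict(int)
    for segment, was_selected in records:
        total[segment] += 1
        selected[segment] += int(was_selected)
    return {segment: selected[segment] / total[segment] for segment in total}


def flag_segments(rates, min_ratio=0.8):
    """Flag segments whose rate is below min_ratio times the best-served segment."""
    best = max(rates.values(), default=0)
    if best == 0:
        return []  # nobody was selected, so there is nothing to compare
    return sorted(s for s, rate in rates.items() if rate / best < min_ratio)


# Toy data: segment "a" is shown the offer 8 times in 10, segment "b" 5 in 10.
records = (
    [("a", True)] * 8 + [("a", False)] * 2
    + [("b", True)] * 5 + [("b", False)] * 5
)
rates = selection_rates(records)
print(rates)                 # {'a': 0.8, 'b': 0.5}
print(flag_segments(rates))  # ['b']
```

A check like this only surfaces a gap; deciding whether the gap is justified, and what to change, stays with the human reviewer.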

Looking ahead

AI will increase the speed, scale, and precision of our work. The marketer's role doesn't shrink; it shifts. AI suggests; humans decide. Define the right questions, design systems with insight, and keep models fit for purpose. Without clear direction, AI can drift from what your brand stands for.

While we operate in a narrow-AI reality, human oversight should stay tightly integrated. AI can accelerate performance; humans preserve purpose. Brands that over-automate lose nuance. Brands that ignore automation fall behind. The answer is balance.

Commit to a human-first approach: embed oversight, invest in ethics, and keep brand purpose at the core. For structured learning paths across tools and roles, see current course options.
