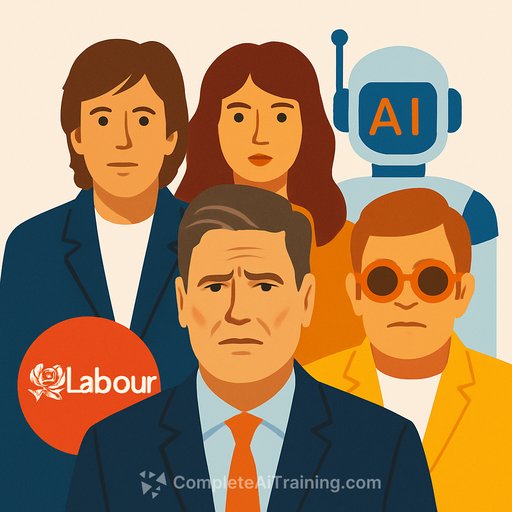

UK artists press Starmer to protect creative rights as AI pact with US nears

Some of the UK's biggest names - Paul McCartney, Kate Bush, Mick Jagger, Elton John and more than 70 others - have urged the prime minister to defend creators' rights ahead of a UK-US tech deal expected during Donald Trump's state visit. Their message is simple: stop AI firms from using copyrighted work without consent or transparency.

In a letter to government, the signatories argue ministers blocked attempts to require AI companies to disclose the copyrighted material used to train their models. They say this failure undermines basic rights set out in international and European human rights frameworks.

What's happening

The letter says copyright is being breached at scale to build AI systems. It criticises the government's refusal to accept amendments to the recent data (use and access) bill that would have forced AI firms to reveal training sources.

Elton John warned that proposals allowing AI training on protected work without permission "leave the door wide open for an artist's life work to be stolen". He added: "We will not accept this."

Other signatories include Annie Lennox, Antonia Fraser and Kwame Kwei-Armah. Creative bodies backing the letter include the News Media Association, the Society of London Theatre & UK Theatre, and Mumsnet.

The government previously floated an approach that would let AI companies use copyrighted work unless rightsholders opt out. It now says that is no longer the preferred option and has set up working groups with creative and AI representatives - including US firms such as OpenAI and Meta - to find a path forward.

A government spokesperson said concerns are being taken seriously and an impact report will be published by the end of March next year. The aim, they said, is to support rights holders while enabling training of AI models on high-quality material in the UK.

The rights case the letter leans on

The artists cite several frameworks: the European Convention on Human Rights' protection against being deprived of possessions except in the public interest, the Berne Convention's protection of authors' works, and the ICESCR's guarantee of creators' "moral and material interests".

- European Convention on Human Rights

- Berne Convention

- International Covenant on Economic, Social and Cultural Rights (ICESCR)

Why this matters for creatives

Training without consent erodes licensing income, weakens attribution, and blurs ownership of derivative outputs. Lack of transparency makes it hard to enforce existing rights or negotiate fair terms.

If a UK-US pact sets permissive norms, it could influence contracts, platform policies, and future case law. Decisions made now will shape how your back catalogue and future work are valued - or extracted - by AI companies.

What you can do now

- Put it in your contracts: add explicit clauses that prohibit AI training on your work without written consent, give you audit rights, and provide meaningful remedies for breach.

- Set clear licensing terms on your site and portfolios; include "no AI training" language and machine-readable signals where available.

- Register your works and keep dated source files. Documentation strengthens enforcement and negotiation.

- Use content credentials/watermarking where practical to assert authorship and track provenance across platforms.

- Monitor for misuse and be ready to file takedowns or complaints. Keep templates for notices to platforms and AI vendors.

- Join and engage with your trade bodies. Collective pressure is moving policy; align your asks around consent, transparency, and fair compensation.

- When adopting AI tools, prefer vendors offering transparency on training data, opt-out options, and legal indemnities for enterprise use.
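One way to act on the "machine-readable signals" point above is a robots.txt file that asks AI crawlers to stay away from your site. The sketch below is illustrative only: the crawler tokens listed are examples that should be checked against each vendor's current documentation, the function names and the example.com URL are hypothetical, and robots.txt is a voluntary convention that a crawler can ignore. It builds the file text and then uses Python's standard-library parser to confirm the rules read as intended.

```python
from urllib.robotparser import RobotFileParser

# Assumption: example AI crawler tokens. Verify the current names
# in each vendor's documentation before relying on them.
AI_CRAWLER_TOKENS = ["GPTBot", "CCBot", "Google-Extended"]


def build_robots_txt(tokens):
    """Return robots.txt text that disallows the given crawlers site-wide."""
    blocks = [f"User-agent: {token}\nDisallow: /" for token in tokens]
    # Every other crawler (for example, ordinary search engines) stays allowed.
    blocks.append("User-agent: *\nAllow: /")
    return "\n\n".join(blocks) + "\n"


def is_allowed(robots_txt, user_agent, url="https://example.com/portfolio/"):
    """Check whether the rules permit user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


robots_txt = build_robots_txt(AI_CRAWLER_TOKENS)
print(robots_txt)  # save this text as robots.txt at the root of your site
```

Treat a file like this as a statement of your terms, not as protection in itself. It works best alongside the contract language and licensing notices listed above.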

What to watch next

- Announcements tied to the UK-US tech pact during the state visit, especially any AI training provisions or disclosure requirements.

- The government's promised impact report due by end of March next year - and whether it leads to enforceable transparency and consent.

- Output from the cross-sector working groups, including whether creator voices outweigh large US platform interests.

Upskilling to protect your position

AI will be in your clients' toolchains regardless of policy outcomes. Build fluency so you can set terms, price usage, and protect your catalogue while benefitting from the parts that serve you.

Bottom line: push for consent, demand transparency, and keep your contracts tight. Policy is in motion; your leverage is strongest when you act collectively and document everything.