Inside MIT's CRESt: An AI Copilot for Experimental Science

Most AI tools in R&D are narrow: they tune parameters within small datasets. Scientists work differently. They draw on papers, prior results, images, chemical intuition, and conversations. MIT's Copilot for Real-world Experimental Scientists (CRESt) aims to meet that standard by fusing multimodal inputs and driving end-to-end experiments.

CRESt learns from literature, lab data, and visual feedback. It plans experiments, runs them with automation, watches what happens, and iterates. The goal isn't to replace scientists, but to act like a colleague who never gets tired and never stops learning.

What makes CRESt different

- Multimodal reasoning: Combines published results, experimental logs, chemical composition, and images to inform each decision.

- Active learning plus: Goes beyond standard active learning by continuously integrating new modalities and human feedback.

- Autonomous execution: Uses robots to synthesize, characterize structure, and test performance, closing the loop without waiting for manual handoffs.

- Natural language interface: Researchers can ask for image reviews, propose material recipes, or request analyses; CRESt explains choices and hypotheses.

How the loop runs

- Ask: A researcher frames a goal or question.

- Plan: CRESt searches prior literature and its data to propose experiments with rationale.

- Execute: Automated tools run synthesis, structural checks, and performance tests.

- Observe: Vision-language models watch for errors in real time and flag issues.

- Learn: Results feed the next cycle, refining both the model and the experiment queue.
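
The five steps above can be sketched as a plain control loop. This is a minimal illustration, not CRESt's code: `run_loop`, `LoopState`, `Result`, and the `toy_*` functions are hypothetical names, and the response surface in `toy_execute` is made up so the example runs without lab hardware.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Result:
    recipe: Dict[str, float]
    score: float
    flagged: bool  # True if the observer saw a problem during the run


@dataclass
class LoopState:
    goal: str  # Ask: the researcher's goal, stated once up front
    history: List[Result] = field(default_factory=list)


def run_loop(goal: str,
             plan: Callable[[LoopState], Dict[str, float]],
             execute: Callable[[Dict[str, float]], float],
             observe: Callable[[Dict[str, float], float], bool],
             cycles: int) -> LoopState:
    state = LoopState(goal=goal)
    for _ in range(cycles):
        recipe = plan(state)               # Plan: propose the next experiment
        score = execute(recipe)            # Execute: run it (here, a stub)
        flagged = observe(recipe, score)   # Observe: check the run for problems
        state.history.append(Result(recipe, score, flagged))  # Learn
    return state


# --- Hypothetical stand-ins so the loop can run end to end ---

def toy_plan(state: LoopState) -> Dict[str, float]:
    clean = [r for r in state.history if not r.flagged]
    if not clean:
        return {"pd_fraction": 0.5}
    best = max(clean, key=lambda r: r.score)
    # Try slightly less palladium than the best unflagged run so far.
    return {"pd_fraction": round(max(0.0, best.recipe["pd_fraction"] - 0.1), 2)}


def toy_execute(recipe: Dict[str, float]) -> float:
    # Made-up response surface that peaks at pd_fraction = 0.3.
    return round(1.0 - (recipe["pd_fraction"] - 0.3) ** 2, 4)


def toy_observe(recipe: Dict[str, float], score: float) -> bool:
    # Flag physically implausible scores; a real observer would inspect images.
    return not (0.0 <= score <= 1.0)


state = run_loop("lower palladium loading", toy_plan, toy_execute, toy_observe, cycles=4)
for r in state.history:
    print(r.recipe, r.score, r.flagged)
```

Passing the four stages in as functions keeps the loop itself independent of any instrument or model, so a stage can be swapped from a stub to real automation one at a time.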

Reproducibility built in

Reproducibility drifts when small lab errors go unnoticed. CRESt monitors experiments with cameras and vision-language models, catching subtle mistakes and suggesting fixes. This reduces variance and increases trust in the data that future decisions rest on.

Beyond narrow Bayesian optimization

Basic Bayesian optimization often gets stuck tuning what's already known. CRESt sidesteps that trap by merging priors from literature, structural images, and fresh measurements, then exploring broader spaces with intent. The result is wider search with fewer dead ends.
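
To make the idea of merging priors with fresh measurements concrete, here is a small sketch. It is not CRESt's algorithm and not a full Gaussian-process optimizer: it is a UCB-style selection rule over a handful of candidates, where each candidate starts with a prior mean standing in for literature knowledge. The names `Candidate`, `acquisition`, and `pick_next`, and all numbers, are assumptions for illustration.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class Candidate:
    name: str
    prior_mean: float          # hypothetical expectation drawn from literature
    prior_weight: float = 1.0  # how many measurements the prior counts for
    total: float = 0.0         # sum of measured values so far
    n: int = 0                 # number of measurements so far

    def posterior_mean(self) -> float:
        # Weighted blend of the prior and the measured values.
        return (self.prior_mean * self.prior_weight + self.total) / (self.prior_weight + self.n)

    def update(self, value: float) -> None:
        self.total += value
        self.n += 1


def acquisition(c: Candidate, kappa: float = 1.0) -> float:
    # Expected value plus an exploration bonus that shrinks as evidence accumulates.
    return c.posterior_mean() + kappa / math.sqrt(c.prior_weight + c.n)


def pick_next(candidates: List[Candidate], kappa: float = 1.0) -> Candidate:
    return max(candidates, key=lambda c: acquisition(c, kappa))


candidates = [Candidate("A", prior_mean=0.6), Candidate("B", prior_mean=0.5)]
first = pick_next(candidates)   # the prior favors A before any data exists
first.update(0.2)               # A measures worse than the literature suggested
second = pick_next(candidates)  # the choice shifts to the untested B
print(first.name, second.name)
```

The prior decides where the search starts, but a disappointing measurement quickly outweighs it, which is the behavior the paragraph above describes: prior knowledge guides exploration without locking it in.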

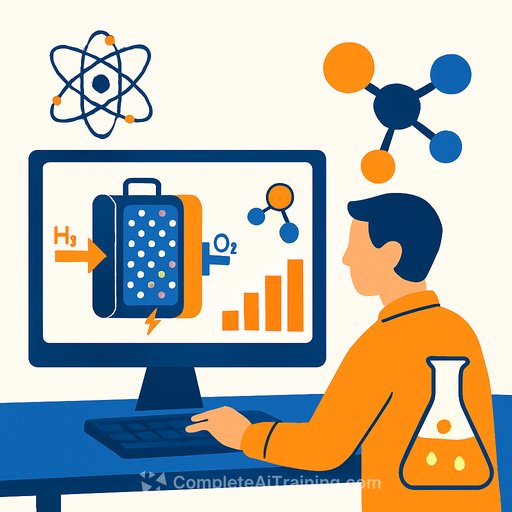

Case study: fuel-cell catalysts

Fuel-cell catalysts present a large search space with expensive materials, and progress has been slow. The team used CRESt to merge published papers, chemical recipes, structural images, and live electrochemical data. Over three months, the system evaluated 900+ chemistries and ran 3,500 electrochemical trials.

The outcome: a multielement catalyst that used less palladium yet delivered record performance. "We used a multielement catalyst that also incorporates many other cheap elements to create the optimal coordination environment for catalytic activity and resistance to poisoning species such as carbon monoxide and adsorbed hydrogen atom," says Zhang. The approach made low-cost options feasible where manual search stalled for years.

Human in the loop, by design

"In the field of AI for science, the key is designing new experiments," says Ju Li, School of Engineering Carl Richard Soderberg Professor of Power Engineering. "We use multimodal feedback - for example information from previous literature on how palladium behaved in fuel cells at this temperature, and human feedback - to complement experimental data and design new experiments. We also use robots to synthesize and characterize the material's structure and to test performance."

Li adds: "CRESt is an assistant, not a replacement, for human researchers. Human researchers are still indispensable. In fact, we use natural language so the system can explain what it is doing and present observations and hypotheses. But this is a step toward more flexible, self-driving labs."

What this means for your lab

- Start with data plumbing: Centralize prior runs, instrument logs, and image archives. Label outcomes and failure modes.

- Pick a high-iteration target: A workflow with short experiment cycles (e.g., electrochemistry, thin-film screening) shows value fast.

- Wire up automation: Even partial automation (sample handling, imaging, test scripts) enables reliable closed loops.

- Add human checkpoints: Require explanations for proposed experiments. Keep a record of accepted, modified, and rejected plans.

- Measure the loop: Track time-to-new-hypothesis, unique chemistries explored, and reproducibility metrics - not just best score.
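
The last two items can start as a simple log plus a summary function. The sketch below is one possible shape, not a prescribed schema: `PlanRecord`, `loop_metrics`, the field names, and the sample values are all hypothetical. It uses the hours between proposals as a stand-in for time-to-new-hypothesis and the coefficient of variation across replicate measurements as a stand-in for reproducibility.

```python
from dataclasses import dataclass
from statistics import mean, stdev
from typing import Dict, List


@dataclass
class PlanRecord:
    chemistry: str                  # composition label, e.g. "Pd-Fe"
    decision: str                   # "accepted", "modified", or "rejected"
    hours_since_last_plan: float    # proxy for time-to-new-hypothesis
    replicate_scores: List[float]   # repeated measurements of the same recipe


def loop_metrics(records: List[PlanRecord]) -> Dict[str, object]:
    run = [r for r in records if r.decision != "rejected"]
    # Coefficient of variation per recipe; needs at least two replicates
    # and a nonzero mean to be defined.
    cvs = [
        stdev(r.replicate_scores) / mean(r.replicate_scores)
        for r in run
        if len(r.replicate_scores) >= 2 and mean(r.replicate_scores) != 0
    ]
    scores = [s for r in run for s in r.replicate_scores]
    return {
        "unique_chemistries": len({r.chemistry for r in run}),
        "mean_hours_to_new_plan": mean([r.hours_since_last_plan for r in records]),
        "decisions": {
            d: sum(r.decision == d for r in records)
            for d in ("accepted", "modified", "rejected")
        },
        "mean_replicate_cv": mean(cvs) if cvs else None,
        "best_score": max(scores) if scores else None,
    }


records = [
    PlanRecord("Pd-Fe", "accepted", 4.0, [1.0, 1.0]),
    PlanRecord("Pd-Co", "modified", 6.0, [2.0, 2.0]),
    PlanRecord("Pd-Fe", "accepted", 2.0, [3.0]),
    PlanRecord("Pd-Ni", "rejected", 4.0, []),
]
print(loop_metrics(records))
```

Reporting the decision counts and replicate spread next to the best score makes it harder for a single lucky run to dominate the picture of how the loop is performing.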

Read the study

The full study was published in Nature. For methodology details and validation, see Nature's journal site: nature.com.
