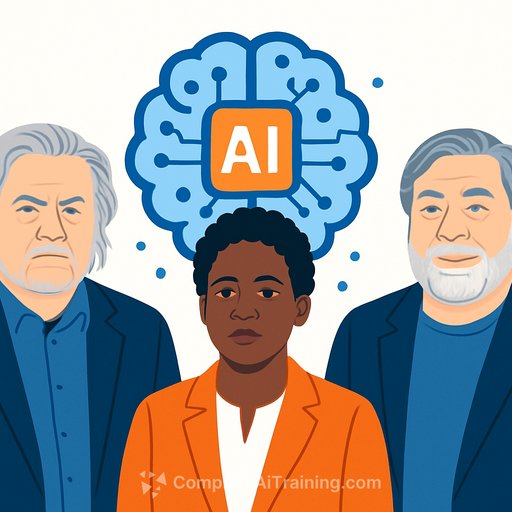

Bannon, Tech Icons Back Call to Ban Superintelligent AI: What It Means for IT and Dev Teams

A surprising alliance of right-wing media figures and leading technologists is urging a ban on developing superintelligent AI. The statement, coordinated by the Future of Life Institute (FLI), argues that capability is outpacing governance and that a pause is needed until safety and accountability are proven.

Signatories include Steve Bannon and Glenn Beck alongside AI pioneers Geoffrey Hinton and Yoshua Bengio, as well as Apple co-founder Steve Wozniak, Virgin founder Richard Branson, and former Irish President Mary Robinson. FLI, founded in 2014 with early support from Elon Musk and Jaan Tallinn, framed the appeal as a moral line: halt progress beyond human control "until the public demands it and science finds a safe path forward."

Why this matters now

This is bigger than politics. The coalition highlights a shared concern across ideologies: superintelligent systems could create risks we can't contain. Meanwhile, U.S. officials and industry voices warn that bans could undercut innovation and competitiveness, favoring targeted regulation instead.

Policy debates are heating up under the Trump administration, where several officials have close ties to Silicon Valley. Expect more pressure on companies building large models, and more scrutiny from boards, CISOs, and regulators on how AI is developed, deployed, and monitored.

Signals from each camp

- FLI: Calls for a pause on systems that exceed human control until safety is verifiable and democratically accepted.

- AI pioneers and tech leaders (Hinton, Bengio, Wozniak): Warn that superintelligence may be uncontrollable if pushed too far, too fast.

- Right-wing figures (Bannon, Beck): Tie AI risk to national security and skepticism of big tech influence.

- AI industry and U.S. officials: Prefer regulation over prohibition, arguing for guardrails that preserve innovation.

Practical to-dos for engineering and IT

Regardless of where policy lands, teams can reduce risk now. Treat this as a capability and safety program, not a PR exercise.

- Gate high-risk capabilities: Run structured evals for autonomy, code execution, bio/chem assistance, and deception. Require human approval for risky tools or actions.

- Compute governance: Track training and inference budgets; flag runs above defined thresholds for review. Keep immutable logs.

- Sandbox tool use: Isolate model tools in containers, restrict filesystem and network, use ephemeral credentials, and enforce rate limits.

- Least-privilege by default: Separate experimentation environments from production. Block raw internet access and system calls unless they go through controlled, audited proxies.

- Red-team and adversarial testing: Continuously probe for jailbreaks, data exfiltration, prompt injection, and covert channel behavior. Patch and retest.

- Model change management: Keep model cards with capabilities, limits, and eval results. Require approvals to promote models across stages.

- Incident response for AI: Define triggers, a kill switch, rollback plans, and post-mortems for model misbehavior or misuse.

- Vendor due diligence: Ask for evals, safety reports, and audit attestations. Add contractual terms for misuse response and model withdrawal.

- Data hygiene: Minimize PII, track provenance, and honor opt-outs. Strip sensitive data before training or retrieval indexing.

- Governance and training: Stand up an AI review board with authority to block launches. Train developers and SREs on safety controls and threat models.
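To make the gating, sandboxing, and least-privilege items concrete, here is a minimal Python sketch of a deny-by-default gate that sits between a model and its tools. The tool names, the `ToolPolicy` structure, and the `approve` callback are hypothetical; in a real deployment the approval step would route to a ticketing or chat workflow, and the gate would run alongside container and network isolation, not instead of it.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ToolPolicy:
    # Hypothetical policy object; tool names used below are illustrative only.
    allowed: set[str]          # tools the model may call at all (deny by default)
    needs_approval: set[str]   # subset that also requires a human sign-off
    kill_switch: bool = False  # when True, every tool call is refused


@dataclass
class Decision:
    allowed: bool
    reason: str


def gate_tool_call(policy: ToolPolicy, tool: str,
                   approve: Callable[[str], bool]) -> Decision:
    """Check a requested tool call against the policy before executing it."""
    if policy.kill_switch:
        return Decision(False, "kill switch engaged")
    if tool not in policy.allowed:
        return Decision(False, f"{tool!r} is not on the allowlist")
    # Only risky tools are escalated to the (hypothetical) human approver.
    if tool in policy.needs_approval and not approve(tool):
        return Decision(False, f"approver rejected {tool!r}")
    return Decision(True, "allowed")


# Example: searching docs is allowed outright; running code needs sign-off.
policy = ToolPolicy(allowed={"search_docs", "run_code"}, needs_approval={"run_code"})
print(gate_tool_call(policy, "run_code", approve=lambda tool: False))
```

Because unknown tools are refused rather than allowed, adding a new capability becomes an explicit, reviewable policy change, and the `kill_switch` flag gives incident responders a single place to halt every tool call.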
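For compute governance, a widely used rule of thumb estimates dense-transformer training compute as roughly 6 × parameters × training tokens FLOPs. The sketch below assumes that approximation and a review threshold of 1e25 FLOPs (the level at which the EU AI Act presumes systemic risk for general-purpose models; an internal threshold can sit much lower). It records every run in a hash-chained log. The chaining only makes later edits detectable; truly immutable logs still need write-once storage. The function and class names are hypothetical.

```python
import hashlib
import json

# Assumed review threshold in FLOPs; tune to your own governance policy.
REVIEW_THRESHOLD_FLOPS = 1e25


def estimate_training_flops(n_params: float, n_tokens: float) -> float:
    """Rule-of-thumb estimate for dense transformers: ~6 FLOPs per parameter per token."""
    return 6.0 * n_params * n_tokens


class HashChainedLog:
    """Append-only log; each entry hashes the previous entry's hash plus its own record,
    so editing any earlier record breaks verify() (tamper-evident, not tamper-proof)."""

    GENESIS = "0" * 64

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def _digest(self, prev: str, record: dict) -> str:
        body = json.dumps(record, sort_keys=True)
        return hashlib.sha256((prev + body).encode()).hexdigest()

    def append(self, record: dict) -> str:
        prev = self.entries[-1]["hash"] if self.entries else self.GENESIS
        digest = self._digest(prev, record)
        self.entries.append({"record": record, "prev": prev, "hash": digest})
        return digest

    def verify(self) -> bool:
        prev = self.GENESIS
        for entry in self.entries:
            if entry["prev"] != prev or entry["hash"] != self._digest(prev, entry["record"]):
                return False
            prev = entry["hash"]
        return True


def register_run(log: HashChainedLog, run_id: str,
                 n_params: float, n_tokens: float) -> bool:
    """Log the run's estimated compute and return True if it needs review."""
    flops = estimate_training_flops(n_params, n_tokens)
    needs_review = flops >= REVIEW_THRESHOLD_FLOPS
    log.append({"run_id": run_id, "est_flops": flops, "needs_review": needs_review})
    return needs_review


log = HashChainedLog()
print(register_run(log, "run-70b", 70e9, 15e12))  # False: ~6.3e24 FLOPs
```

Under these assumptions, a 70B-parameter model trained on 15T tokens lands at about 6.3e24 FLOPs and is logged without a flag, while a 400B-parameter model on the same data (about 3.6e25 FLOPs) is flagged for review.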
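Model change management can be enforced in code as well as in process. This sketch assumes a hypothetical three-stage pipeline (`dev`, `staging`, `prod`), made-up eval metric names, and illustrative score thresholds and approver roles. It refuses to promote a model unless every required eval is present and passing and every required role has signed off.

```python
from dataclasses import dataclass, field

STAGES = ["dev", "staging", "prod"]

# Hypothetical promotion rules per target stage: minimum eval scores and required approvers.
PROMOTION_POLICY = {
    "staging": {"min_scores": {"jailbreak_refusal": 0.90},
                "approvers": {"ml_lead"}},
    "prod": {"min_scores": {"jailbreak_refusal": 0.97, "prompt_injection_block": 0.95},
             "approvers": {"ml_lead", "security"}},
}


@dataclass
class ModelCard:
    name: str
    stage: str
    eval_results: dict[str, float]                   # metric name -> score in [0, 1]
    approvals: set[str] = field(default_factory=set)  # roles that have signed off


def promote(card: ModelCard) -> list[str]:
    """Try to move the model one stage forward; return blocking reasons (empty on success)."""
    idx = STAGES.index(card.stage)
    if idx == len(STAGES) - 1:
        return ["already in final stage"]
    target = STAGES[idx + 1]
    rules = PROMOTION_POLICY[target]
    problems = []
    for metric, minimum in rules["min_scores"].items():
        score = card.eval_results.get(metric)
        if score is None:
            problems.append(f"missing eval: {metric}")
        elif score < minimum:
            problems.append(f"{metric}={score:.2f} below {minimum:.2f}")
    missing = rules["approvers"] - card.approvals
    if missing:
        problems.append(f"missing approvals: {sorted(missing)}")
    if not problems:
        card.stage = target  # only advance when nothing blocks promotion
    return problems


card = ModelCard("demo-model", "dev", {"jailbreak_refusal": 0.95}, {"ml_lead"})
print(promote(card), card.stage)
```

Returning the list of blocking reasons, rather than a bare yes or no, leaves an audit trail the review board can use to see why a promotion was held back.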
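For data hygiene, a first line of defense is to redact obvious identifiers before text reaches a training set or retrieval index. The regular expressions below are a deliberately narrow sketch that covers common email formats and North American phone numbers. They will miss names, addresses, account numbers, and much else, so treat them as a placeholder for a dedicated PII-detection tool. `PII_PATTERNS` and `redact` are hypothetical names.

```python
import re

# Illustrative patterns only: common email formats and North American phone numbers.
PII_PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    # Lookarounds instead of \b so a leading "(" in "(555) 123-4567" is matched too.
    "PHONE": re.compile(r"(?<!\w)(?:\+1[-.\s]?)?(?:\(\d{3}\)|\d{3})[-.\s]?\d{3}[-.\s]\d{4}(?!\w)"),
}


def redact(text: str) -> tuple[str, dict[str, int]]:
    """Replace matches with typed placeholders and count how many of each were removed."""
    counts: dict[str, int] = {}
    for label, pattern in PII_PATTERNS.items():
        text, n = pattern.subn(f"[{label}]", text)
        counts[label] = n
    return text, counts


print(redact("Contact jane.doe@example.com or 555-123-4567."))
```

Typed placeholders such as `[EMAIL]` keep the surrounding text usable for retrieval, and the per-type counts can feed the provenance tracking recommended in the checklist.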

What to watch next

The FLI appeal could revive talk of moratoriums or "frontier model" pauses. Without government backing, its impact is symbolic for now, but symbols move roadmaps when boards and regulators pay attention.

Expect action in Washington, Brussels, and major tech hubs on topics like compute thresholds, incident reporting, eval standards, and export controls. The bigger question lingering over all of this: who sets the limits on systems that could surpass human oversight?

Resources

If your team needs structured upskilling on safe AI development and deployment, see our AI Certification for Coding.

With information from Reuters.
