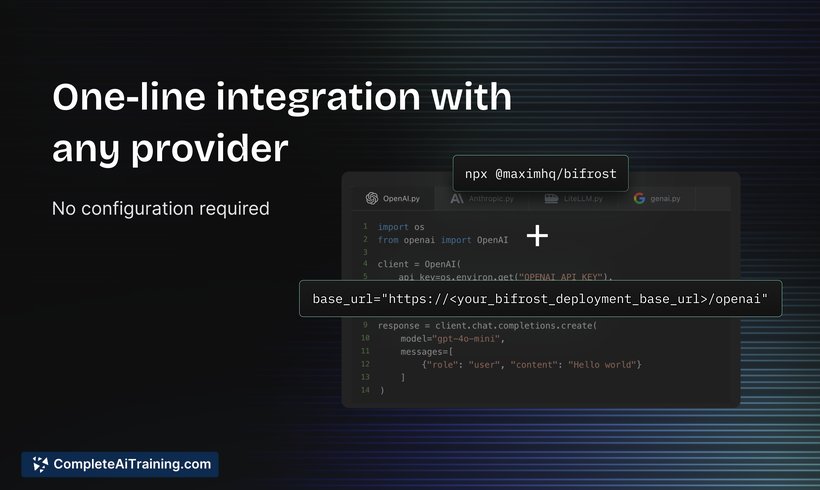

About Bifrost

Bifrost is an open-source large language model (LLM) gateway that provides fast, unified access to models from many providers. Built with a focus on performance and scalability, it exposes over a thousand models through a single API, making it a versatile tool for AI developers.
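
The review does not document Bifrost's request format. The following is only a minimal Go sketch of the general idea behind one API fronting many providers: a gateway reads a model identifier, works out which provider it belongs to, and forwards the call to that provider's endpoint. The `provider/model` naming, the `ParseModelRef` and `Route` helpers, and the `baseURLs` table are hypothetical illustrations, not Bifrost's actual API or configuration.

```go
package main

import (
	"fmt"
	"strings"
)

// ModelRef is a hypothetical representation of a "provider/model" identifier.
type ModelRef struct {
	Provider string
	Model    string
}

// ParseModelRef splits an identifier such as "openai/gpt-4o-mini" at the
// first slash. The "provider/model" convention is an assumption of this sketch.
func ParseModelRef(id string) (ModelRef, error) {
	provider, model, ok := strings.Cut(id, "/")
	if !ok || provider == "" || model == "" {
		return ModelRef{}, fmt.Errorf("invalid model id %q: want provider/model", id)
	}
	return ModelRef{Provider: provider, Model: model}, nil
}

// baseURLs is a hypothetical routing table from provider name to upstream base URL.
var baseURLs = map[string]string{
	"openai":    "https://api.openai.com/v1",
	"anthropic": "https://api.anthropic.com/v1",
}

// Route resolves the upstream base URL and parsed model for a request's model id.
func Route(id string) (string, ModelRef, error) {
	ref, err := ParseModelRef(id)
	if err != nil {
		return "", ModelRef{}, err
	}
	url, ok := baseURLs[ref.Provider]
	if !ok {
		return "", ModelRef{}, fmt.Errorf("unknown provider %q", ref.Provider)
	}
	return url, ref, nil
}

func main() {
	url, ref, err := Route("openai/gpt-4o-mini")
	fmt.Println(url, ref.Model, err)
}
```

Splitting at the first slash keeps model names that themselves contain slashes intact. A real gateway would also handle authentication, retries, and per-provider request translation.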

Review

Bifrost offers a streamlined and fast approach to connecting with multiple LLM providers, emphasizing speed and ease of use. Its architecture prioritizes lightweight design and extensibility, allowing developers to customize and extend functionality through plugins without sacrificing performance. This makes it a practical choice for teams looking for a reliable and scalable LLM gateway solution.
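
To illustrate what a plugin-based extension point can look like, here is a minimal Go sketch of a hook pipeline that runs plugins before and after the provider call. The `Plugin` interface, its `PreHook`/`PostHook` methods, and the `Pipeline` type are assumptions made for this example rather than Bifrost's documented plugin API. The provider call is a fake echo function.

```go
package main

import (
	"fmt"
	"strings"
)

// Request and Response are simplified stand-ins for gateway payloads.
type Request struct {
	Model  string
	Prompt string
}

type Response struct {
	Text string
}

// Plugin is a hypothetical interface: one hook before the provider call, one after.
type Plugin interface {
	Name() string
	PreHook(req *Request) error
	PostHook(resp *Response) error
}

// Pipeline runs pre-hooks in registration order, calls the provider,
// then runs post-hooks in reverse order (middleware-style unwinding).
type Pipeline struct {
	plugins []Plugin
	call    func(Request) (Response, error)
}

func (p *Pipeline) Do(req Request) (Response, error) {
	for _, pl := range p.plugins {
		if err := pl.PreHook(&req); err != nil {
			return Response{}, fmt.Errorf("%s pre-hook: %w", pl.Name(), err)
		}
	}
	resp, err := p.call(req)
	if err != nil {
		return Response{}, err
	}
	for i := len(p.plugins) - 1; i >= 0; i-- {
		if err := p.plugins[i].PostHook(&resp); err != nil {
			return Response{}, fmt.Errorf("%s post-hook: %w", p.plugins[i].Name(), err)
		}
	}
	return resp, nil
}

// redactor is an example plugin that masks a word in prompts and responses.
type redactor struct{ word string }

func (r redactor) Name() string { return "redactor" }

func (r redactor) PreHook(req *Request) error {
	req.Prompt = strings.ReplaceAll(req.Prompt, r.word, "[redacted]")
	return nil
}

func (r redactor) PostHook(resp *Response) error {
	resp.Text = strings.ReplaceAll(resp.Text, r.word, "[redacted]")
	return nil
}

func main() {
	p := &Pipeline{
		plugins: []Plugin{redactor{word: "secret"}},
		// Fake provider that echoes the prompt; stands in for a real LLM call.
		call: func(r Request) (Response, error) { return Response{Text: "echo: " + r.Prompt}, nil },
	}
	resp, err := p.Do(Request{Model: "openai/gpt-4o-mini", Prompt: "my secret plan"})
	fmt.Println(resp.Text, err)
}
```

Running post-hooks in reverse order, as this sketch does, lets each plugin unwind around the ones registered after it, much like HTTP middleware. The review does not say whether Bifrost orders its hooks the same way.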

Key Features

- Fast setup and operation: under 30 seconds to get started and minimal overhead at high request rates.

- Plugin-first architecture that simplifies the addition of custom features without complex callback structures.

- Built-in support for the Model Context Protocol (MCP), enabling integration and execution of external tools.

- Governance controls for multi-team environments, including API key rotation and weighted distribution of traffic across keys.

- HTTP transport support, with gRPC support planned.
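
Weighted distribution across API keys can be pictured as weighted random selection. The Go sketch below assumes each key carries a relative weight and picks one by mapping a uniform random number onto the cumulative weights. The `APIKey` type and `pickWeighted` function are hypothetical and do not reflect Bifrost's configuration format.

```go
package main

import (
	"errors"
	"fmt"
	"math/rand"
)

// APIKey pairs a provider key ID with a relative traffic weight (hypothetical shape).
type APIKey struct {
	ID     string
	Weight float64
}

// pickWeighted maps u in [0,1) onto the cumulative positive weights and returns
// the key whose interval contains it. Keys with weight <= 0 are never chosen.
func pickWeighted(keys []APIKey, u float64) (APIKey, error) {
	total := 0.0
	for _, k := range keys {
		if k.Weight > 0 {
			total += k.Weight
		}
	}
	if total == 0 {
		return APIKey{}, errors.New("no key with positive weight")
	}
	target := u * total
	var last APIKey
	for _, k := range keys {
		if k.Weight <= 0 {
			continue
		}
		last = k
		if target < k.Weight {
			return k, nil
		}
		target -= k.Weight
	}
	// Floating-point rounding can leave a tiny remainder; fall back to the last eligible key.
	return last, nil
}

func main() {
	keys := []APIKey{{ID: "key-a", Weight: 0.7}, {ID: "key-b", Weight: 0.3}}
	rng := rand.New(rand.NewSource(1))
	counts := map[string]int{}
	for i := 0; i < 10000; i++ {
		k, _ := pickWeighted(keys, rng.Float64())
		counts[k.ID]++
	}
	// The counts should land close to a 70/30 split.
	fmt.Println(counts)
}
```

Passing the random value in as a parameter keeps the selection logic deterministic and easy to test. In this model, rotating keys amounts to updating the slice of keys without touching the selection code.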

Pricing and Value

Bifrost is offered as a free, fully open-source tool, which makes it accessible to developers and organizations of all sizes. Its open-source nature encourages community contributions and transparency, and optional integration with additional platforms extends it toward end-to-end AI lifecycle management. This combination of no-cost access and high performance offers strong value for teams that need a scalable LLM gateway without licensing fees.

Pros

- Fast performance, with low latency under heavy load.

- Open-source and free to use, enabling customization and community support.

- Clean and user-friendly interface designed for production use.

- Robust governance features that help manage API usage effectively across teams.

- Flexible plugin system allowing easy extension and customization.

Cons

- Some features, such as gRPC support, are still in development, which limits current transport options.

- Getting the most out of its Go-based architecture and plugin system requires some technical knowledge.

- No built-in advanced analytics or AI model evaluation tools; these require integration with other platforms.

Overall, Bifrost is well-suited for developers and AI teams seeking a fast, lightweight gateway to manage and route requests to multiple LLM providers. It works best for organizations that value open-source solutions and require a balance between speed, governance, and extensibility. Smaller teams or those starting out with AI integrations will appreciate the quick setup, while larger enterprises can benefit from its scalable architecture and governance controls.

