About LyzrGPT

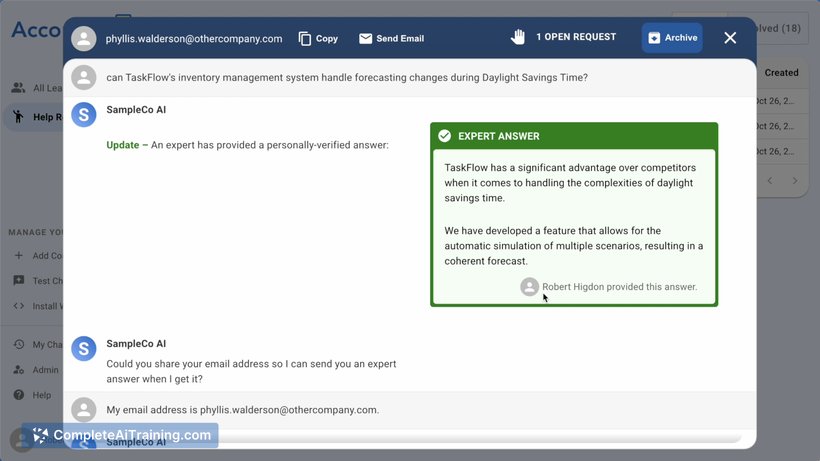

LyzrGPT is a private, enterprise-grade AI chat platform built for security-first teams. It runs inside an organization's own ecosystem so data can remain under the company's control, and it supports switching between multiple AI models within the same conversation.

Review

LyzrGPT targets organizations that need AI chat capabilities without exposing sensitive data to external vendors. The platform emphasizes secure contextual memory, model flexibility, and deployment options that fit enterprise and regulated environments.

Key Features

- Private deployment inside an organization's ecosystem to keep data under customer control.

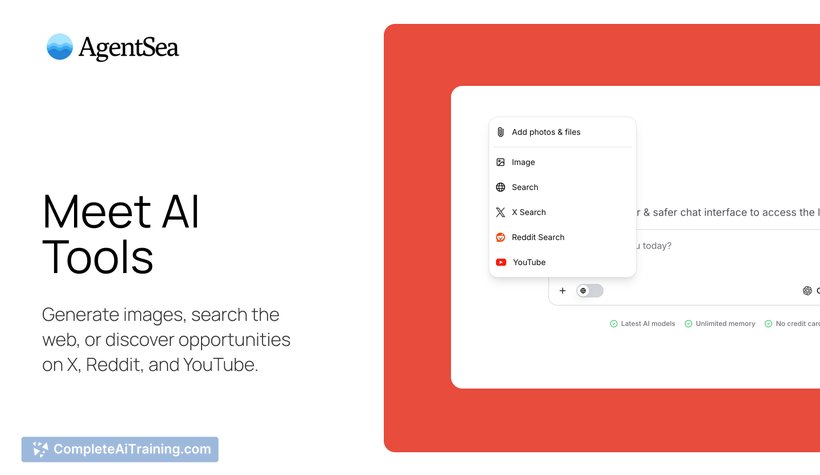

- Model-agnostic switching that lets users alternate between providers (for example, OpenAI and Anthropic) within a single conversation.

- Secure, persistent contextual memory across sessions so conversations can retain relevant history.

- Controls over what contextual data is sent to an external model during inference, with options to limit scope and volume.

- Focus on enterprise and regulated-industry needs, including compliance and access controls.
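The model switching and context controls described above can be illustrated with a small sketch. This is not LyzrGPT's API, which the source does not document. The names `Conversation`, `limit_context`, `make_stub`, `provider_a`, and `provider_b` are hypothetical, and the providers are local stubs rather than real OpenAI or Anthropic clients. The sketch assumes that "limiting scope and volume" means capping both the number of recent messages and the total characters sent per request.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Message = Dict[str, str]  # {"role": ..., "content": ...}
Provider = Callable[[List[Message]], str]


def limit_context(history: List[Message], max_messages: int, max_chars: int) -> List[Message]:
    """Return the most recent messages that fit both limits, oldest first."""
    recent = history[-max_messages:] if max_messages > 0 else []
    selected: List[Message] = []
    used = 0
    # Walk from newest to oldest so the latest turns are kept first.
    for msg in reversed(recent):
        size = len(msg["content"])
        if used + size > max_chars:
            break
        selected.append(msg)
        used += size
    selected.reverse()
    return selected


@dataclass
class Conversation:
    """Hypothetical conversation that shares one history across providers."""
    providers: Dict[str, Provider]
    max_messages: int = 4
    max_chars: int = 200
    history: List[Message] = field(default_factory=list)

    def ask(self, provider: str, prompt: str) -> str:
        self.history.append({"role": "user", "content": prompt})
        # Only the trimmed context leaves the conversation store.
        context = limit_context(self.history, self.max_messages, self.max_chars)
        reply = self.providers[provider](context)
        self.history.append({"role": "assistant", "content": reply})
        return reply


def make_stub(name: str) -> Provider:
    """Stand-in for a real model client; reports how much context it received."""
    def stub(context: List[Message]) -> str:
        return f"{name} saw {len(context)} message(s)"
    return stub


chat = Conversation(
    providers={"provider_a": make_stub("A"), "provider_b": make_stub("B")},
    max_messages=3,
)
first = chat.ask("provider_a", "Summarize the Q3 report.")
second = chat.ask("provider_b", "Now list the risks.")
```

In this sketch the second provider receives the earlier turn because the history is stored once and trimmed per request, so switching providers does not reset the conversation. A real deployment would likely filter by content sensitivity as well as size, which this example does not attempt.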

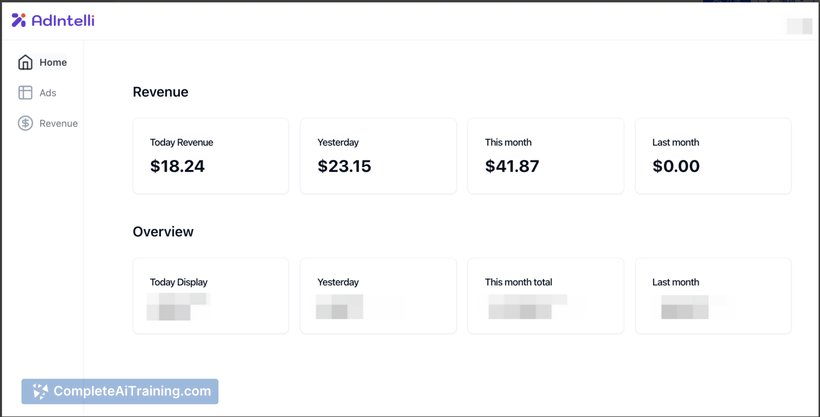

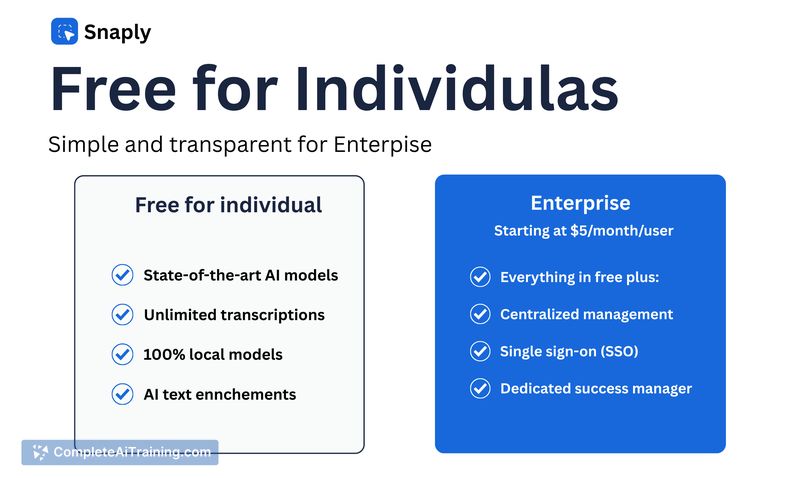

Pricing and Value

Public pricing details are limited: the product page mentions free options but focuses on enterprise offerings. The stated value lies in reduced effort compared with building a complete in-house stack, centralized management of model selection, and keeping original files within corporate infrastructure. Prospective customers should contact the vendor for pricing and deployment plans tailored to their infrastructure and compliance requirements.

Pros

- Strong emphasis on data privacy by enabling deployment inside a customer-controlled environment.

- Ability to switch models in-conversation reduces vendor lock-in and allows selecting the best model for each task.

- Persistent contextual memory improves continuity across sessions and reduces repetitive context entry.

- Explicit controls over what contextual text is sent for inference, which helps meet security and compliance requirements.

- Designed with enterprise and regulated-industry workflows in mind, which may shorten time-to-value versus building a custom solution.

Cons

- Deployment complexity can vary depending on an organization's infrastructure and compliance needs; rollout may require coordination with internal teams.

- If external LLMs are used, the selected contextual text is still sent to the provider for inference; highly security-conscious organizations may prefer to avoid this by self-hosting models.

- Some product information, such as detailed pricing and onboarding timelines, is not public and can only be obtained by contacting the vendor.

Overall, LyzrGPT is best suited for security-conscious enterprises and regulated organizations that need AI chat while keeping data under their control and avoiding vendor lock-in. Teams evaluating internal AI chat options will find the model-agnostic approach and persistent memory useful, but should confirm deployment and pricing details with the vendor before committing.
