About Ollama v0.7

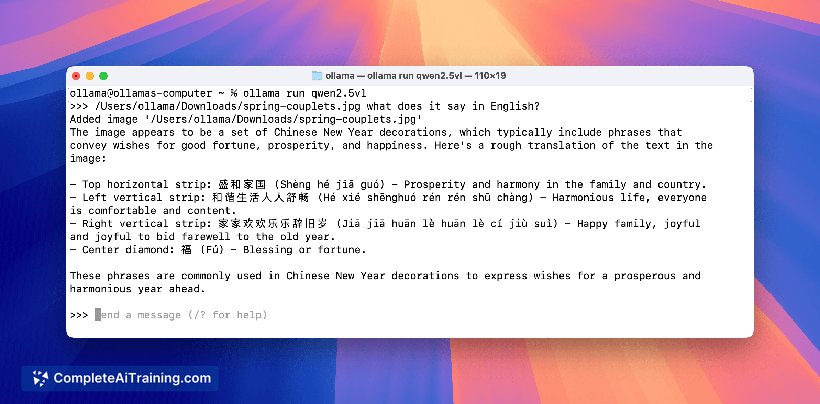

Ollama v0.7 is a release of Ollama, a tool for running large language models (LLMs) on your own machine, that introduces a new engine for multimodal models, starting with vision. The release focuses on improved reliability, accuracy, and memory management for these models. It supports vision-capable models such as Llama 4 and Gemma 3, so users can run image-understanding workloads locally instead of sending data to a cloud service.

Review

Ollama v0.7 marks a significant improvement in the platform’s ability to handle vision-based AI models locally. The introduction of a new engine enhances performance and stability, making it a practical choice for users interested in exploring multimodal AI applications without relying on cloud services. It also simplifies the integration of new models, setting a foundation for future expansions beyond vision.

Key Features

- Native support for leading vision models such as Meta’s Llama 4, Google’s Gemma 3, and Qwen 2.5 VL.

- Improved memory management to efficiently run complex large language models locally.

- Enhanced reliability and accuracy for multimodal AI tasks.

- New engine architecture that simplifies adding and running new AI models.

- Future-ready framework that aims to support additional modalities like speech, image generation, and video.
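
To make the vision support above concrete, here is a minimal sketch of sending an image to a locally running Ollama server through its REST API. It assumes the server is listening on the default address `http://localhost:11434`, that a vision-capable model (here the `gemma3` tag) has already been pulled, and that `photo.jpg` is a hypothetical local file. The helper names `build_vision_request` and `describe_image` are illustrative and not part of Ollama.

```python
import base64
import json
import urllib.request

# Assumption: default address of a locally running Ollama server.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_vision_request(model: str, prompt: str, image_bytes: bytes) -> dict:
    """Build the JSON body for a single, non-streaming vision prompt."""
    return {
        "model": model,
        "prompt": prompt,
        # Images are passed as a list of base64-encoded strings.
        "images": [base64.b64encode(image_bytes).decode("ascii")],
        # Ask for one complete JSON reply instead of a token stream.
        "stream": False,
    }


def describe_image(model: str, prompt: str, image_path: str) -> str:
    """Send an image to the local server and return the model's text reply."""
    with open(image_path, "rb") as f:
        body = build_vision_request(model, prompt, f.read())
    req = urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())["response"]
```

With the server running, a call such as `describe_image("gemma3", "What is in this picture?", "photo.jpg")` would return the model's description as a string. The image never leaves the machine, which is the main appeal of running vision models locally.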

Pricing and Value

Ollama is open source software released under the MIT license, and v0.7 is free to download and run locally. The real cost is hardware: running advanced models on a personal machine requires enough RAM or GPU memory for the model in question. For users who already have suitable hardware, this offers strong value, since it avoids ongoing cloud costs and keeps data under their own control. That makes it particularly appealing for developers, researchers, and AI enthusiasts.

Pros

- Enables local execution of powerful vision models, reducing dependence on cloud services.

- Supports multiple popular AI models with native integration.

- Improved stability and memory efficiency compared to previous versions.

- Forward-looking design that prepares for additional AI modalities.

- Open source codebase encourages community involvement and transparency.

Cons

- Performance depends heavily on the user’s hardware capabilities, limiting accessibility for lower-end machines.

- Although the software itself is free, larger vision models need substantial RAM or GPU memory, so the practical cost is the hardware.

- Currently focused mainly on vision models; other modalities are planned but not yet available.

Ollama v0.7 is well suited to users who need local AI processing for vision tasks and want to keep control of their data. It is a good fit for developers, AI researchers, and privacy-conscious individuals who have hardware capable of running demanding models. Those who need a broader range of multimodal features may want to watch for future releases that expand beyond vision.
