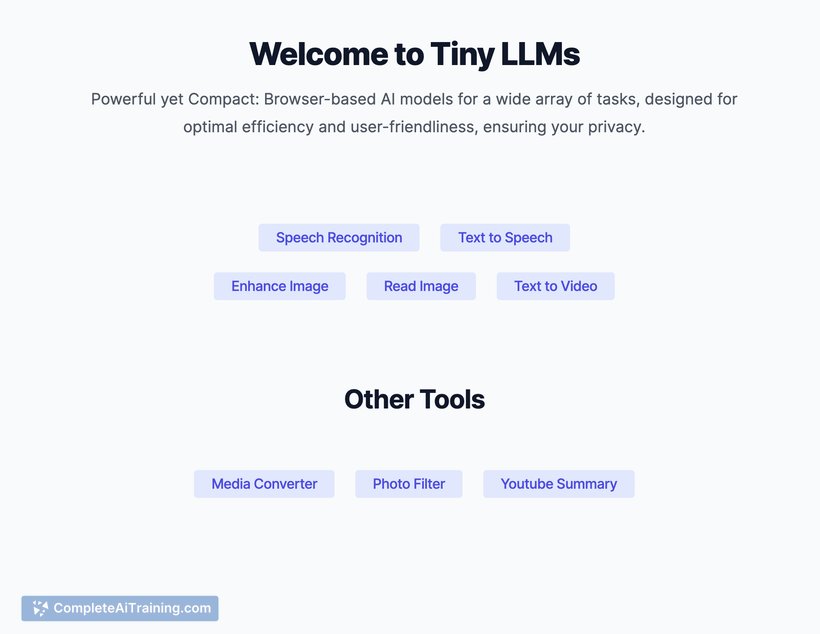

About Tiny LLMs

Tiny LLMs is an AI tool focused on providing lightweight large language models that can run efficiently on limited hardware resources. It aims to offer accessible natural language processing capabilities without the need for extensive computational power.

Review

Tiny LLMs offers a practical solution for users who need language model functionality on limited hardware. The tool balances performance against resource consumption, making it a convenient option for developers and hobbyists seeking compact AI models. Its small size and ease of deployment are its main attractions.

Key Features

- Lightweight language models optimized for low-memory devices

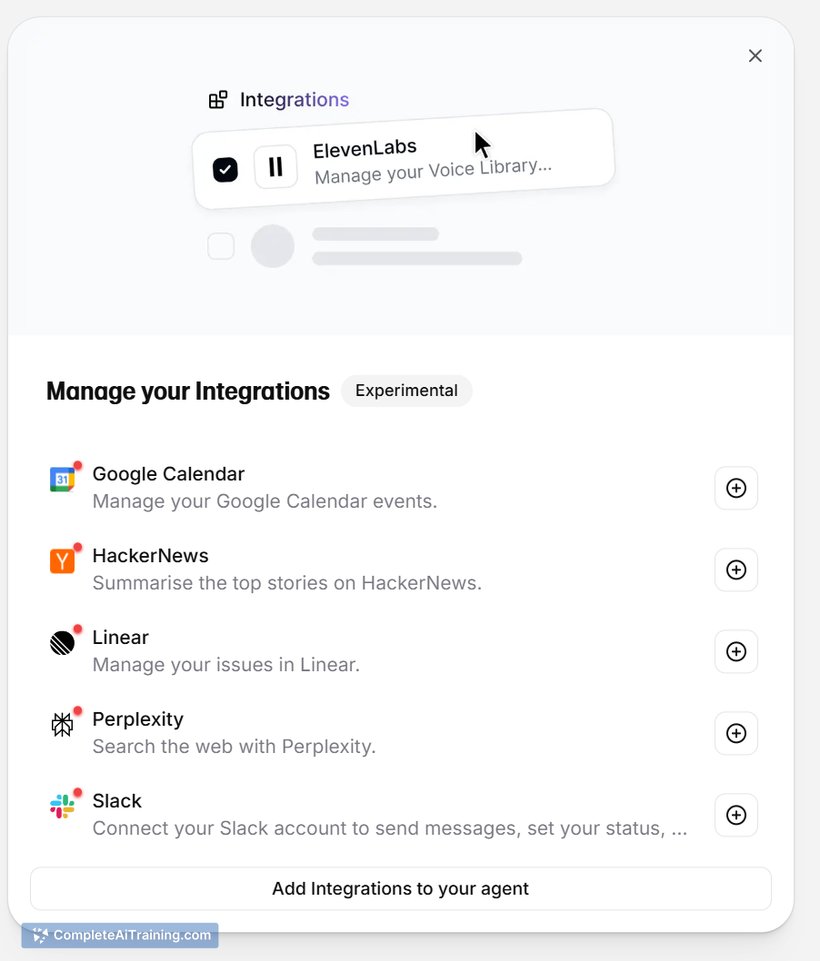

- Compatibility with common machine learning frameworks for easy integration

- Pre-trained models available for quick setup and experimentation

- Support for fine-tuning on custom datasets to improve task-specific performance

- Open-source availability, encouraging community contributions and transparency
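As a rough illustration of why model size matters on low-memory devices, the sketch below estimates the memory needed just to hold a model's weights at different numeric precisions. It is not taken from Tiny LLMs itself: the function name, the precision table, and the 100-million-parameter example are assumptions chosen for illustration, and real memory use will be higher once activations and runtime overhead are included.

```python
# Bytes needed to store one parameter at common weight precisions.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_mib(num_params: int, precision: str) -> float:
    """Approximate memory for the model weights alone, in MiB.

    Ignores activations, caches, and framework overhead.
    """
    return num_params * BYTES_PER_PARAM[precision] / (1024 ** 2)


if __name__ == "__main__":
    # Hypothetical model size, not a figure published for Tiny LLMs.
    example_params = 100_000_000
    for precision in BYTES_PER_PARAM:
        size = weight_memory_mib(example_params, precision)
        print(f"{precision}: {size:.1f} MiB")
```

Comparing the printed figures against a device's available RAM gives a first, optimistic check of whether a model of a given size could fit at all.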

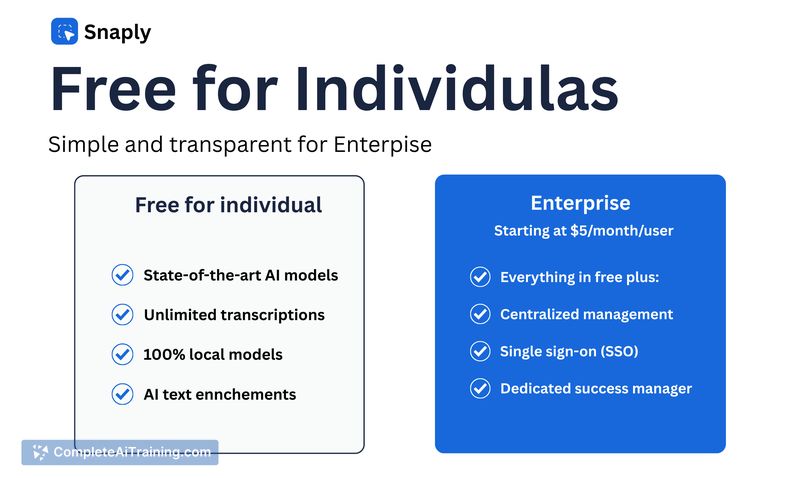

Pricing and Value

The tool is primarily open-source, which allows users to access its capabilities without upfront costs. This approach offers significant value for individuals and organizations looking to experiment with language models without investing in expensive hardware or software licenses. Optional paid services or enterprise support, if available, could further enhance its usability for professional environments.

Pros

- Efficient performance on devices with limited computational resources

- Easy to deploy and integrate with existing projects

- Open-source nature fosters customization and community support

- Pre-trained models reduce the time needed to start using the tool

- Flexibility to fine-tune models for specific tasks

Cons

- Smaller model size may limit accuracy compared to larger language models

- May require technical knowledge to optimize fine-tuning and deployment

- Less suitable for highly complex or large-scale language tasks

Tiny LLMs is well suited to developers, researchers, and enthusiasts who need accessible language model capabilities on constrained hardware. It is a good fit for experimentation, prototyping, and applications where resource efficiency is a priority. Users who need high accuracy or large-scale deployments will likely find it less suitable, though its ease of use and customization options may still be useful to them.

