N8N AI Agent Nodes: Practical Workflow Automation and Memory Guide (Video Course)

Transform your automation skills with N8N AI Agent nodes: create bots that remember conversations, access company knowledge, and act with real intelligence. Learn practical setups for support, sales, and onboarding, all with minimal code.

Related Certification: Certification in Building Automated AI Workflows and Knowledge Bases with N8N


What You Will Learn

- Build agentic workflows with the n8n AI Agent node

- Implement persistent memory using Supabase/Postgres and dynamic Session IDs

- Set up a vector knowledge base (RAG) with chunking and metadata filtering

- Wire chat models, Chat Message Trigger, and action/knowledge tools

- Test, tune, and scale agents while avoiding common pitfalls

Study Guide

Introduction: Why Mastering N8N AI Agent Nodes Matters

If you want to build real-world, intelligent automation, you need more than basic drag-and-drop workflows. You need to know how to give your bots memory, access to knowledge, and the ability to understand and act just like a human assistant. That’s where N8N’s AI Agent nodes come in. This course will walk you step-by-step through every essential concept, setup, and advanced technique for building business-ready AI agents in N8N.

Example:

Imagine you want to automate customer support in your SaaS company. Instead of a simple FAQ bot, you want an agent that remembers past conversations, pulls personalized answers from your internal documents, and extracts important info for follow-up, all with almost no code.

Example:

Or say you run an agency and need to offer tailored chatbots for each client, each with access to that client’s document repositories and seamless integration with their CRM. N8N’s AI Agent nodes make this possible, but only if you understand how memory, knowledge bases, and tool connections really work.

This course is for business people, agency owners, and technical implementers who want to get past “shiny object syndrome” and focus on the proven, practical solutions that work in 95% of real-world use cases. You’ll learn why the AI Agent node is at the core of modern N8N workflows, how to wire it up for memory and knowledge, and how to avoid the common pitfalls that trip up most newcomers. Let’s get started.

Understanding the N8N AI Agent Node: The Foundation

At its heart, the N8N AI Agent node is a special kind of workflow block designed to simulate an intelligent digital agent. It’s what lets your workflow “think” like a chatbot, support rep, or smart assistant. What makes it different from simply calling OpenAI or another LLM? The AI Agent node is built to maintain a conversation, use memory, and interact with tools, just like a real agent.

Example:

A basic customer support chatbot that uses the AI Agent node can remember previous interactions, pull up relevant FAQs, and escalate issues if needed, all in one flow.

Example:

An onboarding bot for new employees can walk through a checklist, remember completed steps, and retrieve specific policy documents upon request.

Key Concept: The AI Agent node’s power comes from its three main connections: chat model, memory, and tools. Each one unlocks a new level of capability. Most problems (or failures) come from not wiring these up correctly.

Best Practice: Focus on the core agentic framework, and don’t get distracted by dozens of niche nodes or features. Master the AI Agent node deeply and you’ll unlock 80% of what’s possible in N8N AI automation.

Triggering Your Agent: The Chat Message Trigger Node

Your agent doesn’t act on its own; it needs to be triggered. The most common and business-ready way to kick off an N8N AI conversation is with the Chat Message Trigger node. This node starts a workflow whenever a message is sent in the N8N chat interface.

Example:

A user types a question in your web-app’s chat, which passes through the Chat Message Trigger node and starts the AI Agent workflow.

Example:

A sales rep clicks a button in your CRM to “ask the assistant,” sending a message that triggers a new agent session.

Best Practice: Use the Chat Message Trigger for direct, interactive bots. For messages that originate outside N8N (like your website or another app), use a Webhook node or a custom integration as the trigger instead; either way, every agent workflow should begin with a clearly defined trigger node.

Chat Models: Choosing Your Agent’s “Brain”

Every AI Agent needs a model to generate text: the “brain” behind the assistant. In N8N, you connect a chat model node to the AI Agent, typically using an OpenAI model (like GPT-3.5 or GPT-4), Anthropic’s Claude, or another LLM.

Example:

Connect OpenAI’s GPT-4 as your model for high-quality customer support.

Example:

Use OpenRouter for flexibility, enabling access to multiple provider models through a single interface.

Best Practice: For most business uses, stick with proven models such as OpenAI’s GPT models or Anthropic’s Claude. Explore other models only if you hit limits (cost, performance, privacy).

Tip: Use the AI Agent node’s system message to define your agent’s persona (“You are a helpful assistant for ACME Corp customers”), and set the temperature on the chat model node for the right mix of creativity and accuracy (lower for support, higher for brainstorming).
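To see where the persona and temperature end up, here is a minimal Python sketch of the request body an agent sends to a chat model. It assumes OpenAI’s Chat Completions message format (a list of `role`/`content` messages plus a `temperature` field); the `build_chat_request` helper, the model name, and the persona text are illustrative, and N8N assembles the real request for you.

```python
# Sketch of a chat request body in OpenAI's Chat Completions format.
# The persona, model name, and helper function are illustrative assumptions.

SYSTEM_PROMPT = "You are a helpful assistant for ACME Corp customers."

def build_chat_request(history, user_message, temperature=0.2, model="gpt-4"):
    """Combine persona, earlier turns, and the new message into one request body."""
    if not 0.0 <= temperature <= 2.0:
        raise ValueError("temperature must be between 0 and 2")
    messages = [{"role": "system", "content": SYSTEM_PROMPT}]
    messages.extend(history)  # earlier turns, as supplied by the memory node
    messages.append({"role": "user", "content": user_message})
    return {"model": model, "temperature": temperature, "messages": messages}

# Lower temperature for factual support, higher for brainstorming.
support = build_chat_request([], "How do I reset my password?", temperature=0.2)
ideas = build_chat_request([], "Suggest five webinar titles.", temperature=0.9)
print(support["messages"][0]["role"], support["temperature"])  # system 0.2
```

The persona always sits first, so it frames every turn; memory only adds the turns in between.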

Memory: Giving Your Agent Context and Recall

Memory is what separates a dumb chatbot from a true assistant. Without it, every user message is treated as the first: there’s no conversation, personalization, or continuity. In N8N, memory is handled by connecting a memory node to your AI Agent.

Example:

A support agent can recall the user’s previous ticket numbers, preferences, and issues across sessions.

Example:

A sales bot can pick up a conversation where it left off, even if the user returns days later.

Types of Memory in N8N:

- Simple Memory: Keeps conversation history inside the running N8N instance rather than in a database. Good for quick tests and demos, but not durable or scalable.

- Postgres/Supabase Memory: Stores conversation history in a Postgres database (such as one hosted on Supabase), using a dynamic Session ID to tie conversations to specific users or sessions. This is the recommended approach for real-world agents.

Why Use Postgres/Supabase? Postgres is robust and widely supported, Supabase hosts it in the cloud, and together they can scale to many users.

Example:

Your agent is integrated with a CRM, and each user has a unique Contact ID. By passing this ID as the Session ID, you ensure all memory is tied to the right user, even if they chat from different devices.

Why Session IDs Matter: Without a dynamic identifier, all conversations blend together or overwrite each other. Always use something unique (like an email, user ID, or CRM contact key) as your Session ID.

How to Set Up Memory:

- Set up a Supabase (cloud Postgres) account and create a project, which comes with its own Postgres database.

- Connect the memory node in N8N to your Supabase database using its Postgres connection credentials, and choose the table where chat history should be stored.

- Pass the dynamic Session ID from your trigger or upstream workflow (e.g., from a CRM link) to the memory node.

- Configure the context window length: how many recent messages are loaded back into the prompt on each turn. Too short, and your agent feels forgetful; too long, and you waste tokens or risk confusion.
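The memory setup above boils down to a table of messages keyed by Session ID, plus a query for the most recent ones. This Python sketch uses SQLite as a stand-in for Postgres/Supabase; the `chat_history` table, its columns, and the helper names are hypothetical rather than the schema N8N creates, and the window here counts individual messages for simplicity.

```python
import sqlite3

# Sketch of session-keyed chat memory. SQLite stands in for Postgres/Supabase;
# the "chat_history" table and its columns are hypothetical, not N8N's schema.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE chat_history ("
    " id INTEGER PRIMARY KEY AUTOINCREMENT,"
    " session_id TEXT NOT NULL,"
    " role TEXT NOT NULL,"
    " content TEXT NOT NULL)"
)

def save_message(session_id, role, content):
    """Append one message to a session's history."""
    conn.execute(
        "INSERT INTO chat_history (session_id, role, content) VALUES (?, ?, ?)",
        (session_id, role, content),
    )

def load_context(session_id, window=10):
    """Return the last `window` messages of one session, oldest first."""
    rows = conn.execute(
        "SELECT role, content FROM chat_history WHERE session_id = ? "
        "ORDER BY id DESC LIMIT ?",
        (session_id, window),
    ).fetchall()
    return [{"role": role, "content": content} for role, content in reversed(rows)]

save_message("contact-123", "user", "My order is late.")
save_message("contact-456", "user", "What are your prices?")
save_message("contact-123", "assistant", "Sorry about that. What is the order number?")
print(load_context("contact-123"))  # only contact-123's two messages
```

Because every query filters on `session_id`, one contact’s history never appears in another contact’s context, and the `window` argument is where the “too short versus too long” trade-off is tuned.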

Best Practice: For almost every business case, use Supabase/Postgres for memory, and always wire up a dynamic Session ID. Only use Simple Memory for quick experiments.

Knowledge Bases: Supercharging Your Agent with Company Intelligence (RAG)

If you want your agent to be more than just a chat model, you need a knowledge base: a place where it can “look up” facts, policies, or private documents. In N8N, this is done by connecting a Knowledge Base (Vector Store) tool to your AI Agent node.

Example:

A customer support bot that can answer questions about your product by retrieving relevant sections from your internal documentation.

Example:

An HR agent that can find and summarize specific policies or benefits info, even if scattered across multiple PDFs.

How It Works:

- Documents (PDFs, text files, wikis) are split into “chunks” using a Text Splitter, and each chunk is turned into a vector embedding.

- These vectors are stored in a vector database, commonly Supabase (Postgres with the pgvector extension).

- When a user query calls for it, the AI Agent uses the knowledge base tool, which embeds the query with the same embedding model and retrieves the top N most similar chunks from the vector store. The agent then uses those chunks as context when generating a response. This is called Retrieval-Augmented Generation (RAG).
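The retrieval step can be illustrated with a toy Python sketch. A bag-of-words vector stands in for a real embedding model (an assumption made so the example runs without any API), and `top_n` plays the role of the vector store’s similarity search; real embeddings capture meaning far better than shared words do.

```python
import math
from collections import Counter

# Toy RAG retrieval: bag-of-words counts stand in for real embeddings.

def embed(text):
    """Map text to a word-count vector (a stand-in for an embedding model)."""
    return Counter(word.strip(".,?!").lower() for word in text.split())

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def top_n(query, chunks, n=3):
    """Embed the query, rank chunks by similarity, and keep the best n."""
    q = embed(query)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:n]

chunks = [
    "Refunds are issued within 14 days of a return request.",
    "Our office is closed on public holidays.",
    "To request a refund, contact support with your order number.",
]
context = top_n("How do I get a refund?", chunks, n=2)
prompt = "Answer using only this context:\n" + "\n".join(context)
print(context[0])  # To request a refund, contact support with your order number.
```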

Metadata Filtering: One knowledge base can serve multiple agents or use cases by tagging each document chunk with metadata (e.g., “department: HR” or “client: ACME”).

Example:

You have a single knowledge base but want your sales agent to only retrieve documents tagged “sales,” while your support bot only sees “support.”

Example:

A client-facing agent can filter knowledge base responses by client ID, ensuring each user only accesses their own documents.
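Metadata filtering amounts to narrowing the candidate chunks by tag before similarity ranking. In this Python sketch the field names (`department`, `client`) and the sample chunks are hypothetical; in a real vector store the same filter is normally applied inside the database query rather than in application code.

```python
# Sketch of metadata filtering: keep only chunks whose tags match every requirement.
# Field names and sample data are hypothetical examples.

chunks = [
    {"text": "Parental leave is 16 weeks.", "metadata": {"department": "HR", "client": "ACME"}},
    {"text": "Q3 pricing tiers for ACME.", "metadata": {"department": "sales", "client": "ACME"}},
    {"text": "Globex onboarding checklist.", "metadata": {"department": "support", "client": "Globex"}},
]

def filter_by_metadata(chunks, required):
    """Keep chunks whose metadata matches every key/value pair in `required`."""
    return [
        c for c in chunks
        if all(c["metadata"].get(key) == value for key, value in required.items())
    ]

sales_view = filter_by_metadata(chunks, {"department": "sales"})  # sales agent
acme_view = filter_by_metadata(chunks, {"client": "ACME"})        # ACME's client-facing agent
print([c["text"] for c in sales_view])  # ['Q3 pricing tiers for ACME.']
```

Each agent gets the same knowledge base but a different `required` filter, which is what lets one store serve several agents safely.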

Tips for Setting Up a Knowledge Base:

- Upload your documents to Supabase, using a tool like Flowise or a custom script to split and embed the text.

- Use metadata fields to tag and filter chunks as needed.

- Set a reasonable limit on how many chunks the knowledge base tool returns to your AI Agent: too high, and you waste tokens and risk hallucinations; too low, and your agent may miss important info. Test and tune for your use case.
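A quick back-of-the-envelope calculation shows why the chunk limit matters. This Python sketch assumes an average of 500 tokens per chunk, 10,000 queries a month, and a placeholder price of $10 per million input tokens; substitute your own chunk sizes and your provider’s actual pricing.

```python
# Back-of-the-envelope cost of the chunk limit.
# 500 tokens/chunk and $10 per million input tokens are placeholder assumptions.

def retrieval_tokens(chunk_limit, avg_chunk_tokens=500):
    """Tokens added to every prompt by retrieved chunks."""
    return chunk_limit * avg_chunk_tokens

def monthly_cost(chunk_limit, queries_per_month, usd_per_million_tokens=10.0):
    """Monthly spend on retrieved context alone, in USD."""
    tokens = retrieval_tokens(chunk_limit) * queries_per_month
    return tokens / 1_000_000 * usd_per_million_tokens

for limit in (4, 20):
    print(limit, retrieval_tokens(limit), round(monthly_cost(limit, 10_000), 2))
# 4 2000 200.0
# 20 10000 1000.0
```

Under these assumptions, going from 4 to 20 chunks multiplies the retrieval cost by five, before counting any loss in answer quality.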

Best Practice: Use a single knowledge base with strong metadata filtering. This keeps your architecture simple and flexible.

Tools: Giving Your Agent Superpowers

Tools are external capabilities you can connect to your AI Agent node. The two main types in N8N are:

- Knowledge Base Tools: (as above) Let agents “search” company data, answer RAG questions, or summarize documents.

- Action Tools: Let agents interact with APIs, fetch info from SaaS platforms, or even trigger actions in other systems.

Example:

Your agent can look up invoice status in your accounting software, or create a new support ticket via API.

Example:

A chatbot for internal IT can check the status of a server, reset a password, or fetch a knowledge base article.

Best Practice: Start with knowledge base tools for Q&A and information retrieval. Add more action tools as your agent’s scope grows.
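Action tools follow a common pattern: each tool has a name, a description the model reads when deciding what to call, and a function the workflow executes. This Python sketch shows that dispatch step with two hypothetical stubs (`get_invoice_status`, `create_ticket`) and an assumed `{"name": ..., "arguments": {...}}` call format; N8N handles this wiring when you attach tool nodes, and real providers’ tool-call formats differ in detail.

```python
import json

# Sketch of action-tool dispatch. Both tools are hypothetical stubs, and the
# {"name": ..., "arguments": {...}} call format is an illustrative assumption.

def get_invoice_status(invoice_id):
    return {"invoice_id": invoice_id, "status": "paid"}  # stub for an accounting API

def create_ticket(subject):
    return {"ticket_id": "T-1001", "subject": subject}  # stub for a helpdesk API

TOOLS = {
    "get_invoice_status": {
        "description": "Look up the payment status of an invoice by ID.",
        "function": get_invoice_status,
    },
    "create_ticket": {
        "description": "Open a new support ticket with a short subject line.",
        "function": create_ticket,
    },
}

def run_tool_call(call_json):
    """Execute a model-emitted tool call and return its result."""
    call = json.loads(call_json)
    tool = TOOLS.get(call["name"])
    if tool is None:
        return {"error": f"unknown tool {call['name']}"}
    return tool["function"](**call["arguments"])

print(run_tool_call('{"name": "get_invoice_status", "arguments": {"invoice_id": "INV-42"}}'))
# {'invoice_id': 'INV-42', 'status': 'paid'}
```

Returning an error object for unknown tools, instead of raising, lets the model see the failure and recover in its next turn.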

Session IDs: The Secret to Multi-User, Multi-Channel Agents

Everything about memory and context hinges on using a proper Session ID. This unique identifier ensures each user’s conversation history is kept separate, even if multiple people are chatting with your agent at the same time.

Example:

You integrate with a CRM like HighLevel, where each contact has a unique URL containing an ID. Pass this ID to N8N as the Session ID.

Example:

A web app with user accounts can pass the user’s email or UUID as the identifier.

Common Mistake: Using a static or missing Session ID means all users share the same memory, confusing everyone and leaking data.

Best Practice: Always pass a dynamic, unique identifier from your front-end or integration layer into N8N, and configure your memory node to use it.
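Resolving the Session ID can happen in a small step before the memory node. This Python sketch shows one possible approach under stated assumptions: the CRM page URL carries the contact ID in a hypothetical `contact_id` query parameter, and a chat payload may carry a `sessionId` field. The sketch refuses to fall back to a shared default, since a constant ID is exactly the mistake described above.

```python
from urllib.parse import parse_qs, urlparse

# Sketch: pick a unique Session ID from the incoming payload.
# "page_url", "contact_id", and "sessionId" are assumed field names; check your own payloads.

def resolve_session_id(payload):
    """Prefer a CRM contact ID, then a chat session ID; never use a shared constant."""
    page_url = payload.get("page_url")
    if page_url:
        contact_ids = parse_qs(urlparse(page_url).query).get("contact_id")
        if contact_ids:
            return f"crm:{contact_ids[0]}"
    session_id = payload.get("sessionId")
    if session_id:
        return f"chat:{session_id}"
    raise ValueError("No unique identifier found; refusing to use a shared session")

print(resolve_session_id({"page_url": "https://crm.example.com/contacts?contact_id=abc123"}))
# crm:abc123
```

The `crm:` and `chat:` prefixes keep a CRM contact ID from ever colliding with an unrelated chat session ID.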

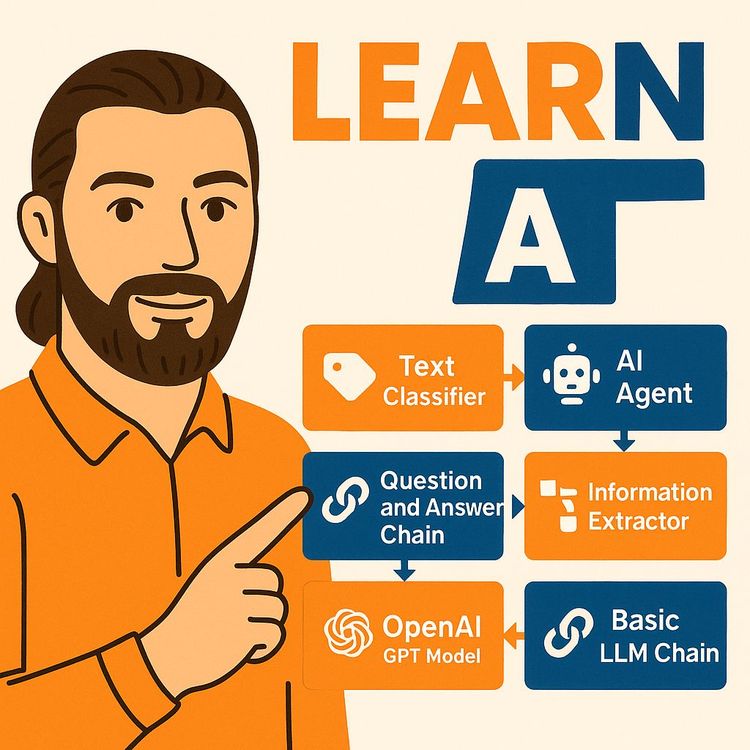

The Most Useful AI Nodes in N8N (And When to Use Each)

While there are many AI-related nodes in N8N, only a handful are worth mastering for most business scenarios. Here’s how to focus:

1. AI Agent Node: As already discussed, this is the core of any agentic workflow: memory, tools, context, and persona all in one.

Example:

A customer support assistant that uses memory and knowledge base for deep context.

Example:

A personalized onboarding bot that can answer questions about company policies and track progress.

2. OpenAI Node: This node is a general-purpose wrapper for sending text to OpenAI models. It’s great for simple completions or single-turn chat, but it lacks built-in memory.

Example:

Summarize a long email thread with a one-off OpenAI call.

Example:

Generate a creative blog post outline from a prompt.

Tip: Use the OpenAI node for simple generation tasks or when you need full control over prompts and output (e.g., structured JSON).

3. Information Extractor Node: This node is extremely useful for pulling structured data (like emails, account numbers, names) from messy text. It can also use conversation history as context.

Example:

Extract a customer’s account number from a chat transcript.

Example:

Pull email addresses, dates, or addresses from a support ticket.

Tip: Always provide both the latest user input and conversation history if the info you want to extract might have occurred earlier.
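Why the full history matters is easy to show with a small Python sketch. A regular expression stands in for the Information Extractor’s language model, and the `ACC-` plus six digits account format is invented for the example.

```python
import re

# Sketch: extracting a value that may have been stated in an earlier turn.
# The regex stands in for an LLM extractor; the account format is made up.

ACCOUNT_RE = re.compile(r"\bACC-\d{6}\b")

def extract_account_number(messages):
    """Search newest to oldest so the most recently stated value wins."""
    for message in reversed(messages):
        match = ACCOUNT_RE.search(message["content"])
        if match:
            return match.group(0)
    return None

history = [
    {"role": "user", "content": "Hi, my account is ACC-204518."},
    {"role": "assistant", "content": "Thanks! How can I help?"},
    {"role": "user", "content": "Why was I charged twice?"},
]
print(extract_account_number(history[-1:]))  # None (latest message only)
print(extract_account_number(history))       # ACC-204518
```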

4. Text Classifier Node: This node categorizes user input by intent or topic, enabling routing to the right workflow or agent.

Example:

Detect whether a user wants “product info,” “technical support,” or “billing question” and route accordingly.

Example:

Classify incoming messages as spam, urgent, or informational.

Tip: Use it for high-level routing at the start of a workflow, connecting each category to a different agent or pipeline.
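Routing on the classifier’s output is a simple lookup from category to destination. N8N’s Text Classifier uses a chat model to pick the category; in this Python sketch a keyword matcher stands in for it so the example runs offline, and all category and agent names are hypothetical.

```python
# Sketch of classifier-based routing. The keyword matcher is only a stand-in
# for an LLM classifier; categories and agent names are illustrative.

CATEGORIES = {
    "billing": ["invoice", "charge", "refund", "payment"],
    "technical support": ["error", "bug", "crash", "login"],
    "product info": ["feature", "pricing", "plan", "demo"],
}

ROUTES = {
    "billing": "finance_agent",
    "technical support": "support_agent",
    "product info": "product_agent",
}

def classify(message, fallback="other"):
    """Return the first category whose keywords appear in the message."""
    text = message.lower()
    for category, keywords in CATEGORIES.items():
        if any(word in text for word in keywords):
            return category
    return fallback

def route(message):
    """Map a message to the agent that should handle it."""
    return ROUTES.get(classify(message), "general_agent")

print(route("I was charged twice on my last invoice"))  # finance_agent
print(route("The app shows an error at login"))         # support_agent
print(route("Hello there"))                             # general_agent
```

A catch-all route keeps unclassified messages from being dropped.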

5. Basic LLM Chain / Question and Answer Chain: These are rarely used in most agency/business scenarios. They allow for multi-step prompt chaining or simple Q&A, but usually the AI Agent node is more flexible.

Example:

A research assistant that asks clarifying questions before providing an answer.

Example:

Chaining a data extraction step with a summarization step.

6. Sentiment Analysis, Summarization Chain: These are almost never used in practical business settings.

Example:

Detecting if a user is angry or happy (sentiment) in special use-cases.

Example:

Summarizing a long document (rarely needed in agentic workflows).

Best Practice: Focus your learning on the AI Agent, OpenAI, Information Extractor, and Text Classifier nodes. You’ll cover 90% of real-world needs.

Practical Demonstration: Setting Up Memory and Knowledge Base in N8N

Let’s walk through a practical setup for a business-grade conversational agent:

1. Configure your Chat Message Trigger to accept input from a CRM or chat interface, and ensure it passes a unique Session ID.

2. Connect your AI Agent node. Set the system prompt, attach your preferred chat model, and wire up the memory node (Supabase/Postgres) using the Session ID from step 1.

3. Set the context window length to a value that covers a typical conversation (e.g., 10-20 messages).

4. Set up a Supabase vector store for your knowledge base. Use a tool like Flowise to upload your documents, split them into chunks, and add metadata tags.

5. Connect a document-retrieval tool for that vector store to your AI Agent node, configuring metadata filters as needed.

6. Test the workflow by sending user queries and confirming that the agent recalls previous context, retrieves relevant document chunks, and responds accurately.

Example:

A customer asks about a specific refund policy. The agent remembers their last issue, retrieves the exact policy excerpt from the knowledge base, and provides a personalized answer.

Example:

A sales prospect chats with your bot twice over a week. The agent greets them by name, recalls past questions, and sends a follow-up PDF from the knowledge base.

Best Practice: Always test with real, messy data and long conversations. Ensure your memory and knowledge base work seamlessly together.

Common Pitfalls and How to Avoid Them

1. Shiny Object Syndrome: Don’t try to learn or implement every possible node or feature. Focus on what works for 90% of business use cases: AI Agent, OpenAI, Information Extractor, and Text Classifier.

Example:

Resist the urge to set up every memory or vector store option; stick to Postgres/Supabase unless you have a specific need.

2. Static or Missing Session IDs: If you don’t pass a unique identifier, your agent will mix up conversations and leak context.

Example:

A support bot shows one customer another customer’s order history. Avoid this by always wiring up dynamic Session IDs.

3. Retrieving Too Many Knowledge Base Chunks: Setting the chunk retrieval limit too high wastes tokens and can cause hallucinations.

Example:

Your agent retrieves 20 chunks per query, leading to high model cost and confusing, irrelevant answers. Start small and tune upwards.

4. Not Using Metadata Filtering: If you don’t tag your documents, you can’t control what the agent retrieves.

Example:

All agents see all documents, even confidential ones. Use metadata to separate access cleanly.

5. Forgetting to Pass Conversation History to Extractor Nodes: If the info you want was said in an earlier turn, always pass the full history.

Example:

Trying to extract an account number from a single message, when it was actually mentioned two messages ago.

Advanced Use Cases: Bringing It All Together

When you master memory, knowledge bases, and tool integration, you unlock advanced workflows:

1. Multi-Agent Routing: Use the Text Classifier node to detect intent (“sales”, “support”, “technical”) and route to different specialized agents, each with their own memory and knowledge base filters.

Example:

A billing question is routed to the finance agent; a question about features is routed to the product agent.

2. Dynamic API Actions: Add tools that let the agent look up live data, trigger workflows, or make external API calls.

Example:

A support agent checks order status in real time, or books a meeting in your calendar app.

3. Personalized, Multi-Session Conversations: With robust memory and Session IDs, your agent can continue conversations across channels and time.

Example:

A returning customer is greeted by name and offered support based on their last inquiry, even if they switch devices.

Tips for Scaling and Maintaining N8N AI Agents

1. Use Supabase/Postgres for all persistent memory and knowledge base needs. It’s scalable and cloud-ready.

2. Standardize on metadata schemas for all documents. Define clear tags for departments, clients, and privacy levels.

3. Regularly audit your vector store and memory tables. Remove outdated chunks and check for access issues.

4. Monitor token usage and context window sizes. Tune for cost and accuracy.

5. Document your workflows and Session ID logic. Make it easy for others to understand how context and memory are managed.
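Removing outdated chunks is easiest when every chunk records which document it came from. This Python sketch assumes a `source` field in each chunk’s metadata (something you would add to your own metadata schema) and refreshes a document by dropping its old chunks and adding the new ones; against a real vector store the same logic becomes a delete filtered on that metadata field, followed by an insert.

```python
# Sketch: refresh one document by replacing all of its chunks.
# Assumes each chunk's metadata carries a "source" field (part of your own schema).

def replace_document(store, source, new_chunks):
    """Return a new chunk list with `source`'s old chunks swapped for new ones."""
    kept = [c for c in store if c["metadata"].get("source") != source]
    fresh = [{"text": text, "metadata": {"source": source}} for text in new_chunks]
    return kept + fresh

store = [
    {"text": "Refunds take 30 days.", "metadata": {"source": "refund-policy.pdf"}},
    {"text": "Refunds need a receipt.", "metadata": {"source": "refund-policy.pdf"}},
    {"text": "We ship worldwide.", "metadata": {"source": "faq.md"}},
]
store = replace_document(store, "refund-policy.pdf", ["Refunds take 14 days."])
print([c["text"] for c in store])  # ['We ship worldwide.', 'Refunds take 14 days.']
```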

Conclusion: From Beginner to Pro with N8N AI Agent Nodes

You’ve worked through the full spectrum of building business-ready AI agents in N8N, from wiring up basic chat triggers to configuring advanced memory and knowledge bases and connecting practical tools for extraction and classification. You now know exactly which nodes to focus on, how to avoid the traps of “shiny object syndrome,” and how to ensure your agents are context-aware, secure, and effective.

Key Takeaways:

- The AI Agent node is the foundation for almost all agentic workflows in N8N; master it deeply.

- Use Supabase/Postgres for memory and knowledge bases, always with dynamic Session IDs for multi-user support.

- Metadata filtering is the secret weapon for flexible, scalable knowledge bases.

- Focus on the proven nodes (AI Agent, OpenAI, Information Extractor, Text Classifier) for 90% of business needs.

- Test your agents with real data, and always pass relevant context and history.

The real power comes from applying these skills to business problems: automating support, onboarding, sales, research, and more. Don’t get lost in endless experimentation. Pick the right tools, wire them up properly, and deliver real results. That’s how you become a pro in N8N AI Agent workflows.

Frequently Asked Questions

This FAQ section is designed to address the most common questions and concerns about working with N8N’s AI Agent nodes, from the basics to advanced implementation strategies. It’s structured to guide both newcomers and experienced professionals through key concepts, technical setup, workflow optimization, and best practices for building practical AI-powered solutions in business environments.

What is the core concept of the AI Agent node in N8N?

The AI Agent node acts as a framework for building conversational and task-oriented AI agents within N8N workflows.

It allows you to combine AI models, tools, and memory to create dynamic, context-aware interactions. The node enables you to define how an agent behaves, integrates with company data or software, stores conversation history, and takes action based on user input. This modular approach lets you design agents that can answer questions, automate tasks, and interact with external systems, making it highly adaptable for business use cases.

How do AI models and memory function within the AI Agent node?

AI models generate responses, while memory provides conversation context for coherent interactions.

You select an AI model (such as OpenAI’s GPT-4) to handle language generation. Memory, often a PostgreSQL database (typically hosted on Supabase), stores the chat history, linked to each user or session by a unique session ID. This means the agent remembers past messages, enabling it to answer follow-up questions, reference previous discussions, and deliver more personalized, informed responses over time.

What role do tools and knowledge bases play in empowering an AI agent?

Tools extend the agent’s capabilities, while knowledge bases provide access to your organization’s information.

Tools let the agent perform actions (like making API calls or interfacing with external apps). Knowledge bases, usually set up as vector stores, enable the agent to search and retrieve relevant content from your documents or databases. For example, a support agent can pull details from product manuals or company policies stored in the knowledge base, answering user queries with accurate, context-rich information.

How is a vector knowledge base set up using Supabase in N8N?

Connect N8N to your Supabase project, create tables for storing document chunks and embeddings, and upload your documents.

You’ll use your Supabase project URL and API key to connect N8N to Supabase, then run an SQL script (from the N8N docs) in the Supabase SQL editor to create the vector store tables. Documents can be processed with tools like Flowise, which split them into chunks with metadata and embeddings and then upsert them into Supabase. This setup lets your agent semantically search and retrieve relevant information during conversations.

What is the purpose of metadata when using a vector knowledge base?

Metadata enables precise filtering and retrieval of relevant document chunks.

By attaching metadata (like “product_info” or “policy_doc”) to each chunk, you can instruct your agent or retrieval tool to focus only on certain types of documents. For example, if a user asks about product specs, the agent can search only chunks tagged with “product_info,” improving response accuracy and relevance, especially in large or mixed-document knowledge bases.

How does the OpenAI node differ from the AI Agent node?

The OpenAI node is a direct interface to OpenAI models, while the AI Agent node offers memory and tool integration.

The OpenAI node is great for simple, single-turn interactions or text generation, but it lacks built-in memory and cannot natively handle multi-step workflows or tool integrations. The AI Agent node, by contrast, is designed for building sophisticated agents that can remember conversation history, use tools, and access knowledge bases, all within one flexible framework.

When might you use the Basic LLM Chain node?

The Basic LLM Chain node is best for breaking tasks into sequential, multi-step processes.

If you need granular control, such as generating an outline and then expanding each section iteratively, the LLM Chain lets you chain outputs from one step as inputs to the next. It’s less common for conversational agents but valuable for structured content generation or workflows where each step depends on the previous output.

What is the primary function of the Text Classifier node?

The Text Classifier node is primarily used for intent classification.

It analyzes user input and assigns it to predefined categories (such as “sales inquiry,” “support,” or “general information”). This allows your workflow to route requests to specialized agents, trigger certain tools, or apply filters, helping deliver more relevant, context-aware responses. For instance, a “support” intent could send the conversation to an agent with access to technical documentation.

What is the primary purpose of the Chat Message trigger node in N8N workflows?

The Chat Message trigger node activates workflows in response to messages sent in N8N’s chat interface.

When a user types a message, this node initiates the workflow, passing the message to subsequent nodes for processing. This enables you to build interactive chat-based solutions, such as virtual assistants or helpdesk bots, directly inside N8N.

What are the two primary categories of tools used with AI agents in N8N?

The two main categories are knowledge base tools and action tools.

Knowledge base tools let the agent search and retrieve information from document stores or databases. Action tools enable the agent to perform external operations, such as making API calls, updating records, or sending notifications. This distinction helps you design workflows where agents can both answer questions and take practical actions.

What is the most common database system for memory in N8N AI agents, and which cloud-hosted tool is typically used?

PostgreSQL is the preferred database for memory, commonly deployed via Supabase.

Using Supabase provides a scalable, cloud-based PostgreSQL database where conversation history can be reliably stored and retrieved, facilitating persistent memory for AI agents across sessions and users.

How is conversational history and user identification handled in N8N AI agents integrated with a CRM?

A unique identifier (like a Contact ID from the CRM) is used to manage user sessions and conversation history.

This ID is passed into N8N and stored in the memory database, ensuring that conversation context is linked to the right user. For instance, if a CRM page has a unique URL with a contact ID, you extract that ID and use it as the session ID, allowing the agent to remember prior discussions with that user.

What does the context window length setting control in the AI Agent node’s memory configuration?

The context window length sets how many recent conversation turns are loaded from memory and passed to the AI model as context.

A longer window provides more context but uses more tokens (which may increase costs and risk information overload). Shorter windows limit memory but can focus the agent’s responses. Typical settings involve balancing relevance with cost and performance.

Why is metadata important when setting up a Supabase vector store for a knowledge base in N8N?

Metadata lets you filter and retrieve only the most relevant document chunks for each query.

For example, if your knowledge base contains product manuals, HR policies, and technical docs, you can tag each chunk with its document type. The agent or retrieval tool can then fetch only the relevant chunks, improving both speed and accuracy of responses.

What is the most common use case for the Text Classifier node?

Intent classification is the main use case for the Text Classifier node.

It helps the workflow determine what the user is trying to accomplish (e.g., “order status,” “technical support”) and routes the conversation or triggers actions accordingly. For example, a request classified as “billing” can send the user to an agent with access to billing documentation and tools.

What is a disadvantage of providing too many document chunks to the AI Agent node’s knowledge base tool?

Passing too many chunks can increase costs (more tokens used) and cause hallucination by overwhelming the model with irrelevant information.

It’s important to set chunk retrieval limits and filter effectively, so the agent only receives the most relevant context, keeping responses focused and accurate.

Why does the OpenAI node in N8N lack built-in memory compared to the AI Agent node?

The OpenAI node is designed for stateless, single-turn interactions.

It does not maintain conversation history or manage session context. Although you can reference prior messages by passing them in manually, true integrated memory (with features like session management) is only available in the AI Agent node, which was built for complex, multi-turn workflows.

Why might you need to provide both user input and conversation history when using the Information Extractor node?

Key information may be spread across multiple conversation turns.

For example, a user’s account number might be provided earlier in the chat. By supplying the full history, the extractor can find and pull relevant details, even if they’re not in the current message, leading to more accurate data extraction and workflow automation.

Why is memory important in building conversational AI agents in N8N, and how does Simple Memory differ from using an external database like Supabase/Postgres?

Memory is crucial for maintaining context across conversations, enabling personalized and coherent interactions.

Simple Memory stores data locally and is mainly for testing or single-user scenarios; it’s not persistent or scalable. Using Supabase/Postgres allows for multi-user support, persistence across sessions, and better integration with other business tools. In both cases, the session ID is essential for linking conversation history to specific users.

What is the difference between setting up memory and a knowledge base (vector store) for an AI agent in N8N?

Memory stores chat history for individual users, while a knowledge base stores documents for semantic retrieval.

Memory is typically set up with a database like Postgres, storing messages by session ID. The knowledge base requires a vector store (also often in Supabase), with document chunks and embeddings, allowing the agent to search and retrieve relevant content. Both work together: memory ensures context, and the knowledge base gives access to your organization’s information.

What are the trade-offs in choosing context window length and number of document chunks retrieved from a knowledge base?

Longer context windows and more chunks provide richer information but increase costs and risk information overload.

If the window is too large, responses may be slower and less relevant. Too many chunks can lead to hallucination or token limit issues. The best approach is to experiment, starting with moderate settings and adjusting based on user feedback and conversation quality.

Why is structured output (like JSON) often preferred when using LLMs via the OpenAI node in N8N?

Structured output makes it easier for downstream nodes to parse and act on AI responses.

For example, if you need to extract an email address, having the model return { "email": "example@domain.com" } lets you automate follow-up actions. You implement this by instructing the model (in the system prompt) to format its output in JSON or another machine-readable structure.
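On the receiving side, downstream logic should parse and validate the reply before acting on it. This Python sketch checks for the `email` key from the example above; the validation rules are illustrative, and a failed check is the signal to retry or escalate rather than act on bad data.

```python
import json

# Sketch: validate a model's structured reply before downstream nodes use it.
# The "email" key follows the example above; the checks are illustrative.

def parse_email_output(raw):
    """Parse the model's reply and fail loudly if it isn't the JSON we asked for."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"Model did not return valid JSON: {exc}") from exc
    email = data.get("email") if isinstance(data, dict) else None
    if not isinstance(email, str) or "@" not in email:
        raise ValueError("Missing or malformed 'email' field")
    return email

print(parse_email_output('{ "email": "example@domain.com" }'))  # example@domain.com
```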

What are common challenges when connecting N8N to Supabase for memory or knowledge base setup?

Authentication errors, incorrect API keys, and misconfigured SQL tables are frequent issues.

Always verify your Supabase project’s API credentials, double-check that the SQL script from the documentation has been executed, and ensure your tables contain the required fields for embeddings and metadata. Testing the connection with a simple query is a good first step to troubleshooting.

What are some practical business applications for N8N AI Agent nodes?

N8N AI Agent nodes can power internal helpdesks, automate customer support, handle sales queries, and even generate reports or summaries.

For example, a company could deploy an AI agent to answer HR policy questions for employees, pull specific answers from internal documentation, or handle lead qualification in a CRM by routing inquiries based on intent classification.

What’s a common misconception about the AI Agent node in N8N?

Many think it’s just for chatbots, but it can automate complex business processes.

The AI Agent node can interact with APIs, update databases, and trigger external workflows, not just respond to chat messages. Think of it as a smart automation engine that can reason, remember, and take meaningful action across your digital ecosystem.

What is dynamic routing in the context of N8N AI Agent workflows?

Dynamic routing means directing user requests to different agents, tools, or knowledge bases based on intent.

For instance, if a user’s message is classified as “technical support,” the workflow routes it to an AI agent with access to technical documents. This ensures that each inquiry is handled by the most relevant process, improving efficiency and user satisfaction.
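Here is a minimal Python sketch of routing. It assumes an earlier step, such as an LLM prompt or a classifier, has already produced an intent label. The handler functions are hypothetical stand-ins for separate agents or sub-workflows. Inside N8N, the same branching could be expressed with a Switch node on the label.

```python
from typing import Callable, Dict


def technical_agent(message: str) -> str:
    # Stand-in for an agent with access to technical documents
    return f"[technical] {message}"


def sales_agent(message: str) -> str:
    # Stand-in for a sales-focused agent
    return f"[sales] {message}"


def general_agent(message: str) -> str:
    # Fallback for unrecognized intents, so no request is silently dropped
    return f"[general] {message}"


ROUTES: Dict[str, Callable[[str], str]] = {
    "technical support": technical_agent,
    "pricing inquiry": sales_agent,
}


def route(intent: str, message: str) -> str:
    """Send the message to the handler registered for its intent label."""
    handler = ROUTES.get(intent.strip().lower(), general_agent)
    return handler(message)


print(route("Technical Support", "The API returns 500 errors"))
# [technical] The API returns 500 errors
```

The label is normalized, and there is an explicit fallback. Both matter because model-generated labels can vary in casing or drift outside the expected set.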

How can hallucination (fabricated answers) be minimized in AI Agent workflows?

Filter retrieved document chunks, limit the amount of context, and refine prompts for accuracy.

If the agent pulls in too much data, or irrelevant data, it is more likely to make up answers. Use metadata filtering and set sensible limits on document retrieval. Give clear system prompts that tell the model to answer only when the retrieved context supports the answer, and to say so when it does not.
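The three levers above can be sketched together in Python. The `Chunk` structure, the 0.5 score threshold, `top_k`, and the prompt wording are all illustrative assumptions. In a real setup the metadata filter can often be pushed into the vector store query itself, so fewer rows come back in the first place.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List

# Illustrative system prompt wording; adjust to your domain.
SYSTEM_PROMPT = (
    "Answer only from the provided context. "
    "If the context does not contain the answer, say you don't know."
)


@dataclass
class Chunk:
    text: str
    score: float  # similarity from the vector search; higher means more relevant
    metadata: Dict[str, Any] = field(default_factory=dict)


def select_context(
    chunks: List[Chunk], required: Dict[str, Any], min_score: float, top_k: int
) -> List[Chunk]:
    """Drop chunks failing the metadata filter or score threshold; keep the best top_k."""
    kept = [
        c for c in chunks
        if c.score >= min_score
        and all(c.metadata.get(key) == value for key, value in required.items())
    ]
    kept.sort(key=lambda c: c.score, reverse=True)
    return kept[:top_k]


results = [
    Chunk("Vacation policy: 25 days", 0.82, {"department": "HR"}),
    Chunk("VPN setup guide", 0.79, {"department": "IT"}),
    Chunk("Sick leave policy", 0.74, {"department": "HR"}),
    Chunk("Office party photos", 0.31, {"department": "HR"}),
]
context = select_context(results, {"department": "HR"}, min_score=0.5, top_k=3)
print([c.text for c in context])
# ['Vacation policy: 25 days', 'Sick leave policy']
```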

What are best practices for managing session IDs in multi-user workflows?

Always use unique, consistent identifiers (like CRM Contact IDs) and store them securely.

Avoid using user names or emails directly for privacy. If integrating with webhooks or chat interfaces, extract a stable ID from the payload or URL to ensure each user’s history is distinct and reliably accessible.
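Here is a minimal sketch of pulling a stable session ID from a webhook body, with the URL as a fallback. The field name `contact_id`, the `contact-` prefix, and the helper names are hypothetical; use whatever your CRM or chat widget actually sends. The resulting string is what you would pass to the memory node as its session key.

```python
from typing import Any, Dict, Optional
from urllib.parse import parse_qs, urlparse


def _is_blank(value: Any) -> bool:
    return value is None or not str(value).strip()


def extract_session_id(payload: Dict[str, Any], url: Optional[str] = None) -> str:
    """Return a stable per-user session ID from a webhook body or, failing that, the URL."""
    contact_id = payload.get("contact_id")
    if _is_blank(contact_id) and url:
        values = parse_qs(urlparse(url).query).get("contact_id")
        contact_id = values[0] if values else None
    if _is_blank(contact_id):
        # Refuse rather than fall back to a shared default ID,
        # which would mix different users' histories.
        raise ValueError("no contact_id in payload or URL")
    return f"contact-{str(contact_id).strip()}"


print(extract_session_id({"contact_id": 48213}))  # contact-48213
print(extract_session_id({}, "https://example.com/chat?contact_id=abc-77"))  # contact-abc-77
```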

Can I use LLMs other than OpenAI’s in N8N AI Agent nodes?

Yes, you can connect to alternative providers like OpenRouter for access to various LLMs.

This flexibility lets you experiment with different models, optimize for cost or performance, and avoid vendor lock-in. Just ensure the node supports your chosen provider’s API and authentication requirements.

What is the purpose of the Text Splitter in preparing documents for a vector knowledge base?

The Text Splitter breaks long documents into smaller, manageable chunks for efficient embedding and retrieval.

This chunking is essential for semantic search: instead of searching entire documents, the agent can retrieve only the most relevant sections, improving both speed and answer quality.
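To show what chunk size and overlap mean, here is a simplified character-based splitter in Python. N8N's splitter nodes expose similar size and overlap settings, but this sketch is not their implementation. `split_text` and its defaults are assumptions for illustration. The overlap repeats a little text between neighbouring chunks, so a sentence cut at a boundary still appears whole in at least one chunk more often.

```python
from typing import List


def split_text(text: str, chunk_size: int = 500, chunk_overlap: int = 50) -> List[str]:
    """Split text into chunks of at most `chunk_size` characters,
    each starting `chunk_overlap` characters before the previous one ended."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be in [0, chunk_size)")
    step = chunk_size - chunk_overlap
    chunks: List[str] = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last chunk already reaches the end of the text
    return chunks


print(split_text("abcdefghij", chunk_size=4, chunk_overlap=1))
# ['abcd', 'defg', 'ghij']
```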

How do you update or add new documents to an existing vector knowledge base in Supabase?

Process new documents into chunks and embeddings, then upsert them into the Supabase table.

This keeps your knowledge base current. Upserting means new chunks are added and existing ones with matching keys are updated, which simplifies content management as your business information evolves.
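Upserts only work if the same chunk always gets the same key. Here is a minimal sketch of that idea, assuming the key is derived from the source document name and the chunk position. A plain dict stands in for the database table, and embeddings are omitted for brevity. `chunk_key` and `upsert_chunks` are hypothetical helpers.

```python
import hashlib
from typing import Dict, List

Table = Dict[str, Dict[str, str]]  # chunk key -> row; stands in for the real table


def chunk_key(source: str, index: int) -> str:
    """Deterministic key: the same document and chunk position always map to the same key."""
    return hashlib.sha256(f"{source}#{index}".encode("utf-8")).hexdigest()


def upsert_chunks(table: Table, source: str, chunks: List[str]) -> None:
    """Insert new chunks and overwrite existing ones that share a key."""
    for index, text in enumerate(chunks):
        table[chunk_key(source, index)] = {"source": source, "content": text}


table: Table = {}
upsert_chunks(table, "hr-handbook.pdf", ["Leave: 20 days", "Remote work: 2 days/week"])
upsert_chunks(table, "hr-handbook.pdf", ["Leave: 25 days", "Remote work: 2 days/week"])
print(len(table))  # 2
print(table[chunk_key("hr-handbook.pdf", 0)]["content"])  # Leave: 25 days
```

One caveat with position-based keys: if an updated document produces fewer chunks than before, the leftover rows are never overwritten. Deleting a source's old rows before re-ingesting avoids serving stale text.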

How can you control token usage and costs in AI Agent workflows?

Limit context window length, restrict the number of retrieved chunks, and cap the length of model responses. Lower temperature settings make outputs more deterministic, but on their own they do not reduce token usage.

Monitor token usage with logging tools, and regularly review workflow performance. For example, reducing the number of retrieved knowledge base chunks can significantly cut token consumption without harming answer quality.
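Here is a minimal sketch of capping retrieved context with a token budget. It uses the rough rule of thumb of about 4 characters per token for English text. That is a heuristic, not a tokenizer, so use the model's own tokenizer when exact counts matter. The function names are hypothetical, and chunks are assumed to arrive sorted best-first.

```python
from typing import List


def estimate_tokens(text: str) -> int:
    """Rough estimate: about 4 characters per token for English text."""
    return (len(text) + 3) // 4


def fit_to_budget(chunks: List[str], max_tokens: int) -> List[str]:
    """Take chunks in order (best first) until the next one would exceed the budget."""
    selected: List[str] = []
    used = 0
    for chunk in chunks:
        cost = estimate_tokens(chunk)
        if used + cost > max_tokens:
            break
        selected.append(chunk)
        used += cost
    return selected


chunks = ["x" * 400, "y" * 400, "z" * 400]  # roughly 100 tokens each
print(len(fit_to_budget(chunks, max_tokens=250)))  # 2
```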

How do you ensure security and privacy of sensitive data in N8N AI Agent memory and knowledge bases?

Use encrypted connections to your databases, restrict access via API keys, and avoid storing unnecessary personal data in memory or knowledge bases.

Regularly audit your Supabase projects and N8N instance for access controls. For sensitive workflows, consider anonymizing data or applying strict metadata filters to prevent exposure of confidential content.
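One simple form of anonymization is redacting obvious personal data before text reaches memory or the knowledge base. This is a minimal sketch. The two regular expressions are deliberately simple assumptions: they will miss many formats and can over-match long digit sequences such as order numbers. Treat this as a starting point, not a compliance measure. `redact` is a hypothetical helper.

```python
import re

# Deliberately simple patterns; they will miss some formats and can over-match.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Replace email addresses and phone-like numbers before text is stored."""
    text = EMAIL_RE.sub("[EMAIL]", text)  # emails first, so their digits are not read as phones
    return PHONE_RE.sub("[PHONE]", text)


print(redact("Contact jane.doe@example.com or +1 555-123-4567."))
# Contact [EMAIL] or [PHONE].
```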

What are some real-world examples of using intent classification in business workflows?

Intent classification can route sales leads, triage support tickets, or trigger specific onboarding flows.

For instance, if a user’s message is classified as “pricing inquiry,” the workflow can send them to a sales agent or provide an automated quote, streamlining customer journeys and reducing manual triage.

What should I check if my AI Agent isn’t responding as expected?

Verify your model and memory connections, check session ID handling, review workflow logs, and ensure retrieved context is relevant and not empty.

Common issues include misconfigured database credentials, missing or inconsistent session IDs, or errors in document chunking. Use N8N’s built-in logging to trace where the process may be breaking down.

Should I use pre-built AI templates from N8N’s library?

AI templates can be useful for inspiration, but always review and adapt them to your specific needs.

Templates may not follow best practices for your use case or data privacy requirements. Use them as a starting point, but understand each node’s configuration before deploying in a production environment.

What’s the best way to test and iterate on AI Agent workflows?

Start with sample data, use small user groups, and monitor agent outputs closely.

Test edge cases (like ambiguous queries), review responses for accuracy, and adjust context window length, chunk limits, or system prompts as needed. Incorporate user feedback to refine workflow logic and improve overall performance.

Certification

About the Certification

Become certified in N8N AI Agent Nodes and demonstrate the ability to build intelligent bots, automate workflows, and deploy dynamic knowledge bases for support, sales, and onboarding, all with practical, low-code solutions.

Official Certification

Upon successful completion of the "Certification in Building Automated AI Workflows and Knowledge Bases with N8N", you will receive a verifiable digital certificate. This certificate demonstrates your expertise in the subject matter covered in this course.

Benefits of Certification

- Enhance your professional credibility and stand out in the job market.

- Validate your skills and knowledge in cutting-edge AI technologies.

- Unlock new career opportunities in the rapidly growing AI field.

- Share your achievement on your resume, LinkedIn, and other professional platforms.

How to complete your certification successfully?

To earn your certification, you’ll need to complete all video lessons, study the guide carefully, and review the FAQ. After that, you’ll be prepared to pass the certification requirements.
