Video Course: Understanding and Effectively Using AI Reasoning Models

Dive into AI reasoning models that go beyond data processing. This course equips you with the skills to apply these powerful tools for complex problem-solving, from coding to data analysis, transforming your projects with advanced AI insights.

Related Certification: Proficient Application of AI Reasoning Models in Practice

What You Will Learn

- Differentiate next-word prediction from RL-on-Chain-of-Thought scaling

- Apply Chain-of-Thought prompting and effective "what"-focused prompts

- Use o1-model features such as reasoning effort, tool calling, and structured outputs

- Identify and evaluate key use cases: coding, planning, data analysis, and research

Study Guide

Introduction

Welcome to the comprehensive guide on Understanding and Effectively Using AI Reasoning Models. This course is designed to take you from a beginner's understanding to a deep, practical knowledge of how these models function and how they can be applied in various domains. As we move further into the era of artificial intelligence, the ability to leverage AI reasoning models becomes increasingly valuable. These models are not just about processing data; they are about understanding and reasoning, making them powerful tools for complex problem-solving. By the end of this course, you'll be equipped with the skills to harness these models effectively in your own projects.

The Shift from Next Word Prediction to Reasoning

The traditional approach to scaling large language models (LLMs) has centered on next-word prediction. This paradigm trains models to predict the next token in a sequence, which implicitly teaches them a variety of skills, including grammar, world knowledge, and even some reasoning. However, this mode of operation is often compared to "system one thinking": fast and intuitive, but not well suited to complex reasoning tasks.
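
To make the next-token objective concrete, here is a toy sketch: a bigram counter over a tiny hand-written corpus that "predicts" the most frequent next word. Real LLMs learn a neural probability distribution over a subword vocabulary rather than counting word pairs; the corpus and function names are illustrative only.

```python
from collections import Counter, defaultdict
from typing import Optional


def train_bigram(corpus: list[str]) -> dict[str, Counter]:
    """Count, for each word, which words follow it and how often."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        tokens = sentence.lower().split()
        for current, nxt in zip(tokens, tokens[1:]):
            counts[current][nxt] += 1
    return counts


def predict_next(counts: dict[str, Counter], word: str) -> Optional[str]:
    """Greedy next-word prediction: the most frequent follower, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


corpus = [
    "the cat sat on the mat",
    "the cat ate the fish",
    "a dog sat on the rug",
]
model = train_bigram(corpus)
print(predict_next(model, "the"))  # → cat
print(predict_next(model, "sat"))  # → on
```

Nothing in this objective rewards getting a multi-step answer right; the model only learns which continuation is most likely, which is the gap the reasoning approaches below try to close.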

For example, while next word prediction can help a model complete a sentence grammatically, it struggles with problems requiring deeper logical reasoning, such as solving a complex math problem or understanding nuanced sentiments in text.

In contrast, the new reasoning models are trained with reinforcement learning (RL) on chains of thought (CoT). This approach shifts the focus from merely predicting the next word to carrying out a structured reasoning process. The models are trained on datasets whose problems have explicitly correct answers, such as coding problems, and are rewarded when their step-by-step reasoning leads to a correct solution.

Chain of Thought Prompting as a Workaround

Chain of Thought (CoT) prompting is a technique developed to enhance the reasoning capabilities of LLMs. It encourages models to "think step by step," generating intermediate reasoning steps that mimic human problem-solving processes. This approach is akin to enforcing "system two thinking," which is more deliberate and resource-intensive.

For instance, when tackling a complex math problem, a model using CoT prompting might break down the problem into smaller, manageable steps, storing intermediate information as tokens. This method allows the model to arrive at a more accurate solution than it would by relying solely on next word prediction.
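
With a standard chat model, CoT is purely a prompting convention. A minimal sketch, assuming the model is asked to finish with a line of the form `Answer: <value>` (a convention chosen here, not a standard): it builds the prompt and pulls the final answer out of the reasoning text. The sample completion is hand-written, not real model output.

```python
import re
from typing import Optional

COT_SUFFIX = (
    "Let's think step by step. Show your intermediate work, "
    "then give the final result on its own line as 'Answer: <value>'."
)


def build_cot_prompt(question: str) -> str:
    """Append a step-by-step instruction to a plain question."""
    return f"{question.strip()}\n\n{COT_SUFFIX}"


def extract_answer(completion: str) -> Optional[str]:
    """Return the value from the last line that starts with 'Answer:', if any."""
    matches = re.findall(r"^Answer:\s*(.+?)\s*$", completion, flags=re.MULTILINE)
    return matches[-1] if matches else None


prompt = build_cot_prompt("A shop sells pens at 3 for $2. How much do 12 pens cost?")

# Hand-written example of the kind of text a CoT prompt elicits.
completion = """12 pens is 12 / 3 = 4 groups of 3 pens.
Each group costs $2, so 4 * 2 = 8.
Answer: $8"""

print(extract_answer(completion))  # → $8
```

The intermediate lines are the "tokens that store intermediate information"; only the last line is treated as the answer.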

Another example is language translation, where CoT prompting can help the model keep track of context and meaning across longer sentences, which may improve translation accuracy.

The New Scaling Paradigm: Reinforcement Learning on Chain of Thought

The latest generation of reasoning models, such as OpenAI's o1 and o3, is trained using reinforcement learning (RL) on Chain of Thought. This method involves training models on datasets with explicitly correct answers and using a "grader" to verify the correctness of each output.

Correct solutions, along with the CoT that led to them, are rewarded through reinforcement learning, which adjusts the model's weights to favor trajectories that produce verifiably correct answers. The process samples many chains of thought per problem (many forward passes) and rewards only the correct ones, leading to models with significantly stronger reasoning capabilities.

For example, in a coding task, the model might generate several potential solutions, each with its own chain of thought. The grader evaluates these solutions, rewarding the model for the correct ones, thereby reinforcing the pathways that led to success.
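
The sample, grade, and reward loop can be illustrated with a deliberately tiny toy. Here the "policy" is just a softmax over four fixed, hand-written reasoning trajectories for one problem; the grader checks each trajectory's final answer against a known reference; and a REINFORCE-style policy-gradient update (chosen here for simplicity) raises the probability of sampled trajectories in proportion to their reward minus the batch-mean baseline. Real systems update the weights of a full language model and sample new chains of thought token by token. None of the names, numbers, or the choice of REINFORCE come from any published description of how o1 or o3 was trained.

```python
import math
import random

REFERENCE = "408"  # verifiable answer to "What is 17 * 24?"

# Hypothetical candidate chains of thought, each ending in a final answer.
TRAJECTORIES = [
    ("17 * 24 = 17 * 20 + 17 * 4 = 340 + 68", "408"),
    ("17 * 24 is about 17 * 25 = 425", "425"),
    ("17 * 24 = 10 * 24 + 7 * 24 = 240 + 168", "408"),
    ("17 * 24 = 17 + 24 = 41", "41"),
]


def grade(answer: str) -> float:
    """Grader: reward 1.0 for the reference answer, else 0.0."""
    return 1.0 if answer == REFERENCE else 0.0


def softmax(logits: list[float]) -> list[float]:
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def train(steps: int = 200, samples: int = 8, lr: float = 0.5, seed: int = 0) -> list[float]:
    rng = random.Random(seed)
    logits = [0.0] * len(TRAJECTORIES)  # start from a uniform policy
    for _ in range(steps):
        probs = softmax(logits)
        # Sample several chains of thought for the same problem.
        picks = rng.choices(range(len(TRAJECTORIES)), weights=probs, k=samples)
        rewards = [grade(TRAJECTORIES[i][1]) for i in picks]
        baseline = sum(rewards) / samples  # batch-mean baseline reduces variance
        grads = [0.0] * len(logits)
        for i, r in zip(picks, rewards):
            advantage = r - baseline
            # d/d logit_j of log softmax(logits)[i] is 1[i == j] - probs[j].
            for j in range(len(logits)):
                grads[j] += advantage * ((1.0 if i == j else 0.0) - probs[j])
        logits = [x + lr * g / samples for x, g in zip(logits, grads)]
    return softmax(logits)


final_probs = train()
p_correct = sum(p for p, (_, ans) in zip(final_probs, TRAJECTORIES) if grade(ans) == 1.0)
print(f"P(correct answer) after training: {p_correct:.3f}")
```

Because the advantages in each batch sum to zero, correct trajectories only ever gain probability and incorrect ones only lose it, which is the "reinforcing the pathways that led to success" described above in miniature.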

Similarly, in a medical diagnosis scenario, the model could analyze symptoms step-by-step, using CoT to consider various diagnoses before arriving at the most likely conclusion.

Rapid Benchmark Saturation

One of the most striking developments with these new reasoning models is how much faster they saturate benchmarks than previous generations did. For instance, benchmarks that once took years to master are now being conquered in roughly a year, as illustrated by GPQA (Graduate-Level Google-Proof Q&A), the benchmark introduced by David Rein and colleagues.

This rapid progress indicates the enhanced capabilities of these models and suggests we are still in the early stages of their potential. As these models continue to evolve, we can expect even more impressive feats in terms of problem-solving and reasoning abilities.

Consider the example of a benchmark designed to test logical reasoning. Traditional models might take years to achieve high scores, while new reasoning models can master these benchmarks in a fraction of the time, demonstrating their superior reasoning capabilities.

Effective Prompting of Reasoning Models

When working with reasoning models like OpenAI's o1 series, it's crucial to take a different approach to prompting than with traditional chat models. As Ben Hylak and swyx emphasize, you should focus on the "what" (the desired outcome) rather than the "how" (the reasoning process).

Effective prompts for reasoning models should include an explicit goal, the desired return format, warnings, and all relevant context. Avoid instructing the model on specific reasoning styles or telling it how to think step-by-step.
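
One way to keep prompts focused on the "what" is to assemble them from the four parts just listed. This is a minimal sketch; the section labels and the function name are illustrative choices, not a format any model requires, and the financial figures are made up.

```python
def build_reasoning_prompt(goal: str, return_format: str,
                           warnings: list[str], context: str) -> str:
    """Assemble a 'what'-focused prompt: goal, return format, warnings, context.

    Deliberately contains no instructions on *how* to reason.
    """
    warning_lines = "\n".join(f"- {w}" for w in warnings) or "- None"
    return (
        f"Goal:\n{goal.strip()}\n\n"
        f"Return format:\n{return_format.strip()}\n\n"
        f"Warnings:\n{warning_lines}\n\n"
        f"Context:\n{context.strip()}\n"
    )


prompt = build_reasoning_prompt(
    goal="Produce a one-page summary of Q3 revenue drivers for the finance team.",
    return_format="Three sections: Highlights, Risks, Open questions. Bullet points only.",
    warnings=["Use only figures present in the context.", "Flag any number you could not verify."],
    context="Q3 revenue: $4.2M (Q2: $3.9M). New enterprise deals: 6. Churned accounts: 2.",
)
print(prompt)
```

Note what is absent: there is no "think step by step" and no list of reasoning stages; the model is left to plan its own approach.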

For example, if you're using a reasoning model to generate a financial report, provide clear instructions on the report's structure and the data it should include. Let the model determine the reasoning process internally, rather than specifying each step it should take.

In another scenario, when asking a model to solve a complex math problem, provide the problem statement and desired solution format, but refrain from guiding it through each calculation step.

Usage and Features of o1 Models

OpenAI's o1 models offer several features that are useful for building applications, including support for structured outputs. They are available via the API in variants such as o1, o1-mini, and o1-preview. Notably, o1-mini does not support system messages.

The o1 model accepts a "reasoning effort" parameter (low, medium, or high) that controls how much computation, and how many reasoning tokens, the model spends before answering, trading response latency and cost against answer quality.
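
A sketch of how these options might be assembled into a request, with no network call made. The returned dictionary is meant to be passed to the `openai` Python SDK as `client.chat.completions.create(**kwargs)`. Two details are assumptions based on OpenAI's documentation at the time of writing and are worth re-checking: o1 takes `developer` messages in place of `system` messages, and o1-mini accepts neither a system message nor `reasoning_effort`, so here the system text is folded into the user turn instead.

```python
from typing import Any

VALID_EFFORTS = {"low", "medium", "high"}


def build_request(model: str, system: str, user: str, effort: str = "medium") -> dict[str, Any]:
    """Build Chat Completions keyword arguments for an o1-family model.

    Assumption: o1-mini rejects system/developer messages and reasoning_effort.
    """
    if effort not in VALID_EFFORTS:
        raise ValueError(f"effort must be one of {sorted(VALID_EFFORTS)}, got {effort!r}")
    if model.startswith("o1-mini"):
        # Fold the would-be system instructions into the single user turn.
        messages = [{"role": "user", "content": f"{system}\n\n{user}"}]
        return {"model": model, "messages": messages}
    messages = [
        {"role": "developer", "content": system},  # assumed o1 replacement for "system"
        {"role": "user", "content": user},
    ]
    return {"model": model, "messages": messages, "reasoning_effort": effort}


request = build_request("o1", "You are a careful data analyst.", "Summarize the attached table.", "high")
print(request["reasoning_effort"])  # → high
```

Higher effort generally means more hidden reasoning tokens, so "high" suits offline analysis where latency matters less than quality.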

For instance, when you need structured output such as a JSON object, you can define a schema using Pydantic and have the model return data that conforms to it. This feature is particularly useful for tasks like data analysis or report generation.
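
A dependency-free sketch of the same idea: the schema is a pair of standard-library dataclasses, and `parse_report` rejects any JSON that does not match it. With Pydantic you would instead subclass `BaseModel` and call `model_validate_json`, and the `openai` Python SDK's `client.beta.chat.completions.parse` helper can take such a model as `response_format`. The `AnalysisReport` fields and the sample response are illustrative assumptions.

```python
import json
from dataclasses import dataclass, fields


@dataclass
class Finding:
    metric: str
    value: float
    comment: str


@dataclass
class AnalysisReport:
    title: str
    findings: list[Finding]
    confidence: str  # one of "low", "medium", "high"


FINDING_KEYS = {f.name for f in fields(Finding)}
REPORT_KEYS = {f.name for f in fields(AnalysisReport)}


def parse_report(raw: str) -> AnalysisReport:
    """Parse a JSON reply and check it matches AnalysisReport; raise ValueError if not."""
    data = json.loads(raw)  # json.JSONDecodeError is a subclass of ValueError
    if not isinstance(data, dict) or set(data) != REPORT_KEYS:
        raise ValueError(f"top-level keys must be {sorted(REPORT_KEYS)}")
    if data["confidence"] not in {"low", "medium", "high"}:
        raise ValueError(f"invalid confidence: {data['confidence']!r}")
    if not isinstance(data["findings"], list):
        raise ValueError("findings must be a list")
    findings = []
    for item in data["findings"]:
        if not isinstance(item, dict) or set(item) != FINDING_KEYS:
            raise ValueError(f"each finding needs keys {sorted(FINDING_KEYS)}")
        try:
            value = float(item["value"])
        except (TypeError, ValueError) as exc:
            raise ValueError(f"non-numeric value: {item['value']!r}") from exc
        findings.append(Finding(str(item["metric"]), value, str(item["comment"])))
    return AnalysisReport(str(data["title"]), findings, data["confidence"])


# Hand-written stand-in for a model reply that followed the schema.
raw_response = """{
  "title": "Weekly sales check",
  "findings": [{"metric": "conversion_rate", "value": 0.031, "comment": "Down from last week"}],
  "confidence": "medium"
}"""
report = parse_report(raw_response)
print(report.findings[0].metric, report.findings[0].value)  # → conversion_rate 0.031
```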

Additionally, o1 models support tool calling: the model can request calls to external tools or functions, which your application executes and returns results for. This extends the model's capabilities in complex workflows, such as automating data processing tasks or integrating with other software systems.
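
A minimal sketch of the application side of tool calling. The tool definition follows the shape of the `tools` parameter in OpenAI's Chat Completions API (a JSON Schema under `function.parameters`); the model's call arrives as a tool name plus a JSON-encoded arguments string, which your code executes and answers with a `tool` message. `summarize_column`, the call id, and the values are hypothetical, and no API request is made here.

```python
import json
import statistics


def summarize_column(values: list[float]) -> dict:
    """Hypothetical local tool: basic statistics for a list of numbers."""
    return {"count": len(values), "mean": statistics.mean(values), "max": max(values)}


# Tool definition in the Chat Completions "tools" format.
TOOLS = [{
    "type": "function",
    "function": {
        "name": "summarize_column",
        "description": "Compute count, mean and max of a numeric column.",
        "parameters": {
            "type": "object",
            "properties": {"values": {"type": "array", "items": {"type": "number"}}},
            "required": ["values"],
        },
    },
}]

REGISTRY = {"summarize_column": summarize_column}


def run_tool_call(call_id: str, name: str, arguments: str) -> dict:
    """Execute one requested tool call and package the result as a 'tool' message."""
    if name not in REGISTRY:
        raise KeyError(f"unknown tool: {name}")
    result = REGISTRY[name](**json.loads(arguments))
    return {"role": "tool", "tool_call_id": call_id, "content": json.dumps(result)}


# Simulated call, shaped like what a model returns (id and values are made up).
message = run_tool_call("call_123", "summarize_column", '{"values": [4, 8, 15, 16, 23, 42]}')
print(message["content"])
```

The returned message would be appended to the conversation so the model can continue reasoning with the tool's result.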

Key Use Cases for Reasoning Models

Reasoning models excel in a variety of applications, thanks to their enhanced reasoning capabilities. Some of the most promising use cases include:

- Coding: These models perform exceptionally well on complex coding tasks, including generating or editing entire files in a single pass (one-shot). They post strong results on coding benchmarks such as SWE-bench.

- Planning and Agents: Reasoning models are ideal for upfront planning in agentic workflows, laying out subsequent steps for execution by other models or processes.

- Reflection over Sources: They can perform deep analysis and extract insights from large amounts of text, such as meeting notes or documents.

- Data Analysis: These models are adept at analyzing complex datasets, with applications ranging from blood test analysis to medical reasoning.

- Research and Report Generation: Their strong reasoning capabilities make them well suited to in-depth research and the generation of comprehensive reports.

- LLMs as Judges/Evaluators: Leveraging their reasoning capabilities, these models can evaluate the output of other models or processes.

- Cognitive Layer over News Feeds: They can filter and analyze news and social media data to identify trends and relevant information, as in the o1 Trend Finder project.
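
For the judge/evaluator use case, a minimal sketch that applies the "what"-focused prompting described earlier: the prompt states the goal, a strict JSON return format, and the rubric, and the judge's reply is validated before it is trusted. The rubric, the JSON shape, and the judge reply are hand-written illustrations, not output from a real model.

```python
import json


def build_judge_prompt(task: str, candidate: str, rubric: list[str]) -> str:
    """Ask a judge model for per-criterion scores in a fixed JSON shape."""
    criteria = "\n".join(f"{i}. {c}" for i, c in enumerate(rubric, start=1))
    return (
        "Goal: grade the candidate answer against each criterion.\n"
        'Return format: JSON like {"scores": [1-5 per criterion], "verdict": "pass" | "fail"}.\n\n'
        f"Task:\n{task}\n\nCriteria:\n{criteria}\n\nCandidate answer:\n{candidate}\n"
    )


def parse_verdict(raw: str, n_criteria: int) -> dict:
    """Validate the judge's JSON reply and add the mean score."""
    data = json.loads(raw)
    scores = data.get("scores")
    if not isinstance(scores, list) or len(scores) != n_criteria:
        raise ValueError(f"expected {n_criteria} scores, got {scores!r}")
    if not all(isinstance(s, int) and 1 <= s <= 5 for s in scores):
        raise ValueError(f"scores must be integers from 1 to 5: {scores!r}")
    if data.get("verdict") not in ("pass", "fail"):
        raise ValueError(f"invalid verdict: {data.get('verdict')!r}")
    return {"scores": scores, "verdict": data["verdict"], "mean": sum(scores) / len(scores)}


rubric = ["Answers the question asked", "Uses only the provided sources", "Is concise"]
prompt = build_judge_prompt("Summarize the meeting notes.", "The team agreed to ship on Friday.", rubric)
judge_reply = '{"scores": [5, 4, 3], "verdict": "pass"}'  # hand-written stand-in for a judge reply
result = parse_verdict(judge_reply, n_criteria=len(rubric))
print(result["mean"])  # → 4.0
```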

For example, in a research setting, a reasoning model could be used to generate a detailed report on a specific topic, analyzing various sources and synthesizing the information into a coherent document.

In another case, a reasoning model might be employed to plan a complex project, outlining the necessary steps and resources required for successful execution.
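
For planning, a common pattern is plan-then-execute: the reasoning model writes the plan once, and cheaper models or ordinary code carry out each step. A minimal sketch, assuming the planner returns a JSON list of steps with `id`, `action`, and `depends_on` fields (a format invented here). The plan is hand-written and the handlers are hypothetical stand-ins for real executors.

```python
import json
from typing import Callable

# Stand-in for a reasoning model's planning output (hand-written, not real model output).
PLAN_JSON = """[
  {"id": 1, "action": "gather_requirements", "depends_on": []},
  {"id": 2, "action": "estimate_budget", "depends_on": [1]},
  {"id": 3, "action": "draft_schedule", "depends_on": [1]},
  {"id": 4, "action": "write_summary", "depends_on": [2, 3]}
]"""


def execute_plan(plan_json: str, handlers: dict[str, Callable[[], str]]) -> list[str]:
    """Run plan steps in an order that respects dependencies; return a result log."""
    steps = {s["id"]: s for s in json.loads(plan_json)}
    done: set[int] = set()
    log: list[str] = []
    while len(done) < len(steps):
        # Every step whose dependencies are all finished can run in this round.
        ready = [s for sid, s in sorted(steps.items())
                 if sid not in done and all(d in done for d in s["depends_on"])]
        if not ready:
            raise ValueError("plan has a cycle or a missing dependency")
        for step in ready:
            log.append(f"{step['id']}:{handlers[step['action']]()}")
            done.add(step["id"])
    return log


# Hypothetical executors; in practice each might call a cheaper model or a script.
handlers = {name: (lambda n=name: f"{n} done") for name in
            ["gather_requirements", "estimate_budget", "draft_schedule", "write_summary"]}
for line in execute_plan(PLAN_JSON, handlers):
    print(line)
```

Validating the plan's dependency structure before executing it is a cheap safeguard, since the planner's output is not guaranteed to be well formed.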

Distinguishing Chat Models from Reasoning Models

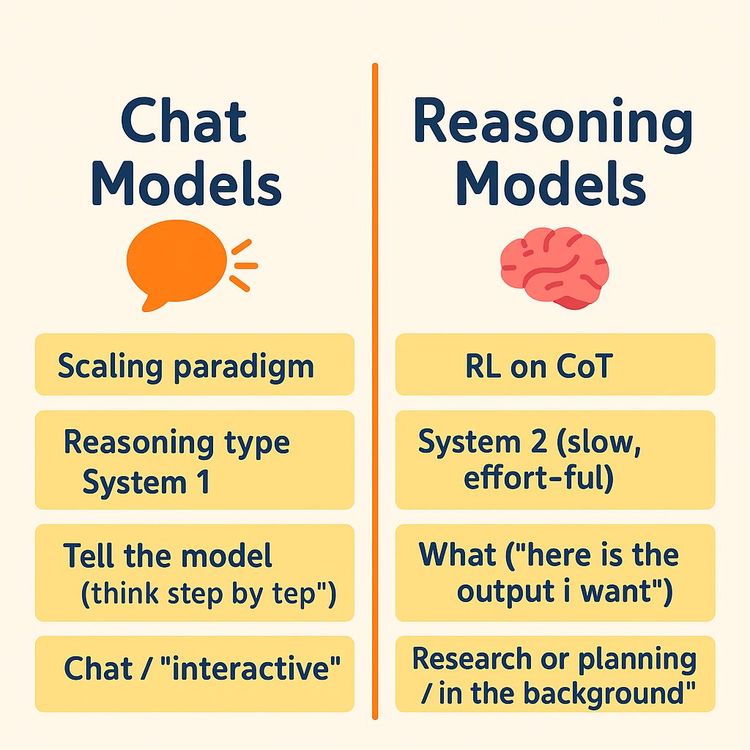

It's essential to understand the differences between chat models and reasoning models to leverage their unique strengths effectively. The course concludes by contrasting these models across several dimensions:

- Scaling Paradigm: Chat models use next token prediction, while reasoning models use RL over CoT.

- Reasoning Type: Chat models are fast and intuitive ("system one"), whereas reasoning models are slow and effortful ("system two").

- Prompting: Chat models are instructed on "how" to think, while reasoning models are told "what" is wanted.

- Interaction Mode: Chat models are interactive, while reasoning models perform deeper tasks with less direct interaction.

- Best Suited For: Chat models are ideal for interactive conversations, whereas reasoning models are better for research, planning, and background agents.

For instance, a chat model might be used for customer support, engaging in a real-time conversation with users, while a reasoning model could be employed for generating a detailed business plan, requiring more in-depth analysis and reasoning.
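
The contrasts above can be turned into a simple routing rule. This is a hypothetical heuristic, not a rule from the course: the `Task` fields and the 30-second threshold are illustrative assumptions you would tune to your own latency and cost constraints.

```python
from dataclasses import dataclass


@dataclass
class Task:
    interactive: bool        # is a user waiting in a live conversation?
    latency_budget_s: float  # how long the caller can wait for a response
    multi_step: bool         # needs planning, deep analysis, or verification?


def choose_model_kind(task: Task, min_reasoning_budget_s: float = 30.0) -> str:
    """Return 'reasoning' for effortful background work, otherwise 'chat'."""
    if task.interactive or task.latency_budget_s < min_reasoning_budget_s:
        return "chat"
    return "reasoning" if task.multi_step else "chat"


support_chat = Task(interactive=True, latency_budget_s=2.0, multi_step=False)
business_plan = Task(interactive=False, latency_budget_s=600.0, multi_step=True)
print(choose_model_kind(support_chat), choose_model_kind(business_plan))  # → chat reasoning
```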

Conclusion

In conclusion, the emergence of AI reasoning models trained with reinforcement learning on Chain of Thought marks a significant advancement in AI capabilities. These models, exemplified by OpenAI's o1 series, offer enhanced reasoning abilities and are particularly well suited to complex, effortful tasks that require more than intuitive next-token prediction.

By understanding the distinct characteristics of these models and adopting appropriate prompting strategies, you can effectively leverage their potential across a wide range of applications, from coding and planning to research and data analysis. The rapid saturation of benchmarks underscores the speed of innovation in this field, making it an exciting area for continued exploration and application.

As you move forward, remember that the thoughtful application of these skills will enable you to harness the full power of AI reasoning models, driving innovation and solving complex problems in ways that were previously unimaginable.

Frequently Asked Questions

Welcome to the FAQ section for the 'Video Course: Understanding and Effectively Using AI Reasoning Models'. This resource is designed to address common questions about AI reasoning models, from foundational concepts to advanced applications. Whether you're new to AI or a seasoned professional, this guide aims to enhance your understanding and application of these innovative models.

1. What is the fundamental difference in the scaling paradigm between traditional large language models (LLMs) and these new "reasoning models"?

Traditional LLMs primarily scale based on "next word prediction": models are trained to predict the next word in a sequence, which helps them learn grammar, world knowledge, and some reasoning as they grow in size and data. Reasoning models instead use reinforcement learning on chains of thought (CoT): they are trained on datasets with correct answers and rewarded for reaching correct solutions through step-by-step reasoning.

2. How does "Chain of Thought" prompting help LLMs solve more complex problems, and how does it relate to "system one" and "system two" thinking?

"Chain of Thought" prompting encourages LLMs to explicitly generate intermediate steps in problem-solving, akin to human thought processes for complex tasks. This method shifts the model from fast, intuitive "system one" thinking to more deliberate "system two" reasoning, allowing it to tackle problems that require deeper analysis.

3. What is the key mechanism behind the improved reasoning capabilities of these new models, particularly concerning reinforcement learning?

The improved reasoning capabilities stem from training models with reinforcement learning on data with explicitly correct answers. Models generate multiple reasoning pathways, and a grader verifies whether each one reaches the correct answer. Correct answers earn rewards, which adjust the model's internal parameters to favor accurate reasoning.

4. Why are benchmarks becoming saturated more quickly, and what does this tell us about the progress of reasoning models?

Benchmarks are saturating because reasoning models rapidly achieve high performance on them, indicating significant advancements in problem-solving capabilities. This suggests that we are still early in the scaling curve for these models, with potential for further improvements.

5. How should one approach prompting these new reasoning models (like the o1 family) differently compared to traditional chat models?

With reasoning models, focus on what you want as the output, providing a clear goal and context, rather than instructing on how to think. This contrasts with chat models, where step-by-step instructions are common.

6. What are some key use cases where these reasoning models excel, and why are they particularly well-suited for these applications?

Reasoning models excel in complex coding tasks, planning workflows, deep information analysis, data diagnostics, research, and report generation. They are well-suited for these tasks due to their ability to perform effortful reasoning and produce high-quality outputs.

7. How does interacting with a reasoning model differ from a chat model in terms of interaction style and expectations around latency?

Chat models are interactive and quick, suitable for back-and-forth dialogue. Reasoning models handle deeper tasks autonomously, with higher latency due to extensive internal processing, focusing on high-quality outputs rather than immediate interaction.

8. If I'm already using other large language models, when might it be beneficial to consider integrating one of these new reasoning models into my applications or workflows?

Consider integrating reasoning models for tasks requiring high-quality reasoning, structured outputs, or where latency is less critical than accuracy, such as complex coding, data analysis, planning, or high-quality evaluations.

9. What is next word prediction, and why has it been a successful scaling paradigm for language models?

Next word prediction involves training models to predict the next token in a sequence, enabling them to learn diverse skills like grammar, world knowledge, and reasoning. Its success lies in its ability to facilitate multitask learning, improving model capabilities as they scale.

10. What is the phenomenon of emergence in language models, and can you provide an example?

Emergence refers to unexpected capabilities appearing in language models as they scale. For instance, a model might develop the ability to translate languages without explicit training, showcasing the unpredictable yet powerful nature of scaling.

11. How does the analogy of "System 1" and "System 2" thinking help in understanding AI reasoning models?

"System 1" represents fast, intuitive thinking, akin to next word prediction, while "System 2" involves slow, deliberate reasoning, similar to CoT prompting. This analogy helps illustrate the different processing styles and applications of chat versus reasoning models.

12. What are the key components involved in scaling reinforcement learning with Chain of Thought?

Key components include training data with correct answers, a model capable of generating solutions, and a grader to verify output correctness. Reinforcement learning rewards correct outputs, adjusting model weights to improve reasoning.

13. What does the saturation of benchmarks signify about AI model progress?

Saturation indicates that models are mastering evaluation metrics quickly, highlighting rapid advancements in AI capabilities. It suggests that benchmarks may need updating to effectively measure further progress.

14. Why is it important to tailor prompting strategies to different types of language models?

Tailored prompting ensures models operate optimally, enhancing performance and output quality. For example, reasoning models benefit from clear goals and context, while chat models thrive on step-by-step guidance.

15. How does the "reasoning effort" parameter affect the behavior of reasoning models?

The "reasoning effort" parameter adjusts the depth of reasoning, influencing response speed and quality. Higher effort results in slower, more detailed outputs, suitable for complex tasks requiring thorough analysis.

16. How can reasoning models be used with structured outputs, and why is this valuable?

Reasoning models can generate structured outputs by adhering to predefined schemas, ensuring data is machine-readable. This is valuable for applications needing precise data formats, enhancing integration with other systems.

17. Beyond coding, where else can AI reasoning models be particularly effective?

AI reasoning models excel in planning workflows, deep data analysis, research, and generating comprehensive reports. Their ability to perform complex reasoning makes them ideal for strategic planning and insightful analysis.

18. What are the key differences in interaction modes between chat and reasoning models?

Chat models are interactive and context-driven, ideal for quick exchanges. Reasoning models focus on in-depth tasks, operating autonomously with higher latency, making them suitable for background processing and complex problem-solving.

19. How have scaling paradigms evolved in language models?

Scaling has shifted from next word prediction to reinforcement learning with CoT, enhancing reasoning capabilities. While next word prediction supports multitask learning, CoT focuses on generating step-by-step solutions, offering deeper insights.

20. What ethical considerations arise with the widespread adoption of AI reasoning models?

Ethical considerations include data privacy, bias in model outputs, and the potential impact on jobs. Ensuring transparency, fairness, and accountability in AI deployment is crucial to address these challenges responsibly.

21. What practical challenges might businesses face when implementing AI reasoning models?

Challenges include integrating models with existing systems, managing computational resources, and ensuring model outputs align with business goals. Addressing these requires strategic planning and technical expertise.

22. What are the future implications of AI reasoning models for various industries?

AI reasoning models can transform industries by automating complex tasks, enhancing decision-making, and improving efficiency. However, their adoption requires addressing ethical concerns and ensuring equitable access to technology.

23. How should AI reasoning models be evaluated to ensure they meet business needs?

Evaluation should focus on accuracy, efficiency, and alignment with business objectives. Regular testing against updated benchmarks and real-world scenarios ensures models remain effective and relevant.
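
A minimal sketch of such testing, assuming exact-match scoring fits your cases (many real tasks need a rubric or an LLM judge instead): the harness measures accuracy and mean latency for any function that maps a prompt to an answer. `fake_model` is a hypothetical offline stand-in; in practice it would wrap an API call to the model under evaluation.

```python
import time
from typing import Callable


def evaluate(model_fn: Callable[[str], str], cases: list[tuple[str, str]]) -> dict[str, float]:
    """Score model_fn on (input, expected) pairs: exact-match accuracy and mean latency."""
    if not cases:
        raise ValueError("need at least one test case")
    correct = 0
    latencies = []
    for prompt, expected in cases:
        start = time.perf_counter()
        output = model_fn(prompt)
        latencies.append(time.perf_counter() - start)
        correct += int(output.strip() == expected.strip())
    return {"accuracy": correct / len(cases), "mean_latency_s": sum(latencies) / len(latencies)}


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in so the harness runs offline."""
    return {"2+2": "4", "capital of France": "Paris"}.get(prompt, "unknown")


cases = [("2+2", "4"), ("capital of France", "Paris"), ("17*24", "408")]
results = evaluate(fake_model, cases)
print(results["accuracy"])
```

Running the same harness against a chat model and a reasoning model on your own cases makes the accuracy-versus-latency trade-off visible in numbers rather than impressions.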

24. What strategies can businesses use to integrate AI reasoning models effectively?

Businesses should start with pilot projects, gradually scaling up as they refine their understanding of model capabilities. Collaboration with AI experts and continuous learning are key to successful integration.

25. What resources are available for training employees on AI reasoning models?

Resources include online courses, workshops, and collaborations with AI research institutions. Investing in employee training ensures businesses maximize the potential of AI reasoning models in their operations.

Certification

About the Certification

Show the world you have AI skills—demonstrate expertise in applying advanced reasoning models to real-world scenarios. This certification establishes your practical knowledge, setting you apart in today’s data-driven landscape.

Official Certification

Upon successful completion of the "Certification: Proficient Application of AI Reasoning Models in Practice", you will receive a verifiable digital certificate. This certificate demonstrates your expertise in the subject matter covered in this course.

Benefits of Certification

- Enhance your professional credibility and stand out in the job market.

- Validate your skills and knowledge in cutting-edge AI technologies.

- Unlock new career opportunities in the rapidly growing AI field.

- Share your achievement on your resume, LinkedIn, and other professional platforms.

How to complete your certification successfully?

To earn your certification, you’ll need to complete all video lessons, study the guide carefully, and review the FAQ. After that, you’ll be prepared to pass the certification requirements.
