Video Course: Vector Search RAG Tutorial – Combine Your Data with LLMs with Advanced Search

Enhance AI applications with our course on vector search and Retrieval Augmented Generation. Learn to integrate semantic search and Large Language Models for better data interaction.

Related Certification: Certification: Advanced Vector Search & RAG Integration with LLMs


What You Will Learn

- Understand vector embeddings and semantic search

- Generate embeddings using Hugging Face and OpenAI

- Store and index vectors with MongoDB Atlas Vector Search

- Build RAG pipelines using LangChain and LLMs

- Develop projects: semantic movie search, QA system, and RAG chatbot

Study Guide

Introduction

Welcome to the comprehensive guide on "Vector Search RAG Tutorial – Combine Your Data with LLMs with Advanced Search." This course is designed to provide you with an in-depth understanding of how to enhance the capabilities of Large Language Models (LLMs) by integrating them with vector search and Retrieval Augmented Generation (RAG). By the end of this course, you'll be equipped with the knowledge to implement advanced search functionalities that allow LLMs to retrieve and generate information grounded in relevant data. This tutorial is valuable for anyone looking to build AI-driven applications that require semantic understanding and retrieval of information.

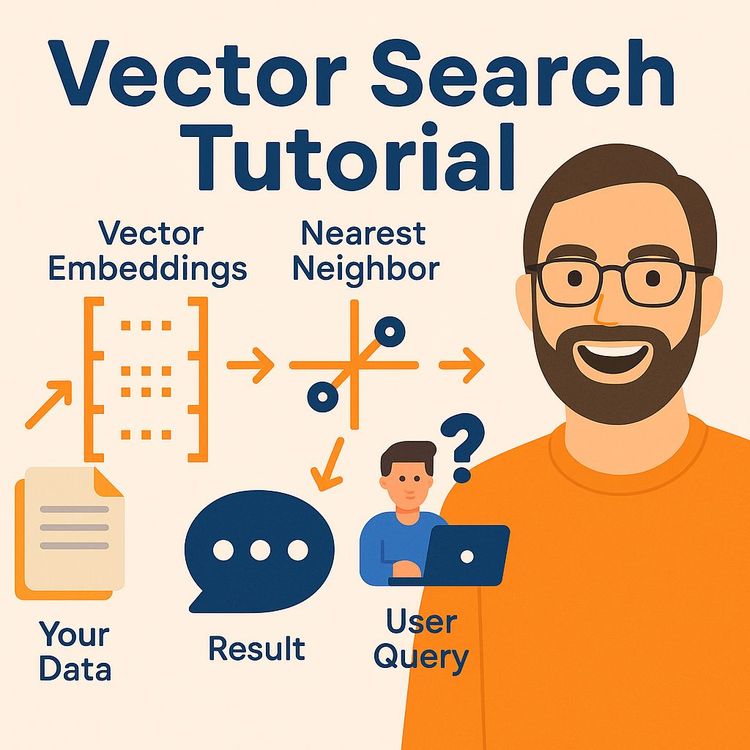

Understanding Vector Embeddings

Vector embeddings are the cornerstone of semantic search.

They represent data—such as words, images, or documents—as vectors (lists of numbers) in a high-dimensional space. The beauty of vector embeddings lies in their ability to map semantically similar items close to each other in this space. For instance, in a movie database, the plot of "Star Wars" might be closer to "Guardians of the Galaxy" than to "Titanic" due to thematic similarities.
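To make "close to each other" concrete, here is a toy sketch using invented 3-dimensional vectors. Real embedding models produce hundreds or thousands of dimensions, and these numbers are made up purely for illustration. Cosine similarity, one common closeness measure, gives the highest score to the pair of vectors pointing in nearly the same direction.

```python
import math

# Invented 3-dimensional "embeddings", for illustration only.
# Real embedding models output hundreds or thousands of dimensions.
PLOT_VECTORS = {
    "Star Wars": [0.9, 0.8, 0.1],
    "Guardians of the Galaxy": [0.85, 0.75, 0.2],
    "Titanic": [0.1, 0.2, 0.95],
}


def cosine_similarity(a, b):
    """Cosine of the angle between vectors a and b (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


if __name__ == "__main__":
    star_wars = PLOT_VECTORS["Star Wars"]
    for title in ("Guardians of the Galaxy", "Titanic"):
        score = cosine_similarity(star_wars, PLOT_VECTORS[title])
        print(f"Star Wars vs {title}: {score:.3f}")
```

With these toy numbers the Star Wars / Guardians of the Galaxy pair scores far higher than the Star Wars / Titanic pair.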

Creating these embeddings involves using complex machine learning models trained on large datasets. These models learn to capture the essence of data items in numerical form. For practical applications, consider using pre-trained models like those from Hugging Face or OpenAI, which offer robust embeddings for various data types.

Vector Search: Searching by Meaning

Vector search is a powerful technique for retrieving information based on semantic similarity.

Unlike traditional keyword-based search, vector search evaluates the meaning and context of a query. By converting both the query and the database items into vector embeddings, it compares these vectors to find the most relevant results.

For example, if you search for "movies about space adventures," a vector search engine might return films like "Interstellar" or "The Martian," even if their stored descriptions never use those exact words. This capability is crucial for applications requiring natural language processing, where user queries may not align perfectly with stored data.
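The comparison step can be sketched as an exact, brute-force nearest-neighbour search: score every stored vector against the query vector and keep the k best. This is a simplified illustration with hypothetical 2-d vectors held in memory; systems such as Atlas Vector Search use approximate nearest-neighbour indexes rather than scanning every item.

```python
import heapq
import math

# Hypothetical 2-d embeddings: axis 0 ~ "space", axis 1 ~ "romance".
MOVIES = [
    ("Interstellar", [0.95, 0.3]),
    ("The Martian", [0.9, 0.1]),
    ("The Notebook", [0.05, 0.99]),
]


def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def top_k(query_vec, items, k=2):
    """Exact nearest-neighbour search: score every item, keep the k best.

    items is a list of (title, vector) pairs; returns (score, title) pairs,
    highest score first. Cost grows linearly with the number of items.
    """
    scored = [(cosine_similarity(query_vec, vec), title) for title, vec in items]
    return heapq.nlargest(k, scored)


if __name__ == "__main__":
    # Stand-in for the embedding of "movies about space adventures".
    query = [1.0, 0.0]
    for score, title in top_k(query, MOVIES):
        print(f"{score:.3f}  {title}")
```

The linear scan is what makes exact search expensive on large collections, which is the motivation for ANN indexes.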

MongoDB Atlas Vector Search: A Practical Implementation

MongoDB Atlas Vector Search is the platform this course uses to implement vector search in AI applications.

It allows you to store and query vector embeddings alongside traditional data, enabling semantic similarity searches using approximate nearest neighbours (ANN) algorithms. This integration facilitates the development of AI-powered applications that leverage the meaning of data.

For instance, in a semantic movie search application, you could store vector embeddings of movie plots in MongoDB Atlas. When a user queries the database, the system uses Atlas Vector Search to find semantically similar movies, enhancing user experience with more relevant results.
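With PyMongo, an Atlas Vector Search query is an aggregation pipeline. The sketch below builds a $vectorSearch stage (the current Atlas syntax; older course material may use the earlier $search/knnBeta form). The index name PlotSemanticSearch and the field name plot_embedding_hf are placeholders that must match your own index and embedding field. numCandidates sets how many ANN candidates are considered before the top limit results are returned.

```python
def build_vector_search_pipeline(query_vector, index="PlotSemanticSearch",
                                 path="plot_embedding_hf", limit=4,
                                 num_candidates=100):
    """Build an aggregation pipeline for an Atlas $vectorSearch query.

    index and path are placeholder names; they must match the vector index
    and the embedding field in your own collection.
    """
    if num_candidates < limit:
        raise ValueError("numCandidates should be >= limit")
    return [
        {
            "$vectorSearch": {
                "index": index,
                "path": path,
                "queryVector": list(query_vector),
                "numCandidates": num_candidates,
                "limit": limit,
            }
        },
        # Keep only the fields to display, plus the similarity score.
        {"$project": {"_id": 0, "title": 1, "plot": 1,
                      "score": {"$meta": "vectorSearchScore"}}},
    ]


# Usage against a live cluster (not run here; needs pymongo and credentials),
# e.g. with the sample_mflix.movies collection from Atlas's sample data:
#   from pymongo import MongoClient
#   movies = MongoClient(uri)["sample_mflix"]["movies"]
#   for doc in movies.aggregate(build_vector_search_pipeline(query_embedding)):
#       print(doc["title"], doc["score"])
```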

Project 1: Semantic Movie Search

The first project in our course demonstrates building a semantic search feature for movies. It uses Python, a machine learning model for embedding generation (all-MiniLM-L6-v2 from Hugging Face), and Atlas Vector Search.

Here's how you can implement it:

- Connect to a MongoDB database containing movie data.

- Use the Hugging Face Inference API to generate vector embeddings for movie plots.

- Store these embeddings in the MongoDB database alongside the movie data.

- Create a vector search index in Atlas to enable semantic queries on the plot embeddings.

- Perform a vector search based on a natural language query, like "imaginary characters from outer space at war," to retrieve relevant movies.
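The embedding, storage, and indexing steps can be sketched as follows. The embed parameter stands in for whatever embedding call you use (for example the Hugging Face Inference API); it is passed in so the sketch runs offline. The field name plot_embedding_hf is a hypothetical placeholder, and the index definition follows the current Atlas vectorSearch index format. 384 is the output dimensionality of all-MiniLM-L6-v2; the cosine metric is an assumption you may change.

```python
def add_embeddings(docs, embed, source_field="plot",
                   target_field="plot_embedding_hf"):
    """Return copies of docs with an embedding attached where text exists.

    embed: callable str -> list[float]. Documents without text in
    source_field are returned unchanged.
    """
    updated = []
    for doc in docs:
        text = doc.get(source_field)
        if text:
            doc = {**doc, target_field: embed(text)}
        updated.append(doc)
    return updated


def vector_index_definition(path="plot_embedding_hf", num_dimensions=384,
                            similarity="cosine"):
    """Atlas Vector Search index definition (vectorSearch index type).

    num_dimensions must equal the length of the stored vectors
    (384 for all-MiniLM-L6-v2).
    """
    return {
        "fields": [
            {
                "type": "vector",
                "path": path,
                "numDimensions": num_dimensions,
                "similarity": similarity,
            }
        ]
    }


# Persisting to Atlas (not run here), for each updated document:
#   collection.replace_one({"_id": doc["_id"]}, doc)
# then create the index, e.g. in the Atlas UI, using the definition above.
```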

This project showcases the practical application of vector search in enhancing user interactions with a database of complex data.

Limitations of Large Language Models

While LLMs are powerful, they have inherent limitations:

- Hallucinations: LLMs may generate factually inaccurate or ungrounded information.

- Knowledge Cut-off: LLMs are trained on static datasets, limiting their knowledge to the data's time horizon.

- Lack of Access to Local/Private Data: LLMs have no built-in access to a user's local or private data sources, such as internal documents.

- Token Limits: LLMs have restrictions on the amount of text they can process in a single interaction.

Understanding these limitations is crucial for developing applications that rely on LLMs for accurate and relevant responses.
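Token limits mean a RAG application must decide how much retrieved text fits into the prompt. A minimal sketch, assuming a crude estimate of one token per whitespace-separated word; real tokenizers count differently (for OpenAI models, the tiktoken library gives exact counts). The helper names are hypothetical.

```python
def estimate_tokens(text):
    """Crude token estimate: one token per whitespace-separated word.

    Real tokenizers usually produce more tokens than words, so leave headroom.
    """
    return len(text.split())


def fit_to_budget(chunks, max_tokens):
    """Keep chunks in rank order (best first) until the next would overflow.

    Stops at the first chunk that does not fit, so lower-ranked chunks are
    never included ahead of a higher-ranked one.
    """
    selected, used = [], 0
    for chunk in chunks:
        cost = estimate_tokens(chunk)
        if used + cost > max_tokens:
            break
        selected.append(chunk)
        used += cost
    return selected
```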

Retrieval Augmented Generation (RAG): Bridging the Gap

RAG is an architecture designed to address the limitations of LLMs.

It combines information retrieval with text generation to provide more informed and accurate responses. The process involves using vector search to retrieve relevant documents or data based on the user's query and providing these retrieved documents as context to the LLM.
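The "provide retrieved documents as context" step usually comes down to assembling a prompt. A minimal sketch; the instruction wording and numbered-passage layout are illustrative assumptions, not a format the course prescribes.

```python
def build_rag_prompt(question, retrieved_docs):
    """Combine retrieved passages and the user's question into one prompt.

    Passages are numbered so the model (or a reader) can refer to them.
    """
    context = "\n\n".join(
        f"[{i}] {doc}" for i, doc in enumerate(retrieved_docs, start=1)
    )
    return (
        "Answer the question using only the context below. "
        "If the context does not contain the answer, say you don't know.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )
```

Telling the model to admit when the context lacks an answer is one common way to discourage it from falling back on ungrounded guesses.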

For example, if a user queries an AI system about recent developments in AI technology, the system can use RAG to retrieve the latest articles or papers as context, allowing the LLM to generate a response grounded in current information.

RAG helps minimize hallucinations, provides access to more up-to-date information, and allows LLMs to leverage external knowledge bases.

Project 2: Question Answering with RAG

The second project focuses on building a question-answering application using the RAG architecture and Atlas Vector Search against custom data, such as text files on various topics.

Key technologies used include:

- LangChain: A framework for simplifying the creation of LLM applications by providing modular components and integrations.

- OpenAI API: Used for both embedding generation and accessing large language models (e.g., GPT-3.5 Turbo).

- Gradio: A Python library for building web interfaces for machine learning applications.

The project demonstrates loading data, generating embeddings using the OpenAI API, storing them in Atlas Vector Search, creating a vector search index, and then using LangChain to build a RAG pipeline. This pipeline retrieves relevant documents based on a user's question and provides them as context to the OpenAI LLM to generate an answer. The interface built with Gradio allows users to interact with the question-answering system.

Project 3: RAG-Powered Chatbot

The final project involves modifying a ChatGPT clone to answer questions about contributing to the freeCodeCamp.org curriculum using the official documentation. This project further demonstrates a real-world application of RAG with a larger dataset (freeCodeCamp documentation in Markdown format).

Here's the process:

- Clone a repository containing the ChatGPT clone and the freeCodeCamp documentation.

- Create embeddings for the freeCodeCamp documentation using the OpenAI API and LangChain.

- Store these embeddings in MongoDB Atlas.

- Create a vector search index on the embeddings.

- Modify the API routes of the ChatGPT clone to incorporate vector search.

- When a user asks a question, the system uses vector search to find relevant sections of the freeCodeCamp documentation and provides this context to the LLM to generate an answer grounded in the official information.
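Documentation sets like this are usually split into smaller pieces before embedding, so that each vector covers one topic. The steps above do not spell out how the Markdown is divided; as a framework-free illustration (not the course's code), here is a minimal splitter that starts a new chunk at each Markdown heading. Splitting on headings is one assumption among several; fixed-size windows with overlap are another common choice.

```python
import re

# A Markdown ATX heading: 1-6 '#' characters followed by whitespace.
HEADING = re.compile(r"^#{1,6}\s+(.*)$")


def split_markdown_by_headings(markdown: str) -> list:
    """Split Markdown into chunks, starting a new chunk at each heading.

    Returns dicts with the heading text ("" for content before the first
    heading) and the body text. Sections with an empty body are dropped,
    and '#' lines inside fenced code blocks are not special-cased here.
    """
    chunks = []
    heading, body = "", []

    def flush():
        text = "\n".join(body).strip()
        if text:
            chunks.append({"heading": heading, "text": text})

    for line in markdown.splitlines():
        match = HEADING.match(line)
        if match:
            flush()
            heading, body = match.group(1).strip(), []
        else:
            body.append(line)
    flush()
    return chunks
```

Prepending each chunk's heading to its text before embedding is a common way to keep section context, though that too is a design choice.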

Key Takeaways

Throughout this course, we've explored the power of vector search and embeddings in enabling semantic understanding and retrieval of information. By integrating vector search with LLMs through the RAG architecture, we've seen how these technologies significantly enhance the capabilities of AI applications, providing access to external knowledge and mitigating issues like hallucinations and knowledge cut-offs.

MongoDB Atlas Vector Search provides a scalable and integrated platform for implementing vector search in AI applications. Frameworks like LangChain simplify the development of complex LLM applications by offering modular components for tasks like data loading, embedding generation, vector storage, retrieval, and question answering.

Building AI-powered applications that leverage vector search and RAG opens up numerous possibilities for creating more informative, accurate, and context-aware experiences.

Conclusion

Congratulations on completing the "Vector Search RAG Tutorial – Combine Your Data with LLMs with Advanced Search" course. You've gained a comprehensive understanding of how to implement vector search and RAG to enhance the capabilities of LLMs. These skills are invaluable for developing AI-driven applications that require semantic understanding and retrieval of information.

By thoughtfully applying these techniques, you can create AI systems that provide more accurate, relevant, and context-aware responses, ultimately delivering better user experiences. As you continue to explore and develop AI applications, remember the importance of grounding your models in real data and leveraging the power of vector search to unlock new possibilities.

Podcast

There'll soon be a podcast available for this course.

Frequently Asked Questions

Welcome to the FAQ section for the 'Video Course: Vector Search RAG Tutorial – Combine Your Data with LLMs with Advanced Search'. This resource is designed to address common questions about integrating vector search and Retrieval Augmented Generation (RAG) with large language models (LLMs). Whether you're a beginner exploring these technologies or an experienced practitioner, you'll find answers that clarify concepts, address challenges, and provide practical implementation advice.

What are vector embeddings and why are they useful for working with large language models (LLMs)?

Vector embeddings are numerical representations of data items (like words, sentences, or documents) in which similar items are located close to each other in a multi-dimensional space. Each item is described by a list of numbers (a vector), and these vectors let computers compare items by their semantic relationships. For LLM applications, embeddings are crucial because they enable semantic search: the application can find information that matches the meaning of a query, rather than just its keywords, and pass it to the LLM. This helps in tasks like retrieving relevant context for question answering or finding similar content in a large dataset.

What is vector search and how does it differ from traditional keyword-based search?

Vector search is a method of finding information based on the meaning and context of a query, rather than exact keyword matches. It works by converting both the search query and the items in a database into vector embeddings. The search then compares these vectors to find items that are most similar to the query vector, using mathematical measures like cosine similarity or dot product. Traditional keyword-based search, on the other hand, looks for the presence of specific words in a document. Vector search, or semantic search, can retrieve more relevant results even if the exact keywords are not present, as long as the meaning is similar.

What is MongoDB Atlas Vector Search and how does it facilitate building AI-powered applications with LLMs?

MongoDB Atlas Vector Search is a feature within the MongoDB Atlas data platform that allows users to store and query vector embeddings alongside their other data. It enables performing semantic similarity searches on this vector data using approximate nearest neighbours (ANN) algorithms, making it efficient to find relevant information in large datasets. By integrating Atlas Vector Search with LLMs, developers can build AI-powered applications that can understand natural language queries, retrieve relevant context from their own data, and generate more informed and accurate responses. Atlas provides a platform to manage both the application data and the vector embeddings, simplifying the development process.

What is Retrieval Augmented Generation (RAG) and how does it address the limitations of large language models?

Retrieval Augmented Generation (RAG) is an architecture designed to enhance the capabilities of LLMs by grounding their responses in external, factual information. LLMs have limitations such as the potential for generating inaccurate information (hallucinations), having outdated knowledge due to their static training data, and lacking access to a user's private data. RAG addresses these issues by first using vector search to retrieve relevant documents based on a user's query. These retrieved documents are then provided as context to the LLM, which uses this information to generate a more accurate and informed response. This helps minimise hallucinations by grounding the answers in real data, allows the model to access up-to-date information, and enables the use of external knowledge bases.

What is LangChain and how does it simplify the development of LLM applications?

LangChain is a framework designed to streamline the creation of applications powered by LLMs. It provides a modular and standardised interface for building 'chains' of components that work together to process language tasks. LangChain offers integrations with various tools (including vector stores like MongoDB Atlas Vector Search and LLM providers like OpenAI), standardises interactions, and provides end-to-end chains for common use cases like question answering. This simplifies complex application development, debugging, and maintenance by allowing developers to easily connect different components for tasks such as understanding queries, retrieving relevant data, and generating responses.

How can I use my own data to build a question-answering system using vector search and RAG?

To build a question-answering system with your own data, you would typically follow these steps:

Prepare your data: Organise your data into documents or chunks of text.

Create vector embeddings: Use an embedding model (like those from OpenAI or Hugging Face) to convert your data into vector embeddings.

Store embeddings: Store these embeddings in a vector database or a vector search index, such as MongoDB Atlas Vector Search, alongside or linked to your original data.

Implement retrieval: When a user asks a question, convert the question into a vector embedding using the same model. Perform a vector search to find the most relevant data based on the similarity of the query embedding to the embeddings in your database.

Generate the answer (RAG): Take the retrieved relevant data and the original question, and feed them to an LLM. The LLM will use the retrieved context to generate a more informed and accurate answer to the user's question. Frameworks like LangChain can help manage this entire process.
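The five steps can be sketched end to end in a few lines, using hypothetical stand-ins: ToyEmbedder (word-count vectors, which capture word overlap rather than meaning) replaces a real embedding model, an in-memory list replaces Atlas Vector Search, and generate is any callable that wraps an LLM. Step 1, preparing the chunks, is the input.

```python
import math
import re
from typing import Callable, List, Tuple


def tokenize(text: str) -> List[str]:
    """Lowercase word tokens (letters and digits only)."""
    return re.findall(r"[a-z0-9]+", text.lower())


class ToyEmbedder:
    """Hypothetical stand-in for a real embedding model.

    Produces bag-of-words count vectors over a vocabulary built from the
    corpus, so it captures word overlap rather than meaning.
    """

    def __init__(self, corpus: List[str]) -> None:
        vocab = sorted({w for doc in corpus for w in tokenize(doc)})
        self.index = {w: i for i, w in enumerate(vocab)}

    def __call__(self, text: str) -> List[float]:
        vec = [0.0] * len(self.index)
        for w in tokenize(text):
            if w in self.index:  # words outside the vocabulary are ignored
                vec[self.index[w]] += 1.0
        return vec


def cosine(a: List[float], b: List[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0  # zero vectors score 0


def answer(question: str, chunks: List[str],
           embed: Callable[[str], List[float]],
           generate: Callable[[str], str], k: int = 2) -> Tuple[str, List[str]]:
    # Steps 2-3: embed each chunk and keep the vectors (in memory here;
    # a real system would store them in a vector index such as Atlas).
    store = [(embed(c), c) for c in chunks]
    # Step 4: embed the question with the SAME model, then rank by similarity.
    q = embed(question)
    ranked = sorted(store, key=lambda item: cosine(q, item[0]), reverse=True)
    context = [c for _, c in ranked[:k]]
    # Step 5: give the LLM the retrieved context plus the original question.
    prompt = "Context:\n" + "\n".join(context) + f"\n\nQuestion: {question}"
    return generate(prompt), context
```

Passing generate=lambda prompt: prompt simply echoes the assembled prompt, which is a convenient way to check retrieval before wiring in a real LLM client.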

What are some practical applications of combining vector search and LLMs with your own data, as demonstrated in this course?

The course demonstrates several practical applications:

Semantic movie search: Using natural language queries to find movies based on similarities in their plots, going beyond simple keyword matching.

Question answering over custom data: Building applications that can answer questions using the information contained within specific documents or datasets (e.g., answering questions about MongoDB features or aerodynamics from provided text files).

Enhanced chatbots: Creating chatbots that can answer questions based on specific documentation or knowledge bases, providing more accurate and contextually relevant responses (e.g., a chatbot answering questions about contributing to the freeCodeCamp.org curriculum).

Sentiment analysis and precise answer retrieval: Using the combined technologies to not only find relevant information but also to summarise, analyse sentiment, and extract precise answers from large volumes of text.

What considerations should I keep in mind when choosing an embedding model and setting up a vector search index?

When selecting an embedding model, consider factors such as the model's ability to capture the semantic meaning of your data, the dimensionality of the embeddings it produces, the cost of using the model (if it's a paid API), and the rate limits if using a free API. For setting up a vector search index, important considerations include:

The field to index: Ensure you are indexing the correct field that contains your vector embeddings.

Dimensionality: Specify the correct number of dimensions of your vectors in the index configuration.

Similarity metric: Choose an appropriate similarity metric (e.g., cosine, dot product, Euclidean) based on the normalisation of your vectors and the type of similarity you want to measure.

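Index type: Use the index type your platform expects for vector fields. In current MongoDB Atlas this is a vectorSearch index whose embedding field has type vector; older Atlas Search indexes used a knnVector ("KNN vector") field mapping instead.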

Performance and scale: Consider the size of your dataset and the performance requirements of your application, as these factors might influence the choice of indexing algorithms and configuration parameters.

How are vector embeddings generated for text data?

Vector embeddings for text data are generated using machine learning models that have been trained on large datasets. These models learn to encode the semantic meaning of the data, transforming it into numerical vectors. Popular services like the Hugging Face Inference API and OpenAI API offer pre-trained models that can be used to generate these embeddings. The process involves inputting text data into the model, which then outputs a vector that represents the semantic content of the input.

Why is vector search important for achieving semantic search?

Vector search is crucial for semantic search because it allows for the comparison of data based on meaning rather than exact keyword matches. By converting both queries and data into vector embeddings, vector search can identify relevant results based on semantic similarity. This capability is essential for applications that require understanding and responding to the intent behind a query, rather than just matching keywords.

What are hallucinations in the context of Large Language Models and how does RAG attempt to mitigate them?

Hallucinations in Large Language Models refer to the generation of factually incorrect or nonsensical information. This occurs when the model produces outputs that are not grounded in its training data or the provided context. RAG attempts to mitigate hallucinations by grounding the LLM's responses in factual information retrieved from a knowledge base using vector search. By providing relevant context, RAG helps ensure that the model's outputs are more accurate and reliable.

How does MongoDB Atlas Vector Search integrate with other tools and frameworks for building AI applications?

MongoDB Atlas Vector Search works alongside the other tools commonly used to build AI applications. It stores and queries vector embeddings, enabling efficient semantic similarity searches. It is particularly powerful when combined with frameworks like LangChain, which provides an Atlas vector store integration and manages the multi-step processes involved in building LLM applications. In the workflows shown in this course, embeddings are generated with services such as the Hugging Face Inference API or the OpenAI API and then stored in Atlas alongside the original data.

What are the ethical implications and potential challenges associated with using vector search and RAG in real-world applications?

Using vector search and RAG in real-world applications raises several ethical considerations and challenges. Ensuring the accuracy and reliability of AI-generated responses is critical, as users may rely on this information for decision-making. Developers must also be mindful of bias in the data and models used, as this can lead to unfair or discriminatory outcomes. Privacy concerns are another important aspect, particularly when handling sensitive or personal data. To address these challenges, developers should implement robust validation processes, regularly update models and data, and adhere to ethical guidelines and best practices in AI development.

What are some common challenges developers face when implementing vector search and RAG?

Developers often encounter several challenges when implementing vector search and RAG. These include selecting the right embedding model that accurately captures semantic meaning, managing the computational resources required for generating and storing vector embeddings, and optimising the performance of vector search queries in large datasets. Additionally, integrating these technologies with existing systems and ensuring data privacy and security can also be complex tasks. Overcoming these challenges typically involves careful planning, leveraging cloud-based solutions for scalability, and using frameworks like LangChain to streamline development.

Can you provide a real-world example of an application using vector search and RAG?

A real-world example of an application using vector search and RAG is an advanced customer support system. Such a system can use vector search to retrieve relevant support documents based on a customer's query, providing context for an LLM to generate accurate and helpful responses. This approach improves the efficiency of customer support by delivering precise information tailored to the customer's needs, reducing the time and effort required to find answers manually.

How should I choose between embedding models from providers like Hugging Face and OpenAI?

Choosing between embedding models from providers like Hugging Face and OpenAI depends on several factors. Consider how well each model captures the semantic meaning relevant to your application. Evaluate the cost and licensing terms, as some models may be more affordable for large-scale use. Additionally, assess the ease of integration with your existing systems and the availability of support and documentation. Testing multiple models on your own dataset and comparing the results is often the most reliable way to find the one that best meets your needs.

What are the different similarity metrics used in vector search, and how do they affect search results?

Common similarity metrics used in vector search include cosine similarity, dot product, and Euclidean distance.

Cosine similarity measures the cosine of the angle between two vectors, indicating how similar they are regardless of their magnitude.

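Dot product assesses similarity by calculating the sum of the products of corresponding vector components. Unlike cosine similarity, it is sensitive to vector magnitude; for vectors normalised to unit length, the two give identical scores.

Euclidean distance measures the straight-line distance between two vectors, focusing on their spatial separation; smaller distances indicate greater similarity.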

The choice of metric affects search results by influencing how similarity is defined and calculated, which can impact the relevance of retrieved items based on the specific characteristics of your data and application.
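A small numeric sketch with invented 2-d vectors shows how the choice matters. Dot product rewards magnitude, so a long vector can outrank one that points in a closer direction; cosine ignores magnitude; and for Euclidean distance, smaller means more similar. After normalising vectors to unit length, dot product and cosine give the same score, which is why dot product is often paired with normalised embeddings.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cosine(a, b):
    return dot(a, b) / (math.sqrt(dot(a, a)) * math.sqrt(dot(b, b)))


def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def normalize(a):
    length = math.sqrt(dot(a, a))
    return [x / length for x in a]


# Invented vectors: "short" points almost the same way as the query,
# "long" points further away but has a much larger magnitude.
QUERY = [1.0, 0.0]
DOCS = {"short": [0.9, 0.1], "long": [3.0, 2.0]}

if __name__ == "__main__":
    for name, vec in DOCS.items():
        print(name,
              f"cosine={cosine(QUERY, vec):.3f}",
              f"dot={dot(QUERY, vec):.3f}",
              f"euclidean={euclidean(QUERY, vec):.3f}")
```

Here cosine similarity and Euclidean distance both favour "short", while dot product favours "long" because of its larger magnitude.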

What are the key components of a RAG system?

A RAG system consists of several key components:

Vector Embedding Model: Converts data and queries into vector embeddings for semantic comparison.

Vector Search Engine: Utilises vector embeddings to retrieve the most relevant documents from a knowledge base.

Large Language Model (LLM): Generates responses based on the retrieved context and the original query.

Knowledge Base: A repository of documents or data that provide factual information for grounding LLM responses.

These components work together to enhance the accuracy and relevance of LLM-generated outputs by leveraging external knowledge and context.

How should I store vector embeddings for efficient retrieval?

Storing vector embeddings efficiently is crucial for fast retrieval. Use a vector database or a search index like MongoDB Atlas Vector Search that supports high-dimensional data and offers features like approximate nearest neighbours (ANN) algorithms for efficient querying. Ensure that the embeddings are stored alongside or linked to the original data for easy reference. Consider the dimensionality of your embeddings and choose appropriate indexing strategies to optimise performance and scalability, especially when dealing with large datasets.

How do embedding models differ from large language models (LLMs)?

Embedding models and large language models (LLMs) serve different purposes in natural language processing.

Embedding models focus on transforming data into vector embeddings that capture semantic meaning, enabling tasks like similarity search and clustering.

LLMs, on the other hand, are designed to understand and generate human-like text, performing tasks such as language translation, summarisation, and question answering.

In a RAG system the two work together: the embedding model turns documents and queries into vectors so that relevant text can be retrieved, and the LLM then generates a coherent, contextually relevant answer from that retrieved text and the original query.

Certification

About the Certification

Show the world you have AI skills—master advanced vector search and RAG integration with LLMs. This certification highlights your expertise in cutting-edge techniques powering smarter, context-aware applications.

Official Certification

Upon successful completion of the "Certification: Advanced Vector Search & RAG Integration with LLMs", you will receive a verifiable digital certificate. This certificate demonstrates your expertise in the subject matter covered in this course.

Benefits of Certification

- Enhance your professional credibility and stand out in the job market.

- Validate your skills and knowledge in cutting-edge AI technologies.

- Unlock new career opportunities in the rapidly growing AI field.

- Share your achievement on your resume, LinkedIn, and other professional platforms.

How to complete your certification successfully?

To earn your certification, you’ll need to complete all video lessons, study the guide carefully, and review the FAQ. After that, you’ll be prepared to pass the certification requirements.
