About NexaSDK for Mobile

NexaSDK for Mobile is an SDK that runs multimodal AI models fully on-device on iOS and Android, taking advantage of the Apple Neural Engine and Qualcomm Snapdragon NPUs. It aims to let developers add chat, vision, search, and audio capabilities with minimal code while avoiding cloud costs and keeping user data private.

Review

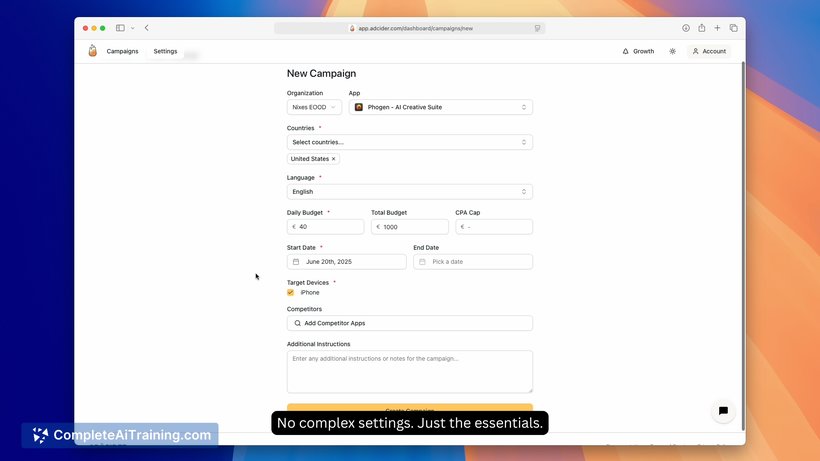

NexaSDK for Mobile focuses on practical on-device AI by routing work to the available accelerators (NPU, GPU, or CPU) through a runtime abstraction. The SDK promises quick integration, advertised as "three lines of code," and emphasizes lower latency, reduced battery impact, and local data processing.

Key Features

- On-device multimodal support: text, vision, and audio models that run locally without sending user data to the cloud.

- Hardware-aware runtime: automatic detection of Apple Neural Engine, Snapdragon NPU, GPU, and CPU with backend routing and fallbacks.

- Performance and efficiency claims: approximately 2× faster inference and around 9× better energy efficiency on supported NPUs compared to non-accelerated runs.

- Quick developer onboarding: simple integration path and built-in model support for LLMs, multimodal models, ASR, embeddings, and OCR.

- Model conversion and quantization: a pipeline for making models compatible across devices, with enterprise tooling for custom models described as planned or already available.
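
The hardware-aware routing described above can be pictured as a preference-ordered list of backends with runtime fallback. The sketch below is a generic illustration under stated assumptions, not NexaSDK's API: the `Backend` type, the `infer_with_fallback` function, and the NPU, then GPU, then CPU ordering are all hypothetical, and real accelerator detection on iOS and Android happens inside the SDK itself.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Sequence, Tuple

# Hypothetical preference order: most efficient accelerator first.
PREFERENCE: List[str] = ["npu", "gpu", "cpu"]


@dataclass
class Backend:
    """Hypothetical stand-in for one compute backend on the device."""
    name: str
    available: bool            # result of (hypothetical) hardware detection
    run: Callable[[str], str]  # executes the model on this backend


def infer_with_fallback(
    backends: Dict[str, Backend],
    prompt: str,
    preference: Sequence[str] = PREFERENCE,
) -> Tuple[str, str]:
    """Try each detected backend in preference order; fall back on failure.

    Returns (backend_name, output). Raises RuntimeError if none succeed.
    """
    errors: List[str] = []
    for name in preference:
        backend = backends.get(name)
        if backend is None or not backend.available:
            continue  # not present on this device, skip it
        try:
            return backend.name, backend.run(prompt)
        except Exception as exc:  # e.g. model not compiled for this backend
            errors.append(f"{name}: {exc}")
    raise RuntimeError("all backends failed: " + "; ".join(errors))


def _npu_unsupported(prompt: str) -> str:
    # Simulates an NPU that is present but cannot run this model.
    raise RuntimeError("model not compiled for NPU")


backends = {
    "npu": Backend("npu", available=True, run=_npu_unsupported),
    "gpu": Backend("gpu", available=True, run=lambda p: f"gpu:{p}"),
    "cpu": Backend("cpu", available=True, run=lambda p: f"cpu:{p}"),
}

used, out = infer_with_fallback(backends, "hello")
print(used, out)  # prints "gpu gpu:hello"
```

Ordering backends by efficiency and catching per-backend failures mirrors the review's point that older phones without NPUs still work, only more slowly, because inference drops to the GPU or CPU path.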

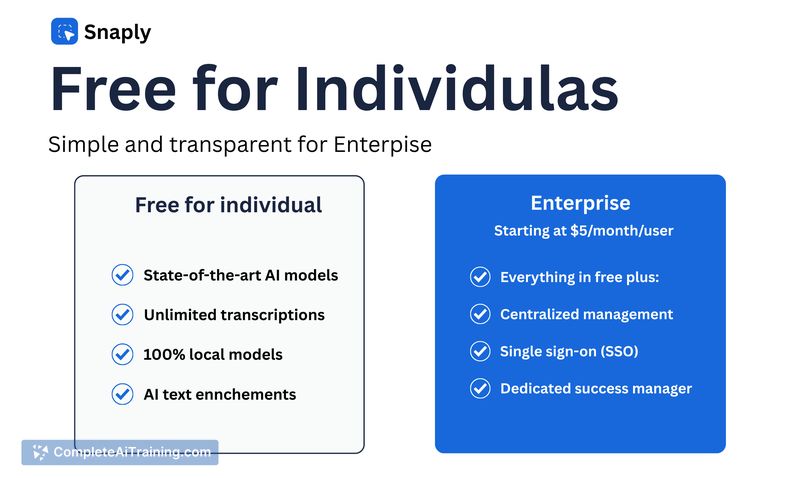

Pricing and Value

The SDK is free for individual developers; large enterprises pay for NPU-accelerated inference at scale. The value proposition centers on eliminating recurring cloud API costs, improving latency and battery life, and keeping sensitive user context private by running models locally. A token (NEXA_TOKEN) activates the SDK and validates certain NPU inference scenarios, and enterprise packages add support for bringing custom models.

Pros

- Strong privacy model since processing happens locally on the device.

- Single SDK for iOS and Android with automatic accelerator selection, simplifying cross-platform releases.

- Claims of meaningful speed and energy improvements on supported NPUs, which is valuable for mobile apps.

- Out-of-the-box support for a wide set of model types (LLM, VLM, ASR, embeddings, OCR).

- Free tier for individual developers lowers the barrier to experimenting with on-device AI.

Cons

- Performance depends on device hardware; older phones without NPUs will rely on GPU/CPU and will be slower.

- Some enterprise features (NPU inference at scale, model conversion tools) carry additional costs or require onboarding.

- An activation token is required for certain NPU use cases, which adds an extra setup and validation step.

Overall, NexaSDK for Mobile is a practical option for teams that need private, low-latency multimodal features on mobile devices and want a single code path for iOS and Android. It fits best for apps that handle sensitive user data, prototypes that need offline capabilities, and teams targeting modern devices with NPUs. Teams supporting older hardware or requiring unlimited enterprise NPU usage should plan for performance and licensing considerations.
